The scenario in mind here was a SAP BW on HANA system reading data from a Suite on HANA system and using the SAP HANA live content installed there.

The Open ODS feature of SAP BW on HANA was to be used here as it allows reading from tables and views exposed via SDA in the local SAP HANA instance.

Now this idea sounds splendid.

Instead of having to manually build an extractor or an data export database view (both of which can be extensive development efforts), why not simply reuse the ready made content of SAP HANA live for this?

As usual the proof of the pudding is in the eating and as soon as it was tried out a severe shortcoming was identified:

select * from "LARS"."IMACCESS_LBPB/SCV_USERS"

('PLACEHOLDER' = ('$$userNameFilter$$', 'USER_NAME= LARS'))

Could not execute 'select * from "LARS"."IMACCESS_LBPB/SCV_USERS"('PLACEHOLDER' = ('$$userNameFilter$$', 'USER_NAME= ...'

SAP DBTech JDBC: [7]: feature not supported:

Cannot use parameters on row table: IMACCESS_LBPB/SCV_USERS: line 1 col 22 (at pos 21)

BOOM!

I just created an Information Model similar to the ones provided with the SAP HANA Live content including the heavily used Input Parameters to enable the model to be flexible and reusable (and also to allow filter push-down) but SAP HANA tells me:

"Nope, I'm not doing this, because the PLACEHOLDER syntax only works for information views and not for 'row tables'."

This 'row table' part of the error message stems from the fact that SAP HANA SPS 9 showed SDA tables as row store tables. This also means that all data read from the SDA source gets temporarily stored in SAP HANA row store tables before further processed in the query.

One reason for doing that probably was that the mapping from ODBC row format to column store format (especially the data type mapping from other vendors DBMS) was easier to manage with the SAP HANA row store.

Having said that, when accessing another SAP HANA system, such format mapping surely should be no problem, right?

Right.

And in fact there is an option to change this: the parameter "virtual_table_format" in the "smart_data_access" section on of the indexserver.ini:

= Configuration

Name | Default

indexserver.ini |

smart_data_access |

virtual_table_format | auto

This parameter can be set to ROW, COLUMN or AUTO (the SPS 11 default value, automatically using the right format depending on the SDA adapter capabilities).

For more on how "capabilities" influence the SDA adapter behavior, check the documentation.

Back last year I wasn't aware of this parameter and so I couldn't try and see if, after changing the parameter, the query would've worked.

Anyhow, like all good problems the question just popped up again and I had an opportunity to look into this topic once more.

"Smarter" at last...

And lo and behold, with SAP HANA SPS 11 the PLACEHOLDER syntax works like a charm even for virtual tables.

SELECT -- local execution ---

"D10_VAL",

"D100_VAL",

sum("KF1") AS "KF1",

sum("KF2") AS "KF2",

sum("CC_KF1_FACTORED") AS "CC_KF1_FACTORED"

FROM "_SYS_BIC"."devTest/stupidFactView"

('PLACEHOLDER' = ('$$IP_FACTOR$$','34'))

WHERE "D10_VAL" = 'DimValue9'

and "D100_VAL" = 'DimValue55'

GROUP BY

"D10_VAL",

"D100_VAL";

/*

D10_VAL D100_VAL KF1 KF2 CC_KF1_FACTORED

DimValue9 DimValue55 -1320141.70 525307979 -44884817

successfully executed in 352 ms 417 µs (server processing time: 7 ms 385 µs)

successfully executed in 356 ms 581 µs (server processing time: 8 ms 437 µs)

successfully executed in 350 ms 832 µs (server processing time: 8 ms 88 µs)

OPERATOR_NAME OPERATOR_DETAILS EXECUTION_ENGINE

COLUMN SEARCH 'DimValue9',

DIM1000.D100_VAL,

SUM(FACT.KF1),

SUM(FACT.KF2),

TO_BIGINT(TO_DECIMAL(SUM(FACT.KF1), 21, 2) * '34')

(LATE MATERIALIZATION, OLTP SEARCH, ENUM_BY: CS_JOIN) COLUMN

AGGREGATION GROUPING:

DIM1000.VAL,

AGGREGATION:

SUM(FACT.KF1),

SUM(FACT.KF2) COLUMN

JOIN JOIN CONDITION:

(INNER) FACT.DIM100 = DIM1000.ID,

(INNER) FACT.DIM10 = DIM10.ID COLUMN

COLUMN TABLE COLUMN

COLUMN TABLE FILTER CONDITION: DIM1000.VAL = n'DimValue55' COLUMN

COLUMN TABLE FILTER CONDITION: DIM10.VAL = n'DimValue9' COLUMN

*/

See how the SPS 11 SQL optimisation is visible in the EXPLAIN PLAN: since the tables involved are rather small and only two dimensions are actually referenced, the OLAP engine (usually responsible for STAR SCHEMA queries) didn't kick in, but the execution was completely done in the Join Engine.

Also notable: the calculated key figure was reformulated internally into a SQL expression AFTER the parameter value (34) was supplied.

This is a nice example for how SAP HANA does a lot of the query optimisation upon query execution.

If I had used a placeholder (question mark - ?) for the value instead, this whole statement would still work, but it would not have been optimised by the SQL optimizer and instead the calculation view would've been executed "as-is".

Now the same statement accessing the "remote" view:

SELECT -- SDA access ---

"D10_VAL",

"D100_VAL",

sum("KF1") AS "KF1",

sum("KF2") AS "KF2",

sum("CC_KF1_FACTORED") AS "CC_KF1_FACTORED"

FROM "DEVDUDE"."self_stupidFactView"

('PLACEHOLDER' = ('$$IP_FACTOR$$','34'))

WHERE "D10_VAL" = 'DimValue9'

and "D100_VAL" = 'DimValue55'

GROUP BY

"D10_VAL",

"D100_VAL";

/*

D10_VAL D100_VAL KF1 KF2 CC_KF1_FACTORED

DimValue9 DimValue55 -1320141.70 525307979 -44884817

successfully executed in 351 ms 430 µs (server processing time: 12 ms 417 µs)

successfully executed in 360 ms 272 µs (server processing time: 11 ms 15 µs)

successfully executed in 359 ms 371 µs (server processing time: 11 ms 914 µs)

OPERATOR_NAME OPERATOR_DETAILS EXECUTION_ENGINE

COLUMN SEARCH 'DimValue9', self_stupidFactView.D100_VAL,

SUM(self_stupidFactView.KF1),

SUM(self_stupidFactView.KF2),

SUM(self_stupidFactView.CC_KF1_FACTORED)

(LATE MATERIALIZATION, OLTP SEARCH, ENUM_BY: REMOTE_COLUMN_SCAN) COLUMN

COLUMN SEARCH SUM(self_stupidFactView.KF1),

SUM(self_stupidFactView.KF2),

SUM(self_stupidFactView.CC_KF1_FACTORED),

self_stupidFactView.D100_VAL

(ENUM_BY: REMOTE_COLUMN_SCAN) ROW

REMOTE COLUMN SCAN SELECT SUM("self_stupidFactView"."KF1"),

SUM("self_stupidFactView"."KF2"),

SUM("self_stupidFactView"."CC_KF1_FACTORED"),

"self_stupidFactView"."D100_VAL"

FROM "_SYS_BIC"."devTest/stupidFactView"

( PLACEHOLDER."$$IP_FACTOR$$" => '34' ) "self_stupidFactView"

WHERE "self_stupidFactView"."D10_VAL" = 'DimValue9'

AND "self_stupidFactView"."D100_VAL" = 'DimValue55'

GROUP BY "self_stupidFactView"."D100_VAL" EXTERNAL

*/

Because of the mentioned parameter setting, SAP HANA now can create a statement that can be send to the "remote" database to produce the wanted output.

Note how the statement in the REMOTE COLUMN SCAN is not exactly the statement we used: the aggregated columns are now the first in the statement and the parameter syntax used is the new "arrow"-style syntax (PLACEHOLDER."$$<name> $$" => '<value>'). This nicely reveals how SDA actually rewrites the statement in order to get the best outcome depending on the source systems capabilities.

For a better overview on what happens in both scenarios please look at this piece of ASCII art in awe

|[ ]| = system boundaries

local statement execution

|[SQL statement -> Information view -> Tables +]|

|

|[ RESULT < -------------------------------+]|

SDA statement execution

|[SQL Statement -> Virtual Table -> SDA connection ->]| --- ODBC transport --> |[ Information view -> Tables +]|

|

|[ RESULT < -----------------------------------]| <-- ODBC transport --- |[--< RESULT <---------------+]|

The Web, Stars and the importance of trying things out

For the question discussed above I of course needed to have a test setup ready.

Creating the SDA remote source was the easiest part here, as I just created a "self" source system (BW veterans will remember this approach) that simply pointed to the very same SAP HANA instance.

In order to emulate a proper SAP HANA live view I needed to create an Information model with Input Parameters, so I thought: easy, let's just quickly build one in the Web based development workbench.

So far I've done most of the modelling in SAP HANA studio, so I took this opportunity to get a bit more familiar with the new generation of tools.

I wanted to build a classic Star-Schema-Query model, so that I could use the Star Join function.

From SAP HANA Studio I knew that this required calculation views of of FACT and DIMENSION to work.

Not a problem at all to create those.

The Open ODS feature of SAP BW on HANA was to be used here as it allows reading from tables and views exposed via SDA in the local SAP HANA instance.

Now this idea sounds splendid.

Instead of having to manually build an extractor or an data export database view (both of which can be extensive development efforts), why not simply reuse the ready made content of SAP HANA live for this?

As usual the proof of the pudding is in the eating and as soon as it was tried out a severe shortcoming was identified:

select * from "LARS"."IMACCESS_LBPB/SCV_USERS"

('PLACEHOLDER' = ('$$userNameFilter$$', 'USER_NAME= LARS'))

Could not execute 'select * from "LARS"."IMACCESS_LBPB/SCV_USERS"('PLACEHOLDER' = ('$$userNameFilter$$', 'USER_NAME= ...'

SAP DBTech JDBC: [7]: feature not supported:

Cannot use parameters on row table: IMACCESS_LBPB/SCV_USERS: line 1 col 22 (at pos 21)

BOOM!

I just created an Information Model similar to the ones provided with the SAP HANA Live content including the heavily used Input Parameters to enable the model to be flexible and reusable (and also to allow filter push-down) but SAP HANA tells me:

"Nope, I'm not doing this, because the PLACEHOLDER syntax only works for information views and not for 'row tables'."

This 'row table' part of the error message stems from the fact that SAP HANA SPS 9 showed SDA tables as row store tables. This also means that all data read from the SDA source gets temporarily stored in SAP HANA row store tables before further processed in the query.

One reason for doing that probably was that the mapping from ODBC row format to column store format (especially the data type mapping from other vendors DBMS) was easier to manage with the SAP HANA row store.

Having said that, when accessing another SAP HANA system, such format mapping surely should be no problem, right?

Right.

And in fact there is an option to change this: the parameter "virtual_table_format" in the "smart_data_access" section on of the indexserver.ini:

= Configuration

Name | Default

indexserver.ini |

smart_data_access |

virtual_table_format | auto

This parameter can be set to ROW, COLUMN or AUTO (the SPS 11 default value, automatically using the right format depending on the SDA adapter capabilities).

For more on how "capabilities" influence the SDA adapter behavior, check the documentation.

Back last year I wasn't aware of this parameter and so I couldn't try and see if, after changing the parameter, the query would've worked.

Anyhow, like all good problems the question just popped up again and I had an opportunity to look into this topic once more.

"Smarter" at last...

And lo and behold, with SAP HANA SPS 11 the PLACEHOLDER syntax works like a charm even for virtual tables.

SELECT -- local execution ---

"D10_VAL",

"D100_VAL",

sum("KF1") AS "KF1",

sum("KF2") AS "KF2",

sum("CC_KF1_FACTORED") AS "CC_KF1_FACTORED"

FROM "_SYS_BIC"."devTest/stupidFactView"

('PLACEHOLDER' = ('$$IP_FACTOR$$','34'))

WHERE "D10_VAL" = 'DimValue9'

and "D100_VAL" = 'DimValue55'

GROUP BY

"D10_VAL",

"D100_VAL";

/*

D10_VAL D100_VAL KF1 KF2 CC_KF1_FACTORED

DimValue9 DimValue55 -1320141.70 525307979 -44884817

successfully executed in 352 ms 417 µs (server processing time: 7 ms 385 µs)

successfully executed in 356 ms 581 µs (server processing time: 8 ms 437 µs)

successfully executed in 350 ms 832 µs (server processing time: 8 ms 88 µs)

OPERATOR_NAME OPERATOR_DETAILS EXECUTION_ENGINE

COLUMN SEARCH 'DimValue9',

DIM1000.D100_VAL,

SUM(FACT.KF1),

SUM(FACT.KF2),

TO_BIGINT(TO_DECIMAL(SUM(FACT.KF1), 21, 2) * '34')

(LATE MATERIALIZATION, OLTP SEARCH, ENUM_BY: CS_JOIN) COLUMN

AGGREGATION GROUPING:

DIM1000.VAL,

AGGREGATION:

SUM(FACT.KF1),

SUM(FACT.KF2) COLUMN

JOIN JOIN CONDITION:

(INNER) FACT.DIM100 = DIM1000.ID,

(INNER) FACT.DIM10 = DIM10.ID COLUMN

COLUMN TABLE COLUMN

COLUMN TABLE FILTER CONDITION: DIM1000.VAL = n'DimValue55' COLUMN

COLUMN TABLE FILTER CONDITION: DIM10.VAL = n'DimValue9' COLUMN

*/

See how the SPS 11 SQL optimisation is visible in the EXPLAIN PLAN: since the tables involved are rather small and only two dimensions are actually referenced, the OLAP engine (usually responsible for STAR SCHEMA queries) didn't kick in, but the execution was completely done in the Join Engine.

Also notable: the calculated key figure was reformulated internally into a SQL expression AFTER the parameter value (34) was supplied.

This is a nice example for how SAP HANA does a lot of the query optimisation upon query execution.

If I had used a placeholder (question mark - ?) for the value instead, this whole statement would still work, but it would not have been optimised by the SQL optimizer and instead the calculation view would've been executed "as-is".

Now the same statement accessing the "remote" view:

SELECT -- SDA access ---

"D10_VAL",

"D100_VAL",

sum("KF1") AS "KF1",

sum("KF2") AS "KF2",

sum("CC_KF1_FACTORED") AS "CC_KF1_FACTORED"

FROM "DEVDUDE"."self_stupidFactView"

('PLACEHOLDER' = ('$$IP_FACTOR$$','34'))

WHERE "D10_VAL" = 'DimValue9'

and "D100_VAL" = 'DimValue55'

GROUP BY

"D10_VAL",

"D100_VAL";

/*

D10_VAL D100_VAL KF1 KF2 CC_KF1_FACTORED

DimValue9 DimValue55 -1320141.70 525307979 -44884817

successfully executed in 351 ms 430 µs (server processing time: 12 ms 417 µs)

successfully executed in 360 ms 272 µs (server processing time: 11 ms 15 µs)

successfully executed in 359 ms 371 µs (server processing time: 11 ms 914 µs)

OPERATOR_NAME OPERATOR_DETAILS EXECUTION_ENGINE

COLUMN SEARCH 'DimValue9', self_stupidFactView.D100_VAL,

SUM(self_stupidFactView.KF1),

SUM(self_stupidFactView.KF2),

SUM(self_stupidFactView.CC_KF1_FACTORED)

(LATE MATERIALIZATION, OLTP SEARCH, ENUM_BY: REMOTE_COLUMN_SCAN) COLUMN

COLUMN SEARCH SUM(self_stupidFactView.KF1),

SUM(self_stupidFactView.KF2),

SUM(self_stupidFactView.CC_KF1_FACTORED),

self_stupidFactView.D100_VAL

(ENUM_BY: REMOTE_COLUMN_SCAN) ROW

REMOTE COLUMN SCAN SELECT SUM("self_stupidFactView"."KF1"),

SUM("self_stupidFactView"."KF2"),

SUM("self_stupidFactView"."CC_KF1_FACTORED"),

"self_stupidFactView"."D100_VAL"

FROM "_SYS_BIC"."devTest/stupidFactView"

( PLACEHOLDER."$$IP_FACTOR$$" => '34' ) "self_stupidFactView"

WHERE "self_stupidFactView"."D10_VAL" = 'DimValue9'

AND "self_stupidFactView"."D100_VAL" = 'DimValue55'

GROUP BY "self_stupidFactView"."D100_VAL" EXTERNAL

*/

Because of the mentioned parameter setting, SAP HANA now can create a statement that can be send to the "remote" database to produce the wanted output.

Note how the statement in the REMOTE COLUMN SCAN is not exactly the statement we used: the aggregated columns are now the first in the statement and the parameter syntax used is the new "arrow"-style syntax (PLACEHOLDER."$$<name> $$" => '<value>'). This nicely reveals how SDA actually rewrites the statement in order to get the best outcome depending on the source systems capabilities.

For a better overview on what happens in both scenarios please look at this piece of ASCII art in awe

|[ ]| = system boundaries

local statement execution

|[SQL statement -> Information view -> Tables +]|

|

|[ RESULT < -------------------------------+]|

SDA statement execution

|[SQL Statement -> Virtual Table -> SDA connection ->]| --- ODBC transport --> |[ Information view -> Tables +]|

|

|[ RESULT < -----------------------------------]| <-- ODBC transport --- |[--< RESULT <---------------+]|

The Web, Stars and the importance of trying things out

For the question discussed above I of course needed to have a test setup ready.

Creating the SDA remote source was the easiest part here, as I just created a "self" source system (BW veterans will remember this approach) that simply pointed to the very same SAP HANA instance.

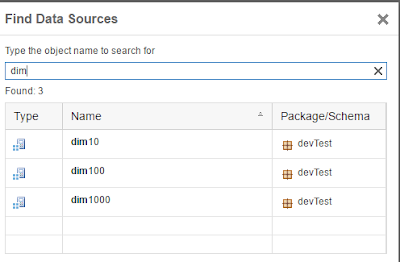

In order to emulate a proper SAP HANA live view I needed to create an Information model with Input Parameters, so I thought: easy, let's just quickly build one in the Web based development workbench.

So far I've done most of the modelling in SAP HANA studio, so I took this opportunity to get a bit more familiar with the new generation of tools.

I wanted to build a classic Star-Schema-Query model, so that I could use the Star Join function.

From SAP HANA Studio I knew that this required calculation views of of FACT and DIMENSION to work.

Not a problem at all to create those.

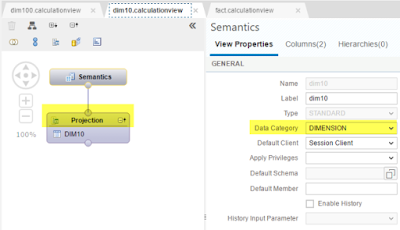

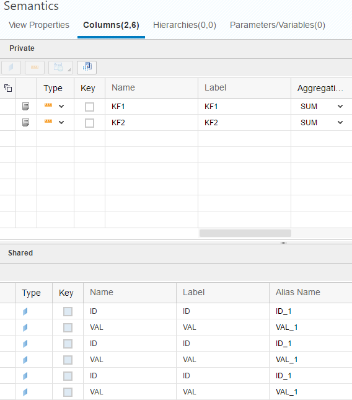

A CUBE type view for the fact table

One of the Dimension type views

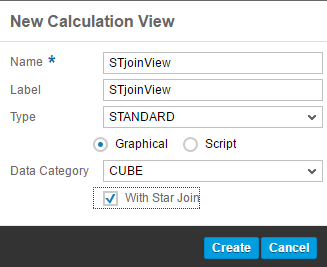

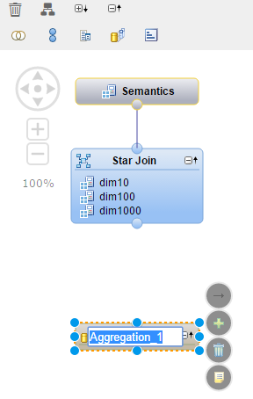

I then went on and created a new calculation view of data type CUBE and checked the WITH STAR JOIN check box.

Next I tried to add all my FACT and DIMENSION views to the join, but boy was I wrong...

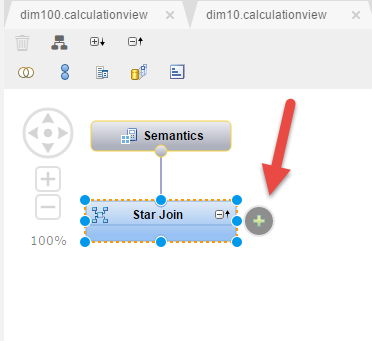

Clicking on the + button should allow to add the views.

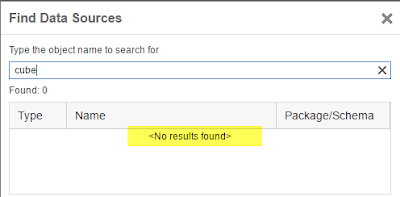

But no option there to add the fact view into the STAR JOIN node - while adding dimension just worked fine:

Now I had all my dimensions in place but no way to join them with fact table:

After some trial and error (and no, I didn't read the documentation and I should have. But on the other hand, a little more guidance in the UI wouldn't hurt either) I figured out that one has to manually add a projection or aggregation node that feeds into the Star Join:.

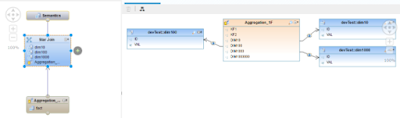

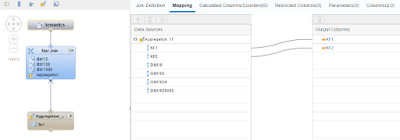

Once this is done, the columns that should be visible in the Star join need to be mapped:

And NOW we can drag and drop the join lines between the different boxes in the Star Join editor.

Be careful not to overlook that the fact table that just got added, might not be within the current window portion. In that case either zoom out with the [-] button or move the view around via mouse dragging or the arrow icons.

After the joins are all defined (classic star schema, left outer join n:1, remember?) again the mapping of the output columns need to be done.

Here, map only the key figures, since the dimension columns are already available in the view output anyhow as "shared columns".

For my test I further went on and added a calculated key figure that takes an Input Parameter to multiply one of the original key figures.

Source: scn.sap.com

No comments:

Post a Comment