In the last part (part 2), we saw how to import a CSV file into HANA using HANA Studio, let’s now see how we can take care of the geometry column.

If you want to learn more about geoJSON, you can check the following website: http://geojson.org/

if you remember, our CSV file (http://datasets.antwerpen.be/v4/gis/grondwaterstroming.csv) has a field named “geometry” which is a geoJSON stream.

As stated before HANA does support this format yet with the proper ST_GEOMETRY data type constructor or constructor. So we will need to convert this field to a “Well-Known Text” format.

I found a “Node.js” package to do this (only one), so I decided to build a little program to convert the field from the local CSV file.

First you will need to install Node.js from their website: https://nodejs.org/en/download/

Once installed you can open command line prompt and run the following command:

node --version

This will return the currently installed and make sure it’s properly installed.

Now, run the following commands:

npm install fast-csv

npm install fs

npm install terraformer

npm install terraformer-wkt-parser

This will install the relevant packages to run the package.

You can now create a new file named ‘convert-geojson-to-wkt-csv.js‘ and add the following code:

var csv = require('fast-csv');

var fs = require('fs');

var Terraformer = require('terraformer');

var WKT = require('terraformer-wkt-parser');

var writeOptions = {

headers : true,

quoteHeaders : true,

quoteColumns : true,

rowDelimiter :'\n',

delimiter : ';'

};

var csvWriteStream = csv

.format(writeOptions);

var writableStream = fs.createWriteStream("C:/temp/grondwaterstroming-out.csv")

.on("finish", function(){

console.log("All done");

});

csvWriteStream.pipe(writableStream);

var readOptions = {

objectMode : true,

headers : true,

delimiter : ';',

quote : '"',

escape : '"'

};

var csvReadStream = fs.createReadStream("C:/temp/grondwaterstroming.csv");

var csvStream = csv

.parse(readOptions)

.on("data", function(data){

data.geometry = WKT.convert(JSON.parse(data.geometry));

csvWriteStream.write(data);

})

.on("end", function(){

csvWriteStream.end();

});

csvReadStream.pipe(csvStream);

The program will read the ‘C:/temp/grondwaterstroming.csv‘ file and out the result in ‘C:/temp/grondwaterstroming-out.csv‘ where the geoJSON field will be converted in a WKT format.

You can now run the following command:

node convert-geojson-to-wkt-csv.js

And you can now check the output file: ‘C:/temp/grondwaterstroming-out.csv‘

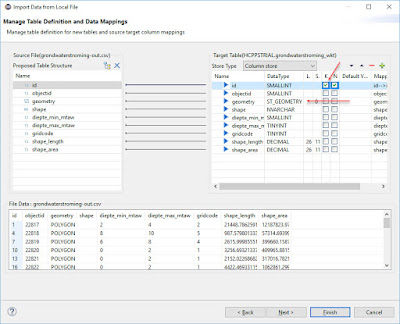

Let’s now import that files in HANA Studio like we did in the last blog, but this set the geometry field to ST_GEOMETRY instead of BLOB.

If you want to learn more about geoJSON, you can check the following website: http://geojson.org/

if you remember, our CSV file (http://datasets.antwerpen.be/v4/gis/grondwaterstroming.csv) has a field named “geometry” which is a geoJSON stream.

As stated before HANA does support this format yet with the proper ST_GEOMETRY data type constructor or constructor. So we will need to convert this field to a “Well-Known Text” format.

I found a “Node.js” package to do this (only one), so I decided to build a little program to convert the field from the local CSV file.

First you will need to install Node.js from their website: https://nodejs.org/en/download/

Once installed you can open command line prompt and run the following command:

node --version

This will return the currently installed and make sure it’s properly installed.

Now, run the following commands:

npm install fast-csv

npm install fs

npm install terraformer

npm install terraformer-wkt-parser

This will install the relevant packages to run the package.

You can now create a new file named ‘convert-geojson-to-wkt-csv.js‘ and add the following code:

var csv = require('fast-csv');

var fs = require('fs');

var Terraformer = require('terraformer');

var WKT = require('terraformer-wkt-parser');

var writeOptions = {

headers : true,

quoteHeaders : true,

quoteColumns : true,

rowDelimiter :'\n',

delimiter : ';'

};

var csvWriteStream = csv

.format(writeOptions);

var writableStream = fs.createWriteStream("C:/temp/grondwaterstroming-out.csv")

.on("finish", function(){

console.log("All done");

});

csvWriteStream.pipe(writableStream);

var readOptions = {

objectMode : true,

headers : true,

delimiter : ';',

quote : '"',

escape : '"'

};

var csvReadStream = fs.createReadStream("C:/temp/grondwaterstroming.csv");

var csvStream = csv

.parse(readOptions)

.on("data", function(data){

data.geometry = WKT.convert(JSON.parse(data.geometry));

csvWriteStream.write(data);

})

.on("end", function(){

csvWriteStream.end();

});

csvReadStream.pipe(csvStream);

The program will read the ‘C:/temp/grondwaterstroming.csv‘ file and out the result in ‘C:/temp/grondwaterstroming-out.csv‘ where the geoJSON field will be converted in a WKT format.

You can now run the following command:

node convert-geojson-to-wkt-csv.js

And you can now check the output file: ‘C:/temp/grondwaterstroming-out.csv‘

Let’s now import that files in HANA Studio like we did in the last blog, but this set the geometry field to ST_GEOMETRY instead of BLOB.

But unfortunately this file contains some invalid polygons, and you will get the following error message: “Invalid polygon: multiple exterior rings“.

So let’s remove these polygons with holes now using the following code:

var csv = require('fast-csv');

var fs = require('fs');

var Terraformer = require('terraformer');

var WKT = require('terraformer-wkt-parser');

var writeOptions = {

headers : true,

quoteHeaders : true,

quoteColumns : true,

rowDelimiter :'\n',

delimiter : ';'

};

var csvWriteStream = csv

.format(writeOptions);

var writableStream = fs.createWriteStream("C:/temp/grondwaterstroming-out.csv")

.on("finish", function(){

console.log("All done");

});

csvWriteStream.pipe(writableStream);

var readOptions = {

objectMode : true,

headers : true,

delimiter : ';',

quote : '"',

escape : '"'

};

var csvReadStream = fs.createReadStream("C:/temp/grondwaterstroming.csv");

var csvStream = csv

.parse(readOptions)

.on("data", function(data){

var geometry = new Terraformer.Primitive(JSON.parse(data.geometry));

if (geometry.hasHoles()) {

console.log("found holes in " + data.id + ". let's remove the holes");

} else {

data.geometry = WKT.convert(JSON.parse(data.geometry));

csvWriteStream.write(data);

}

})

.on("end", function(){

csvWriteStream.end();

});

csvReadStream.pipe(csvStream);

The newly generate file will have less lines, and should import fine with the same procedure as earlier (don’t forget to change the geometry type from BLOB to ST_GEOMETRY).

OK, this is all great but as I said earlier these Opendata CSV files may not contain all the data (mostly the first 20k row), so you may want or need to use the JSON format from time to time because they may support pagination. This is waht we will discuss in the last of the series: Port of Antwerp from the Opendata challenge perspective – part 4

No comments:

Post a Comment