The prerequisites for your SAP HANA instance:

CREATE DATABASE DB1 SYSTEM USER PASSWORD Initial1;

alter system start database DB1;

- A tenant DB, which you can create with the following command.

CREATE DATABASE DB1 SYSTEM USER PASSWORD Initial1;

alter system start database DB1;

- While we are abiding by better practices, it’s a good idea to create a Developer user instead of using the SYSTEM user for development.

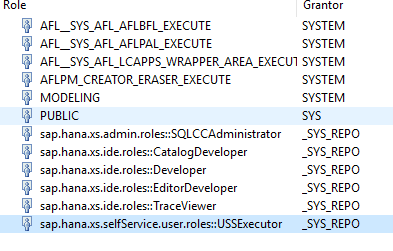

- A user with the proper permissions to execute the PAL functions and DROP/CREATE ANY in a schema (different from the AFL schema, please). The following is a sample list of roles taking into account what this blog needs, but you need to restrict permissions depending on your needs (you will also need CATALOG READ and the proper package privileges):

- The tenant DB needs to be reachable from the outside if you want to call the oData service from a Google Script. This may imply overriding host resolution and getting a Fully Qualified Domain Name (of course, configuring your hosts file in the meantime).

- XS tooling is installed as explained here

- You have installed SAP HANA Studio or the add-on for eclipse

- You have connected to your tenant database

The fun part

You are now ready for some classic extended applications. This kind of project includes the descriptors that handle log in and leverage the WHAT IS XS

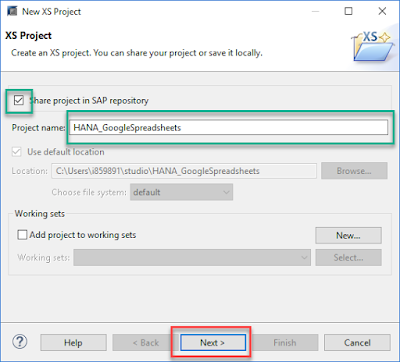

Go into File -> New -> XS Project

Add a Name for the Project, keep the “Share project in SAP repository” flag on and click on “Next”.

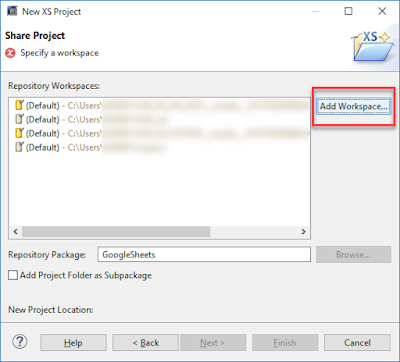

Click on **Add Workspace**

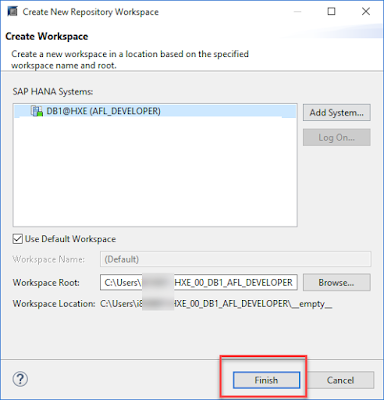

Click on the System you are logged into:

Add a name for the repository package and click on Next.

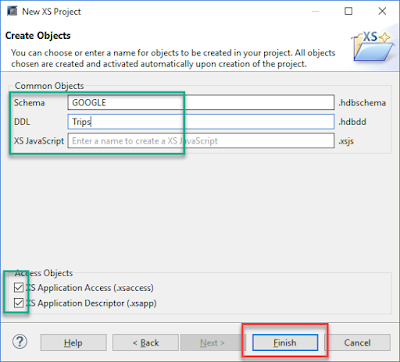

Add a name to the `Schema`, a name for the `.hdbdd` file (this is where you will create your tables)

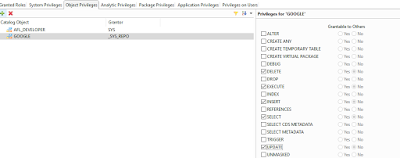

Go into the security menu, look for your user ID and grant yourself with the necessary access to the schema:

- Create ANY

- Create temporary table

- Delete

- Drop

- Execute

- Insert

- select

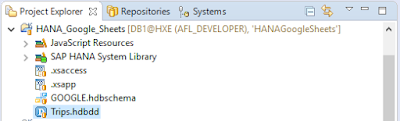

before you continue with development, I would advise you go into the “Repositories” tab, right click on your newly-created package and click on Check out and Import Projects.

Create Development Objects

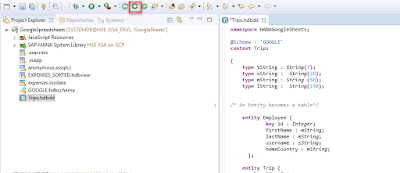

You can now see the artifacts the XS Project wizard has created for you. Double click on the Trips.hdbdd

And copy the following code:

namespace HANAGoogleSheets;

@Schema : 'GOOGLE'

context Trips

{

type tString : String(3);

type sString : String(20);

type mString : String (50);

type lString : String(150);

/* An Entity becomes a table*/

entity Employee {

key id : Integer;

firstName : mString;

lastName : mString;

username : sString;

homeCountry : mString;

};

entity Trip {

key id : Integer;

fromDate : LocalDate; //2016-01-02

toDate : LocalDate;

destination : mString;

description : lString;

approver : Association to Employee on approver.username = traveller;

traveller : sString;

approver_uname : sString;

};

//The Predictive Analytic Library does not currently support the decimal data type. The decimal needs to be "Double" (BinaryFloat in CDS)

// or can be converted later using the following entries in the signature table:

//(-2, '_SYS_AFL', 'CAST_DECIMAL_TO_DOUBLE', 'INOUT');

//(-1, '_SYS_AFL', 'CREATE_TABLE_TYPES', 'INOUT');

entity Expenses {

key id : Integer;

key tripid : Integer;

expenseType : sString;

amount : BinaryFloat;

currency : tString;

trip : Association to Trip on trip.id = tripid;

};

define view ExpensesView as SELECT from Expenses{

Expenses.id as ExpenseId,

trip.id as TripId,

trip.toDate as tripDate,

trip.traveller as employee,

trip.approver_uname as approver,

trip.destination as destination,

Expenses.expenseType as eType,

Expenses.amount as amount

};

entity periodConversion {

key periodId : String(2);

month : String(2);

year : String(4);

}

};

Click on the Green arrow on the top bar to Activate the artifacts.

If you go into the Systems tab and into Catalog, you will see that the Entities have become tables and the joins have become Views. This is Core Data Services providing a simpler way of creating database artifacts. Moving forward, if you need to alter or move the objects you can do it from this file.

In case you are wondering, the “periodConversion” table contains a translation from periods such as “2017-02” into integers, as the predictive procedure expects an integer and a double.

I used the M_TIME_DIMENSION table to perform the translation between the transaction date and the period. This table can be populated by going the SAP HANA Modeler perspective and go into Help-> Quick view. You can start the wizard from the “Generate Time Data” option.

It’s Forecast Time!

The tool for this is as simple as the SQL command. I strongly recommend you save the SQL file this time if, like me, you generally don’t save them.

The following script uses a view) to join the transactional data from the expenses and the M_TIME_DIMENSION table.

It also outputs an integer as the month and a double as the total value for that month. The assumption in this case is that the periods correspond to a single year. Otherwise, you would need a different table for mapping:

The code in the “.hdbview” file is the following:

schema="GOOGLE";

query = "SELECT TO_INT(TIMES.\"MONTH_INT\") as \"TIMESTAMP\", SUM(\"amount\") as \"VALUE\" from \"GoogleSheets::Trips.ExpensesView\" as EXPENSES join \"_SYS_BI\".\"M_TIME_DIMENSION\" as TIMES on EXPENSES.\"tripDate\" = TIMES.\"DATE_SQL\" group by TIMES.\"MONTH_INT\" order by \"TIMESTAMP\"; ";

depends_on_table=["HANAGoogleSheets::Trips.ExpensesView"];

And this is the SQL that will call the wrapper to build your Predictive Analytic procedure:

/*PAL Selection, training and forecast*/

set schema "GOOGLE";

DROP TYPE PAL_FORECASTMODELSELECTION_DATA_T;

CREATE TYPE PAL_FORECASTMODELSELECTION_DATA_T AS TABLE ("TIMESTAMP" INT, "VALUE" DOUBLE);

DROP TYPE PAL_CONTROL_T;

CREATE TYPE PAL_CONTROL_T AS TABLE ("NAME" VARCHAR(100), "INTARGS" INT, "DOUBLEARGS" DOUBLE, "STRINGARGS" VARCHAR(100));

DROP TYPE PAL_OUTPARAMETER_T;

CREATE TYPE PAL_OUTPARAMETER_T AS TABLE ("NAME" VARCHAR(100), "VALUE" VARCHAR(100));

DROP TYPE PAL_FORECASTMODELSELECTION_FORECAST_T;

CREATE TYPE PAL_FORECASTMODELSELECTION_FORECAST_T AS TABLE ("TIMESTAMP" INT, "VALUE" DOUBLE, "DIFFERENCE" DOUBLE);

/*Signature table

1st position: Input table type

2nd position: Control table

3rd position: Output Parameter table type

4th position: Results*/

DROP TABLE PAL_FORECASTMODELSELECTION_PDATA_TBL;

CREATE COLUMN TABLE PAL_FORECASTMODELSELECTION_PDATA_TBL("POSITION" INT, "SCHEMA_NAME" NVARCHAR(256), "TYPE_NAME" NVARCHAR(256), "PARAMETER_TYPE" VARCHAR(7));

INSERT INTO PAL_FORECASTMODELSELECTION_PDATA_TBL VALUES (1,'GOOGLE', 'PAL_FORECASTMODELSELECTION_DATA_T','IN');

INSERT INTO PAL_FORECASTMODELSELECTION_PDATA_TBL VALUES(2,'GOOGLE', 'PAL_CONTROL_T','IN');

INSERT INTO PAL_FORECASTMODELSELECTION_PDATA_TBL VALUES(3,'GOOGLE', 'PAL_OUTPARAMETER_T','OUT');

INSERT INTO PAL_FORECASTMODELSELECTION_PDATA_TBL VALUES(4,'GOOGLE', 'PAL_FORECASTMODELSELECTION_FORECAST_T','OUT');

/* Call the wrapper procedure to generate the predictive procedure, named "PALFORECASTSMOOTHING_PROC" in this example

CALL SYS.AFLLANG_WRAPPER_PROCEDURE_CREATE (‘<area_name>’, ‘<function_name>’, ‘<schema_name>’, '<procedure_name>', <signature_table>);

<area_name>: Always set to AFLPAL.

<function_name>: A PAL built-in function name.

<schema_name>: A name of the schema that you want to create.

<procedure_name>: A name for the PAL procedure. This can be anything you want.

<signature_table>: A user-defined table variable. The table contains records to describe the position, schema name, table type name, and parameter type, as defined below:

*/

CALL SYS.AFLLANG_WRAPPER_PROCEDURE_DROP('GOOGLE', 'PALFORECASTSMOOTHING_PROC');

CALL SYS.AFLLANG_WRAPPER_PROCEDURE_CREATE('AFLPAL', 'FORECASTSMOOTHING', 'GOOGLE', 'PALFORECASTSMOOTHING_PROC',PAL_FORECASTMODELSELECTION_PDATA_TBL);

/*Create the temporary Control table

Each row contains only one parameter value, either integer, double or string.

This configuration tells the wrapper that we will be training the model based on 90% of the data and that we want the forecast to start after the seventh period

*/

DROP TABLE #PAL_CONTROL_TBL;

CREATE LOCAL TEMPORARY COLUMN TABLE #PAL_CONTROL_TBL ("NAME" VARCHAR(100), "INTARGS" INT, "DOUBLEARGS" DOUBLE,"STRINGARGS" VARCHAR(100));

INSERT INTO #PAL_CONTROL_TBL VALUES ('FORECAST_MODEL_NAME', NULL, NULL,'TESM');

INSERT INTO #PAL_CONTROL_TBL VALUES ('THREAD_NUMBER',8, NULL, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('ALPHA', NULL,0.4, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('BETA', NULL,0.4, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('GAMMA', NULL,0.4, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('CYCLE',2, NULL, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('FORECAST_NUM',3, NULL, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('SEASONAL',0, NULL, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('INITIAL_METHOD',1, NULL, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('MAX_ITERATION',300, NULL, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('TRAINING_RATIO',NULL, 0.90, NULL);

INSERT INTO #PAL_CONTROL_TBL VALUES ('STARTTIME',7,NULL, NULL);

DROP TABLE PAL_OUTPARAMETER_TBL;

CREATE COLUMN TABLE PAL_OUTPARAMETER_TBL LIKE PAL_OUTPARAMETER_T;

DROP TABLE PAL_FORECASTMODELSELECTION_RESULT_TBL;

CREATE COLUMN TABLE PAL_FORECASTMODELSELECTION_RESULT_TBL LIKE PAL_FORECASTMODELSELECTION_FORECAST_T;

CALL GOOGLE.PALFORECASTSMOOTHING_PROC( "GOOGLE"."HANAGoogleSheets::EXPENSES_SORTED", "#PAL_CONTROL_TBL", PAL_OUTPARAMETER_TBL, PAL_FORECASTMODELSELECTION_RESULT_TBL) WITH OVERVIEW;

SELECT * FROM PAL_OUTPARAMETER_TBL;

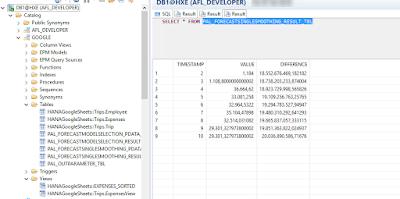

SELECT * FROM PAL_FORECASTMODELSELECTION_RESULT_TBL;

What sorcery are those many dots and colons?? You will see that, for example, the view I created as the input for data is referenced in the code as “GOOGLE”.”HANAGoogleSheets::EXPENSES_SORTED”. “GOOGLE” stands for the schema (the same name as in the .hdbschema file). “HANAGoogleSheets” is the package as you see it in the `Repositories` tab. If this Package had been included in an existing package as a subpackage, you would need to use the full path separated by dots, e.g., rootPackage.secondlevelpackage.thisPackage.

Execute the script and you will see the results from the procedure:

Ta-da! Your forecast is now in table PAL_FORECASTMODELSELECTION_RESULT_TBL.

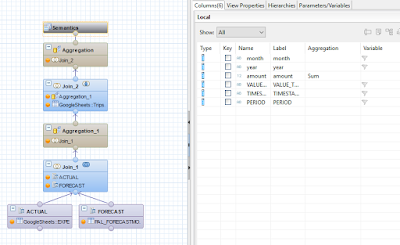

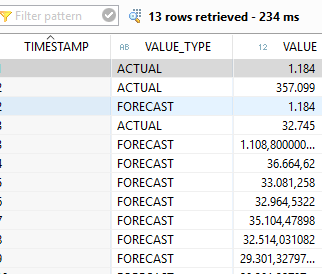

I created a calculation view to join the actual and forecast values and let HANA handle the joins and aggregations of the many transaction records we are expecting, which is what she does like a champ and in microseconds.

Resulting in:

You may choose to create a calculation view, a CDS view or even publish the results table itself.

Exposing the oData service through an anonymous connection

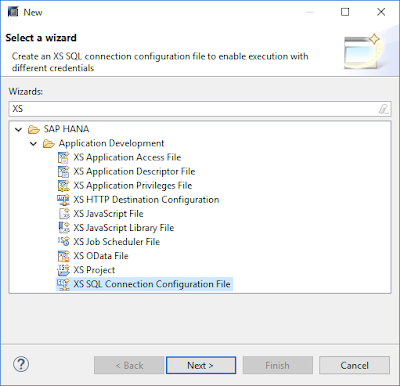

I created an anonymous oData service that will not request credentials from a user, and will use an internal user (and the roles assigned to it) instead. The steps are fairly simple.

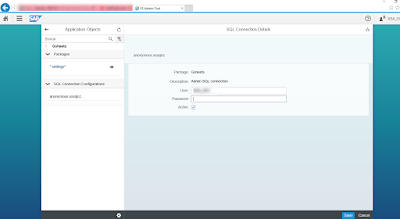

You will need to create an SQL Connection Configuration file we will use to enable execution without requesting login details from the user:

You now need to configure the connection from your XS Administration tool, which you can probably find in a link like this one: https://your-host:4300/sap/hana/xs/admin/

After saving, you should see a green message indicating that the SQLCC artifact is enabled for runtime application usage.

The only missing step is to make sure the following lines are as follows in your .xsaccess file:

"anonymous_connection" : "<<YourRootPackage>>::anonymous",

"exposed" : true,

"authentication" : null

No comments:

Post a Comment