If you successfully finished my last blog post about data aging ‘General Iinformation about data aging’ it is time for the deep dive. How SAP has implement it and how it works in detail.

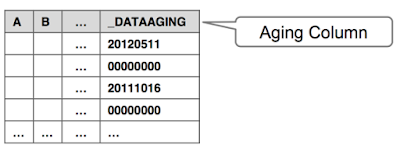

As you already have read partitioning is an elementary part of data aging process to separate the current from the historical. Therefor range partitioning is used with an additional column called ‘_DATAAGING’:

As you already have read partitioning is an elementary part of data aging process to separate the current from the historical. Therefor range partitioning is used with an additional column called ‘_DATAAGING’:

Short separation for the the two parts:

Current data is the data relevant to the operations of application objects, needed in day-to day-business transactions. The application logic determines when current data turns historical by using its knowledge about the object’s life cycle. The application logic validates the conditions at the object level from a business point of view, based on the status, execution of existence checks, and verification of cross-object dependencies.

Historical data is data that is not used for day-to day-business transactions. By default, historical data is not visible to ABAP applications. It is no longer updated from a business point of view. The application logic determines when current data turns historical by using its knowledge about the object’s lifecycle. The application logic validates the conditions at object level from a business point of view, based on the status, executing existence checks, and verifying cross object dependencies.

Limitation: There can only be one current partition with max. 2 billion rows, but there can be multiple ones for the historical part.

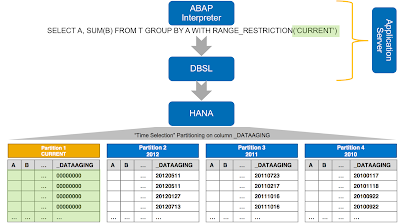

If you activate data aging for one object / table you only can select it via a special syntax. The SAP HANA-specific database shared library (DBSL) in the ABAP server adds a corresponding clause to the SQL statements that are sent to SAP HANA. The classes ABAP CL_ABAP_SESSION_TEMPERATURE and CL_ABAP_STACK_TEMPERATURE enables the data access for the historical data.

Selection

By adding the clause WITH RANGE RESTRICTION (‘CURRENT’) to a SQL statement, SAP HANA restricts the operation to the hot data partition only.

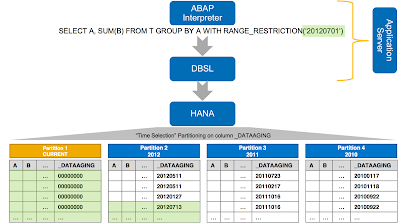

This restricts the operation to all partitions with data temperatures above the specified value. The clause WITH RANGE RESTRICTION (‘20120701’), for example, tells SAP HANA to search the hot partition and all cold partitions that contain values greater or equal than ‘20120701’. Range restriction can be applied to SELECT, UPDATE, UPSERT, DELETE statements and to procedure calls.

RANGE RESTRICTION Current

RANGE RESTRICTION Time

The query will select the current partition 1 and partly the partition 2. HANA won’t load the complete partition 2 into memory! Cold partitions make use of Paged Attributes. While ordinary columns are loaded entirely into memory upon first access, Paged Attributes are loaded page-wise. Ideally only the pages that hold the requested rows are being loaded.

Parameter

It is possible to configure the amount of memory used by page loadable columns. The parameter are a little bit confusing. The defaults in megabyte or procent are:

global.ini:page_loadable_columns_min_size=1047527424

global.ini:page_loadable_columns_limit=1047527424

global.ini:page_loadable_columns_min_size_rel=5

global.ini:page_loadable_columns_limit_rel=10

The first ones are set with a default of 999TB!

The last two ones setting a relative lower and upper (*_rel) threshold for the total memory size of page loadable column resources per service in percent of the process allocation limit.

When the total size of page loadable column resources per service falls below the minimum of the two threshold values (page_loadable_columns_min*) resulting from the corresponding parameters (= effective lower threshold value), the HANA System stops unloading page loadable column resources from memory with first priority based on an LRU strategy and switches to a weighted LRU strategy for all resources.

When the total memory size of page loadable column resources per service exceeds the minimum of the two threshold (page_loadable_columns_limit*) values resulting from the parameters the HANA System automatically starts unloading page loadable column resources from memory with first priority based on an LRU strategy.

You can set them by hana studio interface or via sql command (example value 50GB):

ALTER SYSTEM ALTER CONFIGURATION ('global.ini', 'System' ) SET ('memoryobjects', 'page_loadable_columns_min_size') = '51200' WITH RECONFIGURE;

Partitioning

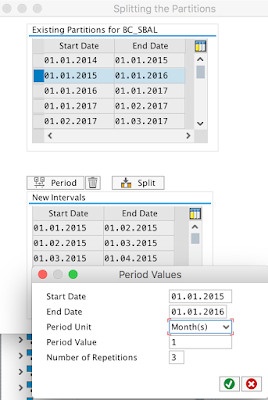

You can define a partition range for every table. For instance you can define a partition per year and if the partitions are getting too big you can repartition (only splitting) them from yearly to monthly:

But be careful, currently it is not possible to merge partitions with the transaction DAGPTM (tested with release: S/4 1610 FP1). So start with a high level range (year) and split them if needed.

Known Bugs

| Note | Description | Fixed with |

| 2509513 | Indexserver Crash at UnifiedTable:: ColumnFragmentPagedNBitReaderIterator::r eposition During Table Load of Cold Paged Partition |

Revisions: >= 122.12 (SPS12) >= 012.01 (SPS01) |

| 2497016 | Pages Belonging to Cold Partitions Created With Paged Attribute Are Not Unloaded by The Resource Manager if They Are Pinned by an Inverted Index |

Revisions: >= 122.10 (SPS12) >= 002.01 (SPS00) >= 012.00 (SPS01) |

| 2440614 | SAP HANA: SQL error for MDX statement with WITH RANGE_RESTRICTION |

DBSL: 745 Patch Level 415 749 Patch Level 210 750 Patch Level 27 751 Patch Level 17 752 Patch Level 7 |

| 2128075 | AppLog: Short dump ASSERTION_FAILED in CL_BAL_DB_SEARCH |

SAP_BASIS SP: SAP_BASIS 740 SP13 SAP_BASIS 750 SP3 |

No comments:

Post a Comment