Since this year's Sapphire, SAP and Intel have announced some new details regarding Skylake and NVM (non-volatile memory).

With the new processors it should be possible to gain ~60% more performance when running HANA workloads on them. Additionally, new DIMMs based on 3D XPoint technology (NVM) help overcome the traditional I/O bottlenecks that slow data flows and limit application capacity and performance.

Until now there were no details about how SAP will use this new technology. It can be used as a filesystem or as an in-memory format.

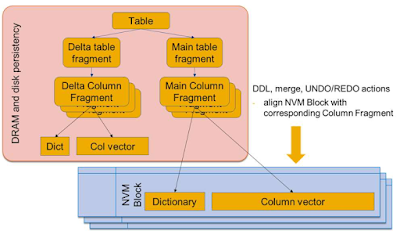

1. Initial situation

◉ tests only include the in-memory format for NVM

◉ tests only consider the main table fragments

◉ each Main Column Fragment locates its associated NVRAM block and points directly to its column vector and dictionary backing arrays

◉ no change in the process of data creation for a new Main Column Fragment
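The direct-pointer idea from the list above can be illustrated with a memory-mapped file standing in for an NVRAM block. This is only a minimal sketch under stated assumptions: the file layout, `write_fragment`, and the `MainColumnFragment` class are hypothetical and are not HANA's real format or API.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical block layout for one main column fragment (an assumption for
# illustration only):
#   header:        dictionary entry count, row count (two little-endian uint32)
#   dictionary:    fixed-width 8-byte values
#   column vector: one uint32 dictionary index per row
HEADER = struct.Struct("<II")
DICT_ENTRY = struct.Struct("<8s")
INDEX = struct.Struct("<I")


def write_fragment(path, dictionary, value_ids):
    """Persist a dictionary-encoded column once, e.g. when the main is built."""
    with open(path, "wb") as f:
        f.write(HEADER.pack(len(dictionary), len(value_ids)))
        for value in dictionary:
            f.write(DICT_ENTRY.pack(value.encode("ascii")))
        for vid in value_ids:
            f.write(INDEX.pack(vid))


class MainColumnFragment:
    """Reads straight from the mapped block; nothing is copied at load time."""

    def __init__(self, path):
        self._file = open(path, "rb")
        self._map = mmap.mmap(self._file.fileno(), 0, access=mmap.ACCESS_READ)
        self.dict_count, self.row_count = HEADER.unpack_from(self._map, 0)
        self._dict_offset = HEADER.size
        self._vector_offset = self._dict_offset + self.dict_count * DICT_ENTRY.size

    def value_at(self, row):
        # Column vector lookup, then dictionary lookup, both inside the mapping.
        (vid,) = INDEX.unpack_from(self._map, self._vector_offset + row * INDEX.size)
        (raw,) = DICT_ENTRY.unpack_from(
            self._map, self._dict_offset + vid * DICT_ENTRY.size
        )
        return raw.rstrip(b"\0").decode("ascii")

    def close(self):
        self._map.close()
        self._file.close()


path = os.path.join(tempfile.mkdtemp(), "col.frag")
write_fragment(path, ["DE", "FR", "US"], [2, 0, 0, 1])
fragment = MainColumnFragment(path)
values = [fragment.value_at(row) for row in range(fragment.row_count)]
fragment.close()
```

"Loading" the fragment here only maps the block and reads an 8-byte header. The column vector and dictionary are never copied into a separate buffer, which is the property the in-memory NVM format relies on.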

Scenario 1 (2.1 + 2.2) OLTP and OLAP:

◉ Table used: 4 million records, 500 columns

◉ Hardware: Intel Xeon processors E5-4620 v2 with 8 cores each, running at 2.6 GHz without Hyper-Threading

Scenario 2 (2.3):

◉ Table used: 4 million records, 500 columns, size ~5GB

◉ Hardware: Intel(R) Xeon(R) CPU E7-8880 v2 @ 2.50GHz with 1TB Main Memory

2. Results in a nutshell

2.1 OLTP workload

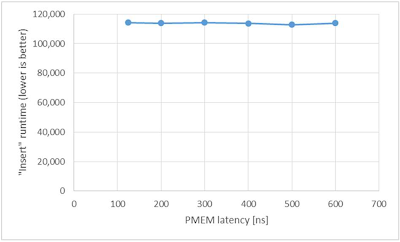

2.1.1 Inserts

Insert performance is not affected, because the delta store, where the data is changed in case of an insert, remains in DRAM.

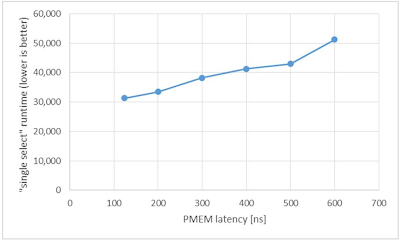

2.1.2 Single Select

Single select at 6x latency: runtime increases by ~66%

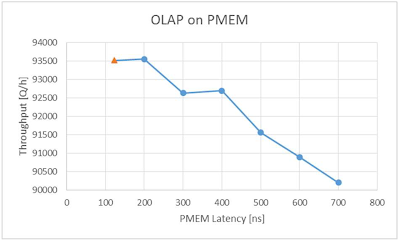
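One way to read the single-select number is a simple linear model: assume a fraction f of the DRAM runtime is spent waiting on memory and that only this part scales with the latency factor k, so the relative runtime is 1 + f * (k - 1). This model and the function names are assumptions for illustration and do not come from the whitepaper.

```python
def implied_memory_fraction(slowdown, latency_factor):
    """Solve slowdown = 1 + f * (latency_factor - 1) for f."""
    return (slowdown - 1.0) / (latency_factor - 1.0)


def predicted_slowdown(memory_fraction, latency_factor):
    """Relative runtime under the assumed linear model."""
    return 1.0 + memory_fraction * (latency_factor - 1.0)


# Measured above: runtime +66% (factor 1.66) at 6x latency.
f = implied_memory_fraction(1.66, 6)
```

Under this assumption roughly 13% of the single-select runtime in DRAM would be memory wait time. Real workloads are unlikely to be this linear (caches, prefetching), so treat it as a rough estimate only.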

2.2 OLAP workload

2.2.1 I/O Throughput

At 7x latency, I/O throughput decreases by ~3%

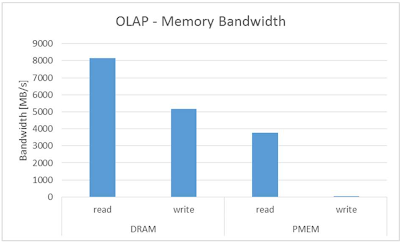

2.2.2 OLAP Memory bandwidth

Read performance DRAM : NVRAM: factor 2.27

Write operations (delta store) take place only in DRAM, so no comparison is possible.

2.3 Restart times and memory footprint

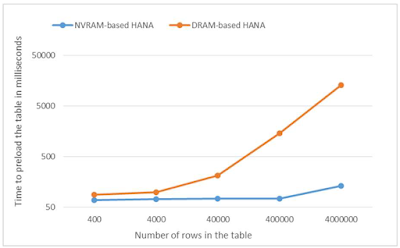

2.3.1 Table preload

Tables with 400 to 4,000,000 records are preloaded (table used: 5GB in size, 4 million records, 100 columns). With DRAM only, the time needed rises sharply beyond 400,000 rows. In the worst case this means a factor of 100!

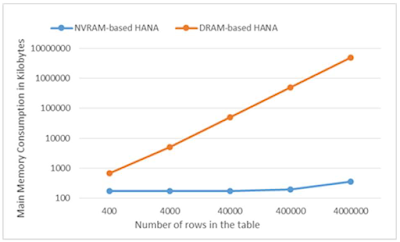

2.3.2 DRAM consumption

DRAM savings if you place the main fragments into NVM at 4 million rows: ~8GB

These measurements don't include the ~5GB of shared memory allocated from NVRAM. There are no exact details on how this scales with bigger or more tables.

“For the NVRAM-aware HANA case, we could see ~5GB being allocated from NVRAM based on Shared Memory (i.e. /dev/shm) when all 4 million rows of the table are loaded”

Also observed:

“In DRAM case, majority of CPU cost is being spent in disk-based reads whereas for NVRAM, the disk reads are absent since we map data directly into process address space.”

3. Challenges

Here are some challenges, some of which are specific to HANA. The whitepaper was written in fairly general terms so that it can also be applied to other databases.

3.1 Possibility for table pinning

Pinning of tables is already available in some other databases. This means that defined tables are placed permanently in main memory. No LRU (= least recently used) mechanism will unload them.

With NVM, most of the tables can be placed in the cheaper and bigger memory. But might it make sense to place some important, frequently accessed tables permanently in main memory?
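To make the pinning idea concrete, here is a minimal sketch of an LRU table cache in which pinned tables are skipped during eviction. The `TableCache` class, its capacity measured in number of tables, and the table names are all hypothetical; this is not how HANA or any other database implements it.

```python
from collections import OrderedDict


class TableCache:
    """LRU cache of loaded tables; pinned tables are never unloaded."""

    def __init__(self, capacity):
        self.capacity = capacity          # max number of tables kept in DRAM
        self._tables = OrderedDict()      # name -> data, least recently used first
        self._pinned = set()

    def pin(self, name):
        self._pinned.add(name)

    def access(self, name, loader):
        if name in self._tables:
            self._tables.move_to_end(name)    # mark as most recently used
            return self._tables[name]
        data = loader(name)                   # e.g. load from NVM or disk
        self._tables[name] = data
        self._evict_if_needed()
        return data

    def _evict_if_needed(self):
        while len(self._tables) > self.capacity:
            # Oldest table that is not pinned, if there is one.
            victim = next((n for n in self._tables if n not in self._pinned), None)
            if victim is None:
                break                         # everything is pinned, nothing to unload
            del self._tables[victim]

    def loaded(self):
        return list(self._tables)


cache = TableCache(capacity=2)
cache.pin("VBAK")
for table in ["VBAK", "MARA", "BSEG"]:
    cache.access(table, loader=lambda name: f"<data of {name}>")
```

Without the pin, the third access would unload `VBAK` as the least recently used table. With the pin, `MARA` is unloaded instead and `VBAK` stays in DRAM.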

3.2 Load / unload affect NVRAM data?

Currently, when a DBA or the system itself unloads a table because of the configured residence time, the table is unloaded from main memory. On the next access it has to be loaded from disk again. What happens if I use NVM? Will the data remain as a main column fragment in an NVRAM block, or will it be unloaded to disk?

3.3 Data aging aspects

With Data Aging it is possible to keep the important tables in memory and place historical data permanently on disk. This way you can save a lot of main memory and the data can still be changed. It is not archiving! If you access historical data, it will of course be placed in main memory for a certain period of time.

With NVM, the current main fragments would be placed in NVRAM, the delta fragments would remain in main memory (DRAM), and the historical data would be placed on disk. Maybe it would make sense to place the pool for the accessed historical data in NVM (page_loadable_columns heap pool).

Or, as explained in 3.1, place current (= hot) data in DRAM and historical data in NVM.
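The two placement variants discussed in this section can be written down as a small decision function. This is only a sketch of the options described here; `Tier`, `place`, and the `hot_in_dram` switch are hypothetical names, not HANA parameters.

```python
from enum import Enum


class Tier(Enum):
    DRAM = "DRAM"
    NVM = "NVM"
    DISK = "disk"


def place(kind, historical, hot_in_dram=False):
    """Return the storage tier for a fragment.

    kind:        "main" or "delta"
    historical:  True for aged (historical) data
    hot_in_dram: False -> current main in NVM, historical data on disk
                 True  -> current main in DRAM, historical data in NVM
    """
    if kind == "delta":
        return Tier.DRAM  # changes always go to the delta store in DRAM
    if hot_in_dram:
        return Tier.NVM if historical else Tier.DRAM
    return Tier.DISK if historical else Tier.NVM


default_current = place("main", historical=False)
alternative_historical = place("main", historical=True, hot_in_dram=True)
```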

4. Missing aspects

Some important questions and tests weren’t considered or released.

4.1 Delta Merge times

The data from main (NVRAM) and delta (DRAM) has to be merged into the new main store, which is also located in NVRAM. In the old architecture this process ran only in memory and was very fast. How does this affect the system and the runtimes?
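A strongly simplified sketch of such a merge shows why the question matters: every row of the old main has to be read once and every row of the new main has to be written once. The function `delta_merge` and the data layout (sorted dictionary plus index vector, delta rows as plain values) are assumptions for illustration, not HANA's actual algorithm.

```python
def delta_merge(main_dict, main_ids, delta_values):
    """Merge a dictionary-encoded main fragment with the delta rows.

    main_dict:    sorted list of distinct values
    main_ids:     per-row indexes into main_dict
    delta_values: plain values inserted since the last merge
    Returns (new_dict, new_ids) for the new main fragment.
    """
    new_dict = sorted(set(main_dict) | set(delta_values))
    position = {value: i for i, value in enumerate(new_dict)}
    # Every old main row is read once (from NVRAM in the scenario above) ...
    new_ids = [position[main_dict[vid]] for vid in main_ids]
    # ... and every delta row is read once (from DRAM).
    new_ids.extend(position[value] for value in delta_values)
    return new_dict, new_ids


new_dict, new_ids = delta_merge(["DE", "US"], [1, 0, 0], ["FR", "DE"])
```

In this toy example the new dictionary is `["DE", "FR", "US"]`, and the old rows have to be re-encoded because inserting "FR" shifts the index of "US". With the main in NVRAM, both the full read of the old main and the full write of the new main run at NVM speed.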

4.2 Savepoint times

The savepoint (default: every 5 min) writes the changes of all modified rows of all tables to the persistence layer. This data is mostly read only from the delta store. But it can happen that, after a delta merge involving a lot of rows, the runtime of the savepoint increases because of the slower performance of NVM. For example, in BW a big ETL process may run with the delta merge deactivated (normal for some BW processes) and finish shortly before the savepoint starts, so that a final delta merge is executed. Accordingly, all new entries are transferred to the main store and have to be read for the savepoint. All data changed since the last savepoint has to be read from the new main store, which is placed in NVRAM.

A long-running savepoint can lead to a performance issue, because there is a critical phase which holds certain locks. The longer the critical phase, the more the system's performance is affected.

4.3 Other tables / heap pools

Temp tables, Indexes, BW PSA and changelogs, result cache and page cache may be placed in NVM.

4.4 Growth

What happens if an OOM situation occurs? In this case the pointer in main memory may be lost, which leads to an initialization of the NVRAM.

What happens if an OONVM (= out of non-volatile memory) situation occurs? What is the fallback?

5. Summary

NVM can be a game changer, but there is still a long way to go before it is clear how SAP will adapt it for HANA. There are a lot of possibilities, but also some challenges left.

So the amount of data that can be held in memory can be extended at the cost of some performance degradation. If your business can accept this performance, you can save a lot of memory and thereby money. So NVRAM can definitely reduce your TCO.
