In general nearly everyone knows that with new installations based on NW750 there are no cluster tables anymore. When you migrate to HANA one prerequisite is that all cluster and pool tables have to be declustered/depooled.

But is this really correct? In my last projects there were a lot of issues regarding cluster and pool tables. SUM and SWPM have not worked as expected and so some data was not migrated / depooled / declustered automatically in cause of bugs.

So I want to shed some light on this topic to help to understand the usage, procedure and checks.

First of all what are cluster and pool tables in detail?

◈ Cluster tables combine information from several tables logically belonging together. They allow efficient access to a whole application object without incurring joins on database level. This means they can only be logical read by DBSL. A normal native SQL won’t result in correct data by default.

◈ Pool tables combine a large number of individual small tables into one database table, which adressed problems with large numbers of individual database objects. This tables can be read by native SQL by default without any trouble.

Do you know how many cluster/pool tables existing in an ERP EhP8 system? Have a look into table DD06L. In my system I count 162.

Cluster: 53

Pool: 109

At first it is wrong that HANA can’t run with cluster and pool tables. HANA can handle it, but it is not wise to do so for technical reasons.

There are still some cluster / pool tables after the migration by default!

“There are pooled tables that cannot be converted into transparent tables for technical reasons. Keep these tables as pooled tables. This might apply to the following tables:

GLP1, GLP2, GLPPC, GLS1, GLS2, GLSPC, GLT1, GLT2, JVS1, T157T. It might also apply to all pooled tables with the prefix M_.”

Check it out by yourself for your systems (DBACOCKPIT->Diagnostics->SQL Editor or HANA Studio or any other SQL Client):

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT TABNAME FROM DD02L WHERE TABCLASS = 'CLUSTER') ORDER BY record_count DESC;

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT TABNAME FROM DD02L WHERE TABCLASS = 'POOL') ORDER BY record_count DESC;

#All in one:

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT TABNAME FROM DD02L WHERE TABCLASS = 'POOL' OR TABCLASS = 'CLUSTER') ORDER BY record_count DESC;

So which tables should be declustered / depooled after migration and what exactly happens to data and ABAP dictionary?

Ok, let’s start with which tables currently existing in the system with such classification. This can be answered by a DB query on table DD06L which works on anyDB _AND_ HANA:

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT SQLTAB FROM DD06L WHERE SQLCLASS = 'POOL') ORDER BY record_count DESC

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT SQLTAB FROM DD06L WHERE SQLCLASS = 'CLUSTER') ORDER BY record_count DESC

#All in one:

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT SQLTAB FROM DD06L WHERE SQLCLASS = 'CLUSTER' OR SQLCLASS = 'POOL') ORDER BY record_count DESC

Second step after we identified the filled tables is what happens in case of declustering/depooling?

You can select which transparent table belongs to a cluster/pool table by selecting DD02L:

SELECT TABNAME, TABCLASS, SQLTAB FROM DD02L WHERE TABCLASS = 'POOL' OR TABCLASS = 'CLUSTER';

Where TABNAME is the transparent table and SQLTAB is the cluster/pool table.

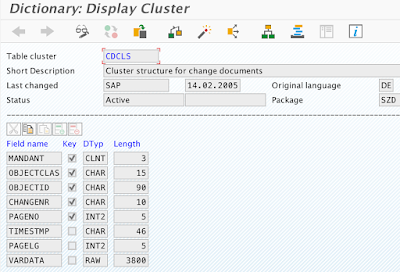

I take an example with a well known cluster called CDCLS. This one is normally one of the biggest in an ERP system.

SE11 layout:

But is this really correct? In my last projects there were a lot of issues regarding cluster and pool tables. SUM and SWPM have not worked as expected and so some data was not migrated / depooled / declustered automatically in cause of bugs.

So I want to shed some light on this topic to help to understand the usage, procedure and checks.

1) Clarification Cluster and Pool tables

First of all what are cluster and pool tables in detail?

◈ Cluster tables combine information from several tables logically belonging together. They allow efficient access to a whole application object without incurring joins on database level. This means they can only be logical read by DBSL. A normal native SQL won’t result in correct data by default.

◈ Pool tables combine a large number of individual small tables into one database table, which adressed problems with large numbers of individual database objects. This tables can be read by native SQL by default without any trouble.

Do you know how many cluster/pool tables existing in an ERP EhP8 system? Have a look into table DD06L. In my system I count 162.

Cluster: 53

Pool: 109

2) Check Pool and Cluster tables in the system

At first it is wrong that HANA can’t run with cluster and pool tables. HANA can handle it, but it is not wise to do so for technical reasons.

There are still some cluster / pool tables after the migration by default!

“There are pooled tables that cannot be converted into transparent tables for technical reasons. Keep these tables as pooled tables. This might apply to the following tables:

GLP1, GLP2, GLPPC, GLS1, GLS2, GLSPC, GLT1, GLT2, JVS1, T157T. It might also apply to all pooled tables with the prefix M_.”

Check it out by yourself for your systems (DBACOCKPIT->Diagnostics->SQL Editor or HANA Studio or any other SQL Client):

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT TABNAME FROM DD02L WHERE TABCLASS = 'CLUSTER') ORDER BY record_count DESC;

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT TABNAME FROM DD02L WHERE TABCLASS = 'POOL') ORDER BY record_count DESC;

#All in one:

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT TABNAME FROM DD02L WHERE TABCLASS = 'POOL' OR TABCLASS = 'CLUSTER') ORDER BY record_count DESC;

So which tables should be declustered / depooled after migration and what exactly happens to data and ABAP dictionary?

Ok, let’s start with which tables currently existing in the system with such classification. This can be answered by a DB query on table DD06L which works on anyDB _AND_ HANA:

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT SQLTAB FROM DD06L WHERE SQLCLASS = 'POOL') ORDER BY record_count DESC

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT SQLTAB FROM DD06L WHERE SQLCLASS = 'CLUSTER') ORDER BY record_count DESC

#All in one:

SELECT * FROM M_TABLES where TABLE_NAME in (SELECT SQLTAB FROM DD06L WHERE SQLCLASS = 'CLUSTER' OR SQLCLASS = 'POOL') ORDER BY record_count DESC

3) Procedure of depooling and declustering using R3load

Second step after we identified the filled tables is what happens in case of declustering/depooling?

You can select which transparent table belongs to a cluster/pool table by selecting DD02L:

SELECT TABNAME, TABCLASS, SQLTAB FROM DD02L WHERE TABCLASS = 'POOL' OR TABCLASS = 'CLUSTER';

Where TABNAME is the transparent table and SQLTAB is the cluster/pool table.

I take an example with a well known cluster called CDCLS. This one is normally one of the biggest in an ERP system.

SE11 layout:

This table will be splitted in two transparent tables called CDPOS and PCDPOS.

For small tables you can use the ABAP approach. For bigger clusters like CDCLS I strongly recommend to use the R3load procedure because of the size and duration. R3load is called with an option decluster=true.

This result in different ways:

◈ for pool tables it is easy to select the dataso no difference to other tables

◈ for cluster table a logical structure mapping will be created.

I won’t go to deep because the most migrations today will happen via SUM and here everything happens automatically, but some words to understand the high level:

#Declustering will happen with this option in the properties file

export_monitor_cmd.properties:

decluster=true

Packages (depending on procedure SWPM/SUM):

◈ SAPCLUST.STR => Cluster table structures

◈ SAPCDCLS.STR.logical => logical structure of CDCLS

tab: PCDPOS

att: SDOCU 2 ?N Tc all PCDPOS~0 SDOCU 2

ref: CDCLS

fld: MANDANT CLNT 3 0 0 not_null 1

fld: OBJECTCLAS CHAR 15 0 0 not_null 2

fld: OBJECTID CHAR 90 0 0 not_null 3

fld: CHANGENR CHAR 10 0 0 not_null 4

fld: TABNAME CHAR 30 0 0 not_null 5

fld: TABKEY CHAR 70 0 0 not_null 6

fld: FNAME CHAR 30 0 0 not_null 7

fld: CHNGIND CHAR 1 0 0 not_null 8

fld: TEXT_CASE CHAR 1 0 0 not_null 0

fld: UNIT_OLD UNIT 3 0 0 not_null 0

fld: UNIT_NEW UNIT 3 0 0 not_null 0

fld: CUKY_OLD CUKY 5 0 0 not_null 0

fld: CUKY_NEW CUKY 5 0 0 not_null 0

fld: VALUE_NEW CHAR 254 0 0 not_null 0

fld: VALUE_OLD CHAR 254 0 0 not_null 0

tab: CDPOS

att: SDOCU 6 ?N Tc all CDPOS~0 SDOCU 6

ref: CDCLS

fld: MANDANT CLNT 3 0 0 not_null 1

fld: OBJECTCLAS CHAR 15 0 0 not_null 2

fld: OBJECTID CHAR 90 0 0 not_null 3

fld: CHANGENR CHAR 10 0 0 not_null 4

fld: TABNAME CHAR 30 0 0 not_null 5

fld: TABKEY CHAR 70 0 0 not_null 6

fld: FNAME CHAR 30 0 0 not_null 7

fld: CHNGIND CHAR 1 0 0 not_null 8

fld: TEXT_CASE CHAR 1 0 0 not_null 0

fld: UNIT_OLD UNIT 3 0 0 not_null 0

fld: UNIT_NEW UNIT 3 0 0 not_null 0

fld: CUKY_OLD CUKY 5 0 0 not_null 0

fld: CUKY_NEW CUKY 5 0 0 not_null 0

fld: VALUE_NEW CHAR 254 0 0 not_null 0

fld: VALUE_OLD CHAR 254 0 0 not_null 0

fld: _DATAAGING DATS 8 0 0 not_null 0

4) Splitting behaviour

Be careful if you have splitted your cluster tables with SWPM and R3ta. This will result in a behaviour which differs from transparent tables:

CDCLS-1*

SAPCDCLS-1*

SAPCDCLS.STR.logical

The SAPCDCLS* files will be the correct files for searching for errors. These are needed and automatically created by the procedure for the logical mapping. Don’t get irretated by import/export logs as well as on things like the migration time stats from migtime.

5) ABAP dictionary adaption and checks

Ok, now we know the declustering and depooling happens during export. But as you know also the ABAP dictionary has to be adjusted in other case the tables are not known and can’t be used by the ABAP stack.

For this the following reports exist:

◈ RUTCSADAPT => adjust cluster dictionary structures

◈ RUTPOADAPT => adjust pool dictionary structures

The reports will be called automatically by SWPM (if you check declustering/depooling in dialog phase) and SUM. They will just adjust the dictionary, they won’t migrate or decluster data!

This can only happen if the cluster tables are empty which should be the result of the declustering/depooling, because all data imported into the transparent tables.

To check if all data were transfered successfully execute report SHDB_MIGRATION_CHECK (note 1785060). This report should always be part of your cutover procedure as postprocessing task.

So if this report finished without errors AND warnings you should be fine, shouldn’t you? Seems to be wrong because the report won’t check all cluster / pool tables. Some of them are excluded for reason, some are just not checked. For example pool tables like KAPOL, KBPOL, UTAB. There is no official documentation for there existence as pool table on HANA.

They are getting depooled but not checked by the report. May be SAP will adjust documentation and the check report in the future.

6) HANA checks

There is another check on HANA with SQL statement from the SQL collection attached to note 1969700.

You can use the Minichecks (HANA_Configuration_MiniChecks*) or HANA_Tables_SpecialTables.

The statements will check if there are still any records in tables ‘CDCLS’, ‘EDI40’, ‘KAPOL’, ‘KOCLU’ and ‘RFBLG’.

Other once like KBPOL or UTAB won’t be checked in the current version.

I currently have several customers with different pool tables which were correctly splitted and filled into transparent tables, but some entries existing in the new tables AND in the old original pool table. The dictionary structures are correct the transparent table are in use and there were no migration errors. OSS messages are still in processing why this happened… I assume that the procedure is buggy or there are technical reasons which are not offically documented.

7) Technical takeaways

◈ difference between cluster and pool tables

◈ design cluster and pool tables on HANA

◈ depooling and declustering procedure

◈ ABAP adaption with reports RUTPOADAPT and RUTCSADAPT

◈ check report SHDB_MIGRATION_CHECK

◈ HANA statements for checking cluster/pool tables: HANA_Configuration_MiniChecks* and HANA_Tables_SpecialTables

No comments:

Post a Comment