In section 34 Changed Data Capture of the SAP Data Services Designer Guide you can find a very good description of the various delta load capabilities in SAP Data Services. Isn’t that worth an attentive read?

Delta loads are commonly used to reduce overall ETL processing time. When the number of new and modified source records is relatively low compared to the full data size, overall performance will increase significantly. Not only the time spent in extraction will be much lower, also the transformation and load steps will run much faster because those operations will apply to a (minor) subset of data only.

I would say that, in general, you may consider following options to implement an incremental extraction:

◈ Use last_modification timestamps from the source tables. Create a control table for keeping track of the timestamp(s) used in the previous incremental extraction. Have your jobs call an initialisation script to assign the timestamps to global variables. Use the variables in the where-clause of the Query transform in your extraction dataflows.

◈ If there’s no timestamp available, but the records are numbered sequentially, you can apply a similar approach using the sequence numbers.

◈ Use log tables. Many systems do not include timestamps in the actual tables modified. They use so-called log tables to keep track of changes. Join source and log tables and proceed as above.

◈ Use the built-in CDC-mechanisms of the source database.

◈ Use specific CDC-enabling software, like SLT.

◈ With SAP as a source, you can leverage delta-enabled Business Content Extractors.

Unfortunately, when none of those options are available, you probably will have to do a full extraction of your source data, not such a great idea when dealing with big data. Note that, in such a scenario, you still have the possibility to reduce transformation and load times by calculating the delta yourself. You achieve this by keeping a copy of the data extracted in the previous job run and running it thru a Table_Comparison transform to calculate the delta. Then you can apply the transformations to the delta only. And write the new and modified records only to the target.

This blog describes a solution around the two potential bottlenecks in that approach:

◈ Full extraction times are related to the size of the source data, the number of records time the record size. The larger the latter (multiple and wide columns), the more time it will take to extract the data.

◈ The time spent in the Table_comparison transform (make sure to always sort the input data and set the comparison method in the transform to Sorted input!) may negate the profit obtained from transforming and loading a reduced data set. The more columns, the longer the comparison process will run.

Both problems can be overcome by using hash functionality. The example below is for SAP HANA but can be applied to any database that has a built-in hash function or is able to call an external one.

Create two tables in HANA, first:

◈ CHECKSUM_MINUS1, to contain checksums from the previous job run. The table has 2 columns, one for the primary key and the other for the hash value of the record.

◈ CHECKSUM, to contain the same data from the current job run.

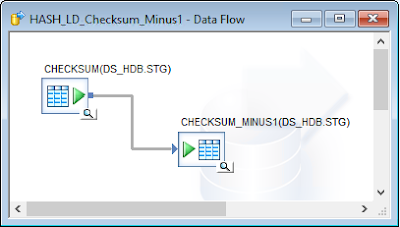

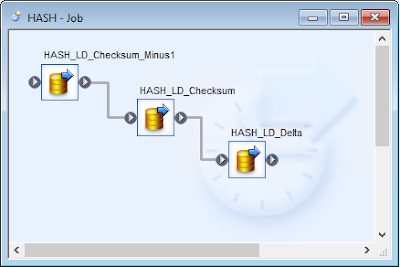

Create a 1st dataflow to copy the checksums from the previous run to CHECKSUM_MINUS1:

Delta loads are commonly used to reduce overall ETL processing time. When the number of new and modified source records is relatively low compared to the full data size, overall performance will increase significantly. Not only the time spent in extraction will be much lower, also the transformation and load steps will run much faster because those operations will apply to a (minor) subset of data only.

I would say that, in general, you may consider following options to implement an incremental extraction:

◈ Use last_modification timestamps from the source tables. Create a control table for keeping track of the timestamp(s) used in the previous incremental extraction. Have your jobs call an initialisation script to assign the timestamps to global variables. Use the variables in the where-clause of the Query transform in your extraction dataflows.

◈ If there’s no timestamp available, but the records are numbered sequentially, you can apply a similar approach using the sequence numbers.

◈ Use log tables. Many systems do not include timestamps in the actual tables modified. They use so-called log tables to keep track of changes. Join source and log tables and proceed as above.

◈ Use the built-in CDC-mechanisms of the source database.

◈ Use specific CDC-enabling software, like SLT.

◈ With SAP as a source, you can leverage delta-enabled Business Content Extractors.

Unfortunately, when none of those options are available, you probably will have to do a full extraction of your source data, not such a great idea when dealing with big data. Note that, in such a scenario, you still have the possibility to reduce transformation and load times by calculating the delta yourself. You achieve this by keeping a copy of the data extracted in the previous job run and running it thru a Table_Comparison transform to calculate the delta. Then you can apply the transformations to the delta only. And write the new and modified records only to the target.

This blog describes a solution around the two potential bottlenecks in that approach:

◈ Full extraction times are related to the size of the source data, the number of records time the record size. The larger the latter (multiple and wide columns), the more time it will take to extract the data.

◈ The time spent in the Table_comparison transform (make sure to always sort the input data and set the comparison method in the transform to Sorted input!) may negate the profit obtained from transforming and loading a reduced data set. The more columns, the longer the comparison process will run.

Both problems can be overcome by using hash functionality. The example below is for SAP HANA but can be applied to any database that has a built-in hash function or is able to call an external one.

Create two tables in HANA, first:

◈ CHECKSUM_MINUS1, to contain checksums from the previous job run. The table has 2 columns, one for the primary key and the other for the hash value of the record.

◈ CHECKSUM, to contain the same data from the current job run.

Create a 1st dataflow to copy the checksums from the previous run to CHECKSUM_MINUS1:

As both tables are in the same database, full logic is pushed to HANA and execution terminated almost instantly.

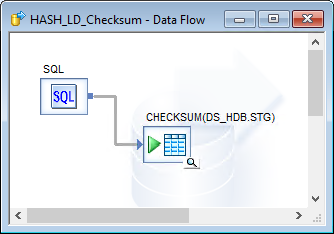

Create a 2nd dataflow to calculate the checksums. Use a SQL transform as source:

Open the SQL – Transform Editor. Enter the SQL text as:

SELECT PKCOL, hash_sha256(

to_binary(PKCOL)

, to_binary(ifnull(INT1,0))

, to_binary(ifnull(INT2,0))

, to_binary(ifnull(DATE1,''))

, to_binary(ifnull(INT3,0))

, to_binary(ifnull(INT4,0))

, to_binary(ifnull(INT5,''))

, to_binary(ifnull(TXT01,''))

, to_binary(ifnull(TXT02,''))

, to_binary(ifnull(INT6,0))

, to_binary(ifnull(TXT03,''))

, to_binary(ifnull(TXT04,''))

, to_binary(ifnull(INT7,0))

, to_binary(ifnull(DEC1,0))

, to_binary(ifnull(TXT05,''))

, to_binary(ifnull(DEC2,0))

, to_binary(ifnull(TXT06,''))

, to_binary(ifnull(DEC3,0))

, to_binary(ifnull(TXT07,''))

, to_binary(ifnull(INT8,0))

, to_binary(ifnull(TXT08,''))

, to_binary(ifnull(TXT09,''))

, to_binary(ifnull(INT9,0.0))

, to_binary(ifnull(DEC4,0.0))

, to_binary(ifnull(DEC5,0))

, to_binary(ifnull(TXT10,''))

, to_binary(ifnull(TXT11,''))

, to_binary(ifnull(DEC6,0))

, to_binary(ifnull(TXT12,''))

, to_binary(ifnull(TXT13,''))

, to_binary(ifnull(DATE2,''))

) as hash_value

FROM MY_SOURCE_TABLE

Column PKCOL is the primary key column of MY_SOURCE_TABLE that has several additional numeric, date and varchar columns.

Then select the Update schema button. And close the Editor window.

The SQL transform prevents full SQL-pushdown to HANA, so all data is passing thru SAP Data Services memory. Because the data size is relatively small (2 columns only) compared to the full source table size, performance gains may be high.

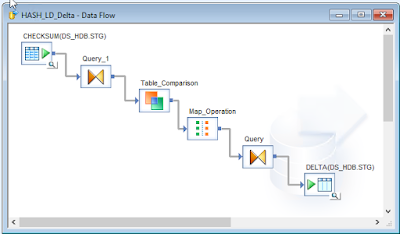

Create a 3rd dataflow to calculate the delta:

The Table_Comparison compares the input data to CHECKSUM_MINUS1 (current to previous data set).

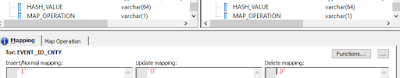

The Map_Operation maps all Input row types to Normal. And registers the type of operation in the additional column.

Because there’s one comparison column only, the operation will run much quicker, too.

When you run the 3 dataflows in sequence, the DELTA table will contain the primary key column of all new, updated and deleted records, with the type of operation.

You can now join DELTA and MY_SOURCE_TABLE to limit the size of the input data set. Apply the necessary transformations to that subset. And use a Map_CDC_Operation to eventually apply the results to the target.

No comments:

Post a Comment