While I no longer work within SAP HANA Product Management, in my new role in SAP Cloud Business Group I am still developing on HANA and assisting teams that are doing so as well. Therefore I wanted to some research on “skinny” local development options for SAP HANA. The goal was to use HANA Express with as small a resource footprint as possible. This meant starting with the server only edition of HANA Express which does not include XSA nor Web IDE for SAP HANA. Yet I still wanted to be able to create database artifacts via HANA Deployment Infrastructure (HDI) design time approach. So that’s the challenge of this blog entry – “how low can I go”?

So first I needed to gather some tools which I would install onto my development laptop.

◈ HANA Express – Server Only

Actually you don’t need a local install. I could also connect to a HANA Express or any HANA instance and deploy artifacts remotely. But for my purposes, I wanted to see how small I could get a HANA Express installation locally.

◈ HANA Client

I want to install the HANA Client on my local laptop so I can use hdbsql to issue SQL commands directly from my development environment. (You might also want to add the HANA client to your PATH to make calling it from the command line easier).

◈ Node.js

As we will see the HDI deployer is just a Node.js application. It doesn’t require XSA to run. We will run the deployer locally from our laptop directly but to do so we need Node.js installed locally as well. This will also allow us to run, test, debug Node.js applications completely local without the need for XSA as well.

◈ Microsoft VS Code (Optional)

We need an editor. Of course you use Notepad or really any text editor. However we probably want something a little nicer. I chose VS Code because it has great support for Node.js development/debugging, some basic SQL syntax support, and we can even run a command prompt (and therefore hdbsql) from within the tool.

◈ SQuirreL SQL Client (Optional)

Without XSA, we won’t have the SAP Web IDE for SAP HANA, HANA Cockpit, nor Database Explorer locally. We could fall back to HANA Studio, but I hate the idea of depending upon a deprecated tool. Therefore when I really want some graphical data exploration tools, I like this super lightweight open source solution that works well with HANA.

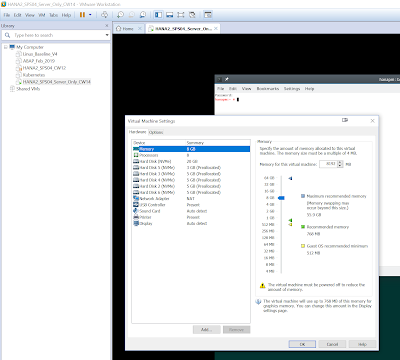

While many people choose to start with the pre-built HANA Express VM or cloud images; I choose to begin with the binary installer into my own VM of Linux. This way I’m able to tune the installation scripts even further. I start with the Database Server only version of HXE (which is already sized for around 8Gb machines). I know that I don’t need XS Classic either. Therefore I go into the configuration files (/configurations/custom).

I edit the daemon.ini file to set the webdispatcher instances to 0. If I’m going to completely disable XS Classic, then I also don’t need a Web Dispatcher. Then I edit nameserver.ini and set embedded in the httpserver section to false. HXE will try to run XSEngine (XS Classic) in embedded mode and not as a standalone process. But this configuration turns off embedded mode as well. Therefore it completely disables the XSEngine. The same entry needs to be made in the xsengine.ini file as well – set embedded to false.

I then go forward with the rest of the installation. Without XSA I don’t need nearly as much disk space for the VM nor do I need as much memory. I set my VM to only 8Gb, but I actually could have squeezed it down even further.

Prerequisites

So first I needed to gather some tools which I would install onto my development laptop.

◈ HANA Express – Server Only

Actually you don’t need a local install. I could also connect to a HANA Express or any HANA instance and deploy artifacts remotely. But for my purposes, I wanted to see how small I could get a HANA Express installation locally.

◈ HANA Client

I want to install the HANA Client on my local laptop so I can use hdbsql to issue SQL commands directly from my development environment. (You might also want to add the HANA client to your PATH to make calling it from the command line easier).

◈ Node.js

As we will see the HDI deployer is just a Node.js application. It doesn’t require XSA to run. We will run the deployer locally from our laptop directly but to do so we need Node.js installed locally as well. This will also allow us to run, test, debug Node.js applications completely local without the need for XSA as well.

◈ Microsoft VS Code (Optional)

We need an editor. Of course you use Notepad or really any text editor. However we probably want something a little nicer. I chose VS Code because it has great support for Node.js development/debugging, some basic SQL syntax support, and we can even run a command prompt (and therefore hdbsql) from within the tool.

◈ SQuirreL SQL Client (Optional)

Without XSA, we won’t have the SAP Web IDE for SAP HANA, HANA Cockpit, nor Database Explorer locally. We could fall back to HANA Studio, but I hate the idea of depending upon a deprecated tool. Therefore when I really want some graphical data exploration tools, I like this super lightweight open source solution that works well with HANA.

HANA Express

While many people choose to start with the pre-built HANA Express VM or cloud images; I choose to begin with the binary installer into my own VM of Linux. This way I’m able to tune the installation scripts even further. I start with the Database Server only version of HXE (which is already sized for around 8Gb machines). I know that I don’t need XS Classic either. Therefore I go into the configuration files (/configurations/custom).

I edit the daemon.ini file to set the webdispatcher instances to 0. If I’m going to completely disable XS Classic, then I also don’t need a Web Dispatcher. Then I edit nameserver.ini and set embedded in the httpserver section to false. HXE will try to run XSEngine (XS Classic) in embedded mode and not as a standalone process. But this configuration turns off embedded mode as well. Therefore it completely disables the XSEngine. The same entry needs to be made in the xsengine.ini file as well – set embedded to false.

I then go forward with the rest of the installation. Without XSA I don’t need nearly as much disk space for the VM nor do I need as much memory. I set my VM to only 8Gb, but I actually could have squeezed it down even further.

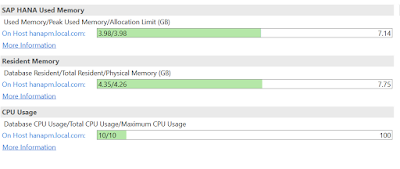

So this all results in a nice and skinny HANA installation that runs well within the 8Gb of memory. Perfect for doing development if your laptop doesn’t have much memory to spare.

HDI Bootstrapping

Now comes the slightly tricky part. You want to do HDI development, but your HANA system doesn’t have XSA. Many people have the impression that HDI and XSA are totally dependent upon each other. This is a believe largely supported by the fact that XSA and HDI were introduced at the same time, that those of us at SAP almost always talk about them interchangeably, and that there are some technical intertwining of the two technologies. However we can operate HDI completely without XSA or the Web IDE as we are about to see.

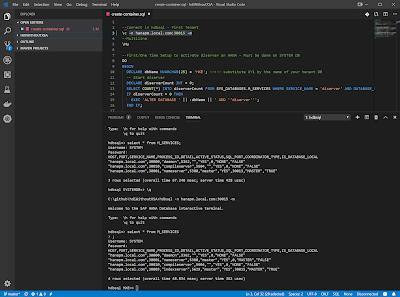

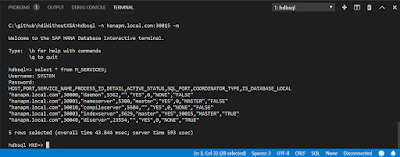

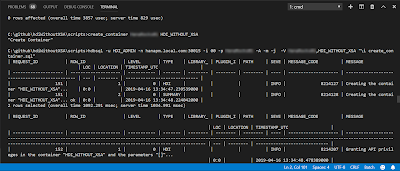

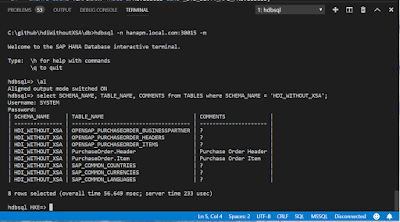

The first problem we face is that the HDI has a diserver process that isn’t even running in a HANA system normally. It is only started if you run the XSA installer. We can confirm this in our system by opening a terminal in Visual Studio Code and running the hdbsql command. From there we can write SQL queries just like we would from any SQL Console. For instance a select * from M_SERVICES will show us the running services on HANA (and there’s no diserver).

Now luckily HDI isn’t locked completely into XSA nor even the Node.js deployer interfaces. There are also SQL APIs for just about anything you’d want to do with HDI. This is what we will leverage to interact with HDI from HDBSQL directly within VS Code.

So we will being with a little bootstrap script that only needs to be ran once on a new system installation.

https://github.com/jungsap/hdiWithoutXSA/blob/master/scripts/bootstrap.sql

In this script we will add the diserver process. Then we will create an HDI_ADMIN user. You would run this one-time script as the SYSTEM user, but then afterwards you can do everything via this HDI_ADMIN user. We will make both SYSTEM and HDI_ADMIN HDI Group admins for the default group of _SYS_DI.

--First/One Time Setup to activate diserver on HANA

DO

BEGIN

DECLARE dbName NVARCHAR(25) = 'HXE'; --<-- substitute XY1 by the name of your tenant DB

-- Start diserver

DECLARE diserverCount INT = 0;

SELECT COUNT(*) INTO diserverCount FROM SYS_DATABASES.M_SERVICES WHERE SERVICE_NAME = 'diserver' AND DATABASE_NAME = :dbName AND ACTIVE_STATUS = 'YES';

IF diserverCount = 0 THEN

EXEC 'ALTER DATABASE ' || :dbName || ' ADD ''diserver''';

END IF;

END;

--One Time Setup - Create HDI_ADMIN User and make SYSTEM and HDI_ADMIN HDI Admins

CREATE USER HDI_ADMIN PASSWORD "&1" NO FORCE_FIRST_PASSWORD_CHANGE;

GRANT USER ADMIN to HDI_ADMIN;

CREATE LOCAL TEMPORARY TABLE #PRIVILEGES LIKE _SYS_DI.TT_API_PRIVILEGES;

INSERT INTO #PRIVILEGES (PRINCIPAL_NAME, PRIVILEGE_NAME, OBJECT_NAME) SELECT 'SYSTEM', PRIVILEGE_NAME, OBJECT_NAME FROM _SYS_DI.T_DEFAULT_DI_ADMIN_PRIVILEGES;

INSERT INTO #PRIVILEGES (PRINCIPAL_NAME, PRIVILEGE_NAME, OBJECT_NAME) SELECT 'HDI_ADMIN', PRIVILEGE_NAME, OBJECT_NAME FROM _SYS_DI.T_DEFAULT_DI_ADMIN_PRIVILEGES;

CALL _SYS_DI.GRANT_CONTAINER_GROUP_API_PRIVILEGES('_SYS_DI', #PRIVILEGES, _SYS_DI.T_NO_PARAMETERS, ?, ?, ?);

DROP TABLE #PRIVILEGES;

If successful, you can run the M_SERVICES query again and you will see you now have the diserver process.

Creating an HDI Container

When working from XSA you just create an instance of the HDI service broker and it does all the work to create the HDI container, users, etc. It also stores all of this information within the service broker and your application only needs to bind to the service broker to access it.

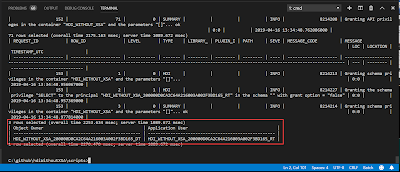

In this scenario, we are going to have to do all these things that the service broker would have done for us via the HDI SQL APIs. To make this step easier, I’ve created a reusable script that will take two input parameters – a Password for the generated users and the name of the container. It then does all the work to create the container, create the users (a object owner and application user just like when working from XSA) and setup the default libraries for the container.

--Create Container

CALL _SYS_DI.CREATE_CONTAINER('&2', _SYS_DI.T_NO_PARAMETERS, ?, ?, ?);

DO

BEGIN

DECLARE userName NVARCHAR(100);

DECLARE userDT NVARCHAR(100);

DECLARE userRT NVARCHAR(100);

declare return_code int;

declare request_id bigint;

declare MESSAGES _SYS_DI.TT_MESSAGES;

declare PRIVILEGES _SYS_DI.TT_API_PRIVILEGES;

declare SCHEMA_PRIV _SYS_DI.TT_SCHEMA_PRIVILEGES;

no_params = SELECT * FROM _SYS_DI.T_NO_PARAMETERS;

SELECT SYSUUID INTO userName FROM DUMMY;

SELECT '&2' || '_' || :userName || '_DT' into userDT FROM DUMMY;

SELECT '&2' || '_' || :userName || '_RT' into userRT FROM DUMMY;

EXEC 'CREATE USER ' || :userDT || ' PASSWORD "&1" NO FORCE_FIRST_PASSWORD_CHANGE';

EXEC 'CREATE USER ' || :userRT || ' PASSWORD "&1" NO FORCE_FIRST_PASSWORD_CHANGE';

COMMIT;

--Grant Container Admin to Development User(s)

PRIVILEGES = SELECT PRIVILEGE_NAME, OBJECT_NAME, PRINCIPAL_SCHEMA_NAME, (SELECT :userDT FROM DUMMY) AS PRINCIPAL_NAME FROM _SYS_DI.T_DEFAULT_CONTAINER_ADMIN_PRIVILEGES;

CALL _SYS_DI.GRANT_CONTAINER_API_PRIVILEGES('&2', :PRIVILEGES, :no_params, :return_code, :request_id, :MESSAGES);

select * from :MESSAGES;

--Grant Container User to Development User(s)

SCHEMA_PRIV = SELECT 'SELECT' AS PRIVILEGE_NAME, '' AS PRINCIPAL_SCHEMA_NAME, :userRT AS PRINCIPAL_NAME FROM DUMMY;

CALL _SYS_DI.GRANT_CONTAINER_SCHEMA_PRIVILEGES('&2', :SCHEMA_PRIV, :no_params, :return_code, :request_id, :MESSAGES);

select * from :MESSAGES;

--Configure Default Libraries for Container

default = SELECT * FROM _SYS_DI.T_DEFAULT_LIBRARIES;

CALL _SYS_DI.CONFIGURE_LIBRARIES('&2', :default, :no_params, :return_code, :request_id, :MESSAGES);

SELECT :userDT as "Object Owner", :userRT as "Application User" from DUMMY;

END;

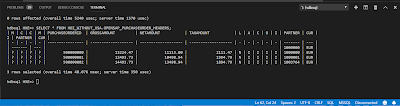

I will run the script and you should see that output are the names of the two generated users. This the a not so nice part of this approach. You are having to manage these users and passwords directly. This is probably OK for local, private development like this; but nothing you’d want to do in a real, productive environment.

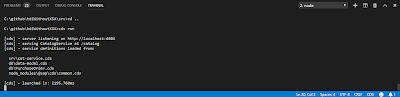

Running the HDI Deployer from local Node.js

The HDI Deployer is really just a Node.js application that doesn’t have any strict requirements upon XSA. We can run it from the local Node.js runtime on our laptop via the command terminal of VS Code as well. The only tricky part is getting the connection information and credentials to the deployer.

Within XSA or Cloud Foundry, the deployer would get this information from the server broker as described above. But all the service broker does place this information in the VCAP_SERVICES environment variable of your application. Here is what this environment variable looks like:

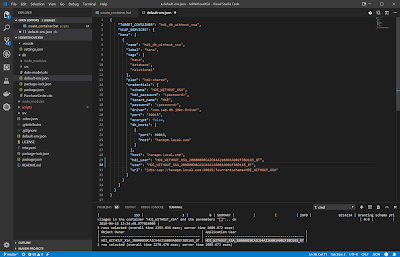

But the majority of SAP Node.js modules (including the HDI deployer) have a fallback option for local development. You can simulate this environment binding by simply creating a file named default-env.json. From here we can copy in a VCAP_SERVICES section and change the connection parameters and insert the HDI users which were generated in the previous step.

The only warning I have about this default-env.json is that you probably want to be careful not to commit it to Git or transport it downstream since it contains specific user names and passwords. Normally I would filter this file out with a .gitignore rule. However I kept it in this sample repository as a reusable template.

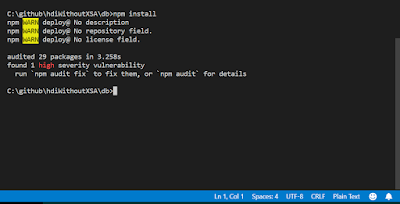

One difference to the Web IDE is that it will automatically run NPM install to pull down any dependent modules. We must do that manually in this environment. Simply run the npm install from the db folder and NPM will read your package.json and install the prerequisites just like from the Web IDE.

After configuring the default-env.json you can now run the Node.js start script for the HDI Deployer and it will deploy your design time artifacts into the target container just like the Build command from the Web IDE.

Your development artifacts are deployed into your container. You could of course perform HDBSQL queries to confirm this:

But this is also where I like to use SQuirreL to browse my container and contents.

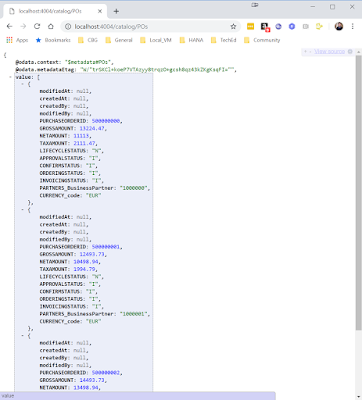

Running Node.js Applications

You are actually not limited to just doing HDI development with this approach. My sample application also has Cloud Application Programming Model CDS artifacts and a Node.js OData V4 service in it. I can run the CDS compile/build commands from the command line as well and even run my Node.js application locally. It will use the same trick to read the connection parameters and credentials from the default-env.json so it runs fine without the XSA/CF service bindings.

Although the service is running locally from my laptop, its making a real connection to the remote HANA DB and retrieving actual data. So I’m development Node.js and potentially debugging all locally without any XSA, but still accessing live data from the HANA DB.

Deleting a Container

Maybe you have messed something up and want to start fresh with your container. Or maybe you want to clean up your local system from unused containers. There is a separate script that calls the HDI APIs to help with dropping a container as well.

CREATE LOCAL TEMPORARY COLUMN TABLE #PARAMETERS LIKE _SYS_DI.TT_PARAMETERS;

INSERT INTO #PARAMETERS ( KEY, VALUE ) VALUES ( 'IGNORE_WORK', true );

INSERT INTO #PARAMETERS ( KEY, VALUE ) VALUES ( 'IGNORE_DEPLOYED', true );

CALL _SYS_DI.DROP_CONTAINER('&2', #PARAMETERS, ?, ?, ?);

DROP TABLE #PARAMETERS;

Closing

So in summary you certain can build a very small HANA Express system and do quite complex HDI and Node.js development all in a local environment with 8Gb of memory. There is some manual setup and work arounds, but it gives you quite a bit of flexibility to work especially if your system is memory constrained. Here are a few pros and cons with this approach.

Cons

◈ Graphical Calculation Views. If you need to do Calculation Views you probably still want the Web IDE. Although the XML format of the Calculation View file could be created and edited manually and it does build and deploy from the local HDI approach, I can’t imagine it would be very comfortable to work with the complex XML by hand. In my work I tend to focus on CDS and SQLDDL artifacts which work perfectly fine with a local editor only approach like this.

◈ No Admin/Database Explorer. As seen you can fill in the gaps here with HDBSQL and/or open source SQL tools. And we are just talking about a local, private developer instances. For a real productive system you’d still want the HANA Cockpit for full admin and monitoring.

◈ Transport: There is no MTA deployment in this approach. Its all manual deployment and you have to remember run things like npm install. And we have to manage the users and passwords locally. However for private, local development this isn’t so bad. You can then commit your code to Git and run a CI chain from there (using the MTA Builder) and deploy to a full HANA system with XSA or Cloud Foundry. The concepts we are using here don’t break any of that. When you deploy the same content “for real” the service brokers will still do their job of creating the users and passwords and doing the binding for you.

Pros

◈ Small memory footprint. Everything I did here could run on a laptop with 8Gb or a very small cloud instance.

◈ Quick build, run and debug. No waiting for XSA/CF deploys. You run almost immediately in your local Node.js environment.

◈ Local IDE. No browser based IDE. Quick response. Split screen editors, SQL syntax plug-ins. There’s a lot to be said for reusing an existing IDE that has so much community support.

No comments:

Post a Comment