Predictive analytics encompasses a variety of statistical techniques from data mining, predictive modelling, and machine learning, that analyze current and historical facts to make predictions about future or otherwise unknown events.

Predictive analytics is an area of statistics that deals with extracting information from data and using it to predict trends and behavior patterns.

Customer churn refers to when a customer (player, subscriber, user, etc.) ceases his or her relationship with a company. Online businesses typically treat a customer as churned once a particular amount of time has elapsed since the customer’s last interaction with the site or service. The full cost of customer churn includes both lost revenue and the marketing costs involved with replacing those customers with new ones. Reducing customer churn is a key business goal of every online business.

The ability to predict that a particular customer is at a high risk of churning, while there is still time to do something about it, represents a huge additional potential revenue source for every business.

This Customer Churn Analysis tutorial will teach you how to perform customer churn analysis for online shop.

Data set for the tutorial is available here.

Following tutorial is done on this environment :

BW 7.5 SP07 (BPC 10.1 is built-in) , HANA 1.0 SP12 (rev 122)

Before getting started make sure that you have the following roles.

1. To execute AFL functions you need to have following roles:

AFL__SYS_AFL_AFLPAL_EXECUTE

AFL__SYS_AFL_AFLPAL_EXECUTE_WITH_GRANT_OPTION

2. To generate or drop PAL procedures:

AFLPM_CREATOR_ERASER_EXECUTE

3. The PAL procedure can be created under any schema once you have the CREATE ANY privileges of it.

Let the USER of database for this example be PALUSER.

Based on this data we want to predict which customers are most likely to churn. To find patterns in historic data and to train this model the following data set is used:

Validation Data set without Contract activity is used for validation and the result is matched against the actual values in the other copy.

1. CREATEDT: It stands for Create decision tree and allow to export in JSON and PMML models, later on we can predict actual data based on this model, and PMML formats can be utilized in R Language.

2. PREDICTWITHDT

This function use the trained JSON model created by the CREATEDT function and the actual data to predict in which class are the new records belongs and their probability of falling in that class.

◈ Open SAP HANA modeler perspective in HANA Studio.

1. Browse the downloaded file.

◈Manage Table Definition and Mapping as follows:

Predictive analytics is an area of statistics that deals with extracting information from data and using it to predict trends and behavior patterns.

Customer churn refers to when a customer (player, subscriber, user, etc.) ceases his or her relationship with a company. Online businesses typically treat a customer as churned once a particular amount of time has elapsed since the customer’s last interaction with the site or service. The full cost of customer churn includes both lost revenue and the marketing costs involved with replacing those customers with new ones. Reducing customer churn is a key business goal of every online business.

The ability to predict that a particular customer is at a high risk of churning, while there is still time to do something about it, represents a huge additional potential revenue source for every business.

This Customer Churn Analysis tutorial will teach you how to perform customer churn analysis for online shop.

Data set for the tutorial is available here.

Following tutorial is done on this environment :

BW 7.5 SP07 (BPC 10.1 is built-in) , HANA 1.0 SP12 (rev 122)

Before getting started make sure that you have the following roles.

Security

1. To execute AFL functions you need to have following roles:

AFL__SYS_AFL_AFLPAL_EXECUTE

AFL__SYS_AFL_AFLPAL_EXECUTE_WITH_GRANT_OPTION

2. To generate or drop PAL procedures:

AFLPM_CREATOR_ERASER_EXECUTE

3. The PAL procedure can be created under any schema once you have the CREATE ANY privileges of it.

Let the USER of database for this example be PALUSER.

About Dataset and Predictive Model Used

Based on this data we want to predict which customers are most likely to churn. To find patterns in historic data and to train this model the following data set is used:

| Customer ID | Unique ID for each customer |

| Usage Category (Month) | Number of time the customer used the online shopping portal in the current month |

| Average Usage (Year) | Average Number of time the customer used the online shopping portal in the past year |

| Usage Category (previous Month) | Number of time the customer used the online shopping portal in the previous month |

| Service Type | Flag whether customer has premium or standard service |

| Product Category | Product category the customers’ orders most frequently in this case only pharma or beauty |

| Message Allowance | Flag whether the customers want to receive messages |

| Average Marketing Activity (Bi-yearly) | Average number of marketing activities for customer in the past two years |

| Average Visit Time (min) | Average time the customer spent on the online shopping portal at each visit |

| Pages per Visit | Average number of pages the customer visits on the online shopping portal at each visit |

| Delta Revenue (Previous Month) | Difference from the revenue by this customer in the current month to the revenues in this month |

| Revenue (Current Month) | Revenue by this customer in the current month |

| Service Failure Rate (%) | Percentage of times the customer used the online shopping portal and certain services failed |

| Customer Lifetime (days) | Number of days since the customer registered |

| Product Abandonment | Number of products the customer has put in shopping cart and the abandoned in the last quarter |

| Contract Activity | Flag whether customer has churned or is active. |

The downloaded dataset was divided into two unequal parts:

1. Training Data set: randomly selected 1000-1200 records.

2. Validation Data set: Two copies of this part is maintained one without Contract activity and one with it.

Validation Data set without Contract activity is used for validation and the result is matched against the actual values in the other copy.

PAL Functions Used:

1. CREATEDT: It stands for Create decision tree and allow to export in JSON and PMML models, later on we can predict actual data based on this model, and PMML formats can be utilized in R Language.

2. PREDICTWITHDT

This function use the trained JSON model created by the CREATEDT function and the actual data to predict in which class are the new records belongs and their probability of falling in that class.

Now that we have downloaded the dataset and know the predictive models to be used in the exercise; let’s get started with the Hands on.

Training Phase

First we will create a decision tree and train it with the training data set.

STEP 1: Import Training and Validation Data set in HANA

◈ Open SAP HANA modeler perspective in HANA Studio.

◈ Click on import in the Quick view.

◈ Select “Data From Local File” >> Select Target System >> Define import properties as below.

1. Browse the downloaded file.

2. Select Worksheet “Training”.

3. Check “Header Row Exist” and equal to 1.

4. Check “import all data” with “Start Line” equal to 2.

5. Select your schema and set table name as ChurnTrainData.

6. Click Next.

7. If you are not able to import correctly save the sheet as single csv file and then impot it.

◈Manage Table Definition and Mapping as follows:

| “CustomerID” | INT, (CHECK KEY) |

| “UsageCategoryMonth” | NVARCHAR(6), |

| “AverageUsageYear” | NVARCHAR(6), |

| “UsageCategorypreviousMonth” | NVARCHAR(6), |

| “ServiceType” | NVARCHAR(21), |

| “ProductCategory” | NVARCHAR(6), |

| “MessageAllowance” | NVARCHAR(3), |

| “AverageMarketingActivityBiyearly” | DOUBLE, |

| “AverageVisitTimemin” | DOUBLE, |

| “PagesperVisit” | DOUBLE, |

| “DeltaRevenuePreviousMonth” | DOUBLE, |

| “RevenueCurrentMonth” | DOUBLE, |

| “ServiceFailureRate” | DOUBLE, |

| “CustomerLifetimedays” | DOUBLE, |

| “ProductAbandonment” | DOUBLE, |

| “ContractActivity” | NVARCHAR(7) |

Here first column is a primary key and it can be only Integer or Bigint.

And PAL function do not support Decimal so we need to change all decimals to Double while importing.

◈ Select Store Type = Column store.

◈ Click Next; and the file will be imported.

◈ Similarly import Validation dataset sheet with table name “ChurnValidData”.

STEP 2: Creating Types for PAL Procedure

1. Creating type for our training data.

DROP TYPE PAL_CHURN_CDT_DATA_T;

CREATE TYPE PAL_CHURN_CDT_DATA_T AS TABLE (

"CustomerID" INT,

"UsageCategoryMonth" NVARCHAR(6),

"AverageUsageYear" NVARCHAR(6),

"UsageCategorypreviousMonth" NVARCHAR(6),

"ServiceType" NVARCHAR(21),

"ProductCategory" NVARCHAR(6),

"MessageAllowance" NVARCHAR(3),

"AverageMarketingActivityBiyearly" DOUBLE,

"AverageVisitTimemin" DOUBLE,

"PagesperVisit" DOUBLE,

"DeltaRevenuePreviousMonth" DOUBLE,

"RevenueCurrentMonth" DOUBLE,

"ServiceFailureRate" DOUBLE,

"CustomerLifetimedays" DOUBLE,

"ProductAbandonment" DOUBLE,

"ContractActivity" NVARCHAR(7)

);

2. Creating type for resulting JSON and PMML model.

DROP TYPE PAL_CHURN_CDT_JSONMODEL_T;

CREATE TYPE PAL_CHURN_CDT_JSONMODEL_T AS TABLE(

"ID" INT,

"JSONMODEL" VARCHAR(5000)

);

DROP TYPE PAL_CHURN_CDT_PMMLMODEL_T;

CREATE TYPE PAL_CHURN_CDT_PMMLMODEL_T AS TABLE(

"ID" INT,

"PMMLMODEL" VARCHAR(5000)

);

3. Creating type for Control table that is used by the procedure to set the PAL function configurations.

DROP TYPE PAL_CHURN_CONTROL_T;

CREATE TYPE PAL_CHURN_CONTROL_T AS TABLE(

"NAME" VARCHAR (100),

"INTARGS" INTEGER,

"DOUBLEARGS" DOUBLE,

"STRINGARGS" VARCHAR(100)

);

STEP 3: Creating Signature for PAL Procedure

PAL procedure will need a signature which specify the inputs/output of the procedure.

Following is the format for creating signature table.

DROP TABLE PAL_CHURN_CDT_PDATA_TBL;

CREATE COLUMN TABLE PAL_CHURN_CDT_PDATA_TBL(

"POSITION" INT,

"SCHEMA_NAME" NVARCHAR(256),

"TYPE_NAME" NVARCHAR(256),

"PARAMETER_TYPE" VARCHAR(7)

);

Then we insert values in the table.

1. If you want to convert decimals to double in the data table type created in the step 1.

INSERT INTO PAL_CHURN_CDT_PDATA_TBL

VALUES (-2, '_SYS_AFL', 'CAST_DECIMAL_TO_DOUBLE', 'INOUT');

INSERT INTO PAL_CHURN_CDT_PDATA_TBL

VALUES (-1, '_SYS_AFL', 'CREATE_TABLE_TYPES', 'INOUT');

2. Insert the INPUT and OUTPUT types.

INSERT INTO PAL_CHURN_CDT_PDATA_TBL VALUES (1, 'PALUSER', 'PAL_CHURN_CDT_DATA_T', 'IN');

INSERT INTO PAL_CHURN_CDT_PDATA_TBL VALUES (2, 'PALUSER', 'PAL_CHURN_CONTROL_T', 'IN');

INSERT INTO PAL_CHURN_CDT_PDATA_TBL VALUES (3, 'PALUSER', 'PAL_CHURN_CDT_JSONMODEL_T', 'OUT');

INSERT INTO PAL_CHURN_CDT_PDATA_TBL VALUES (4, 'PALUSER', 'PAL_CHURN_CDT_PMMLMODEL_T', 'OUT');

STEP 4: Creating PAL Procedure

To create the procedure we will use “ AFLLANG_WRAPPER_PROCEDURE_CREATE” procedure.

CALL SYS.AFLLANG_WRAPPER_PROCEDURE_DROP('PALUSER','PAL_CHURN_CREATEDT_PROC');

CALL SYS.AFLLANG_WRAPPER_PROCEDURE_CREATE('AFLPAL',

'CREATEDT',

'PALUSER',

'PAL_CHURN_CREATEDT_PROC',

PAL_CHURN_CDT_PDATA_TBL);

Syntax of AFLLANG_WRAPPER_PROCEDURE_CREATE:

Param 1 <Area> : Which is always set to AFLPAL.

Param 2 <function name>: PAL function to be used by our procedure for generating the decision tree model.

Param 3 <schema name> : Your Schema name in which the types and tables are created.

Param 4 <procedure name>: User defined name for the procedure.

Param 5 <signature table>: Signature of the procedure.

STEP 5: Creating Control Table

1. Here temporary control table holds the parameters used by the PAL function CREATEDT.

DROP TABLE #PAL_CHURNCONTROL_TBL;

CREATE LOCAL TEMPORARY COLUMN TABLE #PAL_CHURNCONTROL_TBL(

"NAME" VARCHAR(100),

"INTARGS" INTEGER,

"DOUBLEARGS" DOUBLE,

"STRINGARGS" VARCHAR(100)

);

2. Inserting values of the parameters.

--using 100% data for training and 0% for pruning

INSERT INTO #PAL_CHURNCONTROL_TBL

VALUES ('PERCENTAGE', NULL, 1.0, NULL);

-- using 2 threads for processing

INSERT INTO #PAL_CHURNCONTROL_TBL

VALUES ('THREAD_NUMBER', 2, NULL, NULL);

-- to split the string of tree model

INSERT INTO #PAL_CHURNCONTROL_TBL

VALUES ('IS_SPLIT_MODEL', 1, NULL, NULL);

/* JSON model is exported by default but if you also want to export PMML model to be used in other environment like R */

INSERT INTO #PAL_CHURNCONTROL_TBL

VALUES ('PMML_EXPORT', 2, NULL, NULL);

STEP 6: Result: Creating Model Table

DROP TABLE PAL_CHURN_CDT_JSONMODEL_TBL;

CREATE COLUMN TABLE PAL_CHURN_CDT_JSONMODEL_TBL LIKE PAL_CHURN_CDT_JSONMODEL_T;

DROP TABLE PAL_CHURN_CDT_PMMLMODEL_TBL;

CREATE COLUMN TABLE PAL_CHURN_CDT_PMMLMODEL_TBL LIKE PAL_CHURN_CDT_PMMLMODEL_T;

STEP 7: Calling the procedure to generate Decision tree

We will be calling our procedure and will pass the parameters as per the signature defined.

Syntax:

CALL PALUSER.PAL_CHURN_CREATEDT_PROC(

"<schema>"."<tablename>",

"<controll table>",

<json model table>,

<pmml model table>

) WITH OVERVIEW;

CALL PALUSER.PAL_CHURN_CREATEDT_PROC(

"PALUSER"."ChurnTrainData",

"#PAL_CHURNCONTROL_TBL",

PAL_CHURN_CDT_JSONMODEL_TBL,

PAL_CHURN_CDT_PMMLMODEL_TBL

) WITH OVERVIEW;

STEP 8: Checking generated models

SELECT * FROM PAL_CHURN_CDT_JSONMODEL_TBL;

SELECT * FROM PAL_CHURN_CDT_PMMLMODEL_TBL;

Validation Phase

Now that we have trained the model and got the JSON model of the decision tree. We can use it for prediction of new data.

We will be using PREDICTWITHDT PAL function for predicting class for new data.

STEP 1: Importing Validation Dataset in HANA

We have already imported our validation dataset during the training model phase.

STEP 2: Creating types for PAL Procedure

1. Creating type for our validation data. Here we are not including “ContractActivity” field.

DROP TYPE ZPAL_CHURN_PCDT_DATA_T;

CREATE TYPE ZPAL_CHURN_PCDT_DATA_T AS TABLE(

"CustomerID" INT,

"UsageCategoryMonth" NVARCHAR(6),

"AverageUsageYear" NVARCHAR(6),

"UsageCategorypreviousMonth" NVARCHAR(6),

"ServiceType" NVARCHAR(21),

"ProductCategory" NVARCHAR(6),

"MessageAllowance" NVARCHAR(3),

"AverageMarketingActivityBiyearly" DOUBLE,

"AverageVisitTimemin" DOUBLE,

"PagesperVisit" DOUBLE,

"DeltaRevenuePreviousMonth" DOUBLE,

"RevenueCurrentMonth" DOUBLE,

"ServiceFailureRate" DOUBLE,

"CustomerLifetimedays" DOUBLE,

"ProductAbandonment" DOUBLE

);

2. Creating type for input JSON and PMML model. We can also reuse the same model types created during training phase.

DROP TYPE ZPAL_CHURN_PCDT_JSONMODEL_T;

CREATE TYPE ZPAL_CHURN_PCDT_JSONMODEL_T AS TABLE(

"ID" INT,

"JSONMODEL" VARCHAR(5000)

);

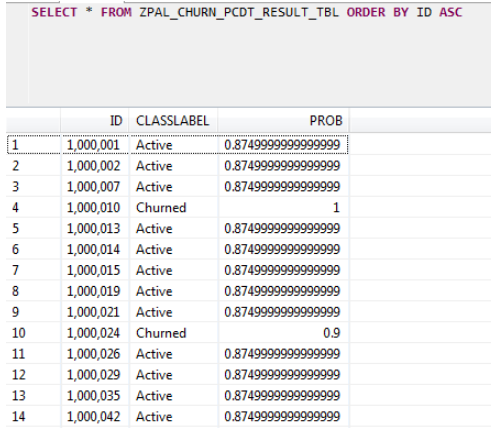

3. Create type for the resultant table. Resultant table is going to hold the Customer id in ID column and “Contract Activity” will be assigned to “CLASSLABEL” field and Probability of falling in that class is assigned to PROB field.

Why do we have this structure for the resultant table?

Because every PAL function has a predefined resultant table structure.

DROP TYPE ZPAL_CHURN_PCDT_RESULT_T;

CREATE TYPE ZPAL_CHURN_PCDT_RESULT_T AS TABLE(

"ID" INTEGER,

"CLASSLABEL" VARCHAR(50),

"PROB" DOUBLE

);

4. Creating type for Control table that is used by the procedure to set the PAL function configurations. We can reuse the PAL_CHURNCONTROL_T created during training phase.

DROP TYPE ZPAL_CHURNCONTROL_T;

CREATE TYPE ZPAL_CHURNCONTROL_T AS TABLE(

"NAME" VARCHAR (100),

"INTARGS" INTEGER,

"DOUBLEARGS" DOUBLE,

"STRINGARGS" VARCHAR (100)

);

STEP 3: Creating Signature for PAL Procedure

In this procedure we will be giving 3 inputs; Validation data set, control parameters of PAL function and Trained JSON model. And procedure is going to return a resultant table which is going to hold the class (active, churned )in which the new customer belongs.

DROP TABLE ZPAL_CHURN_PCDT_PDATA_TBL;

CREATE COLUMN TABLE ZPAL_CHURN_PCDT_PDATA_TBL(

"POSITION" INT,

"SCHEMA_NAME" NVARCHAR(256),

"TYPE_NAME" NVARCHAR(256),

"PARAMETER_TYPE" VARCHAR(7)

);

INSERT INTO ZPAL_CHURN_PCDT_PDATA_TBL

VALUES (1, 'PALUSER', 'ZPAL_CHURN_PCDT_DATA_T', 'IN');

INSERT INTO ZPAL_CHURN_PCDT_PDATA_TBL

VALUES (2, 'PALUSER', 'ZPAL_CHURNCONTROL_T', 'IN');

INSERT INTO ZPAL_CHURN_PCDT_PDATA_TBL

VALUES (3, 'PALUSER', 'ZPAL_CHURN_PCDT_JSONMODEL_T', 'IN');

INSERT INTO ZPAL_CHURN_PCDT_PDATA_TBL

VALUES (4, 'PALUSER', 'ZPAL_CHURN_PCDT_RESULT_T', 'OUT');

STEP 4: Creating PAL Procedure

CALL SYS.AFLLANG_WRAPPER_PROCEDURE_DROP('PALUSER', 'ZPAL_CHURNPREDICTWITHDT_PROC');

CALL SYS.AFLLANG_WRAPPER_PROCEDURE_CREATE('AFLPAL',

'PREDICTWITHDT',

'PALUSER',

'ZPAL_CHURNPREDICTWITHDT_PROC',

ZPAL_CHURN_PCDT_PDATA_TBL);

STEP 5: Creating Control Table

DROP TABLE #ZPAL_CHURNCONTROL_TBL;

CREATE LOCAL TEMPORARY COLUMN TABLE #ZPAL_CHURNCONTROL_TBL(

"NAME" VARCHAR (100),

"INTARGS" INTEGER,

"DOUBLEARGS" DOUBLE,

"STRINGARGS" VARCHAR (100)

);

INSERT INTO #ZPAL_CHURNCONTROL_TBL VALUES ('THREAD_NUMBER', 2, NULL, NULL);

INSERT INTO #ZPAL_CHURNCONTROL_TBL VALUES ('IS_OUTPUT_PROBABILITY', 1, null, null);

INSERT INTO #ZPAL_CHURNCONTROL_TBL VALUES ('MODEL_FORMAT', 0, null, null);

STEP 6: Result: Creating Result Table

DROP TABLE ZPAL_CHURN_PCDT_RESULT_TBL;

CREATE COLUMN TABLE ZPAL_CHURN_PCDT_RESULT_TBL LIKE ZPAL_CHURN_PCDT_RESULT_T;

STEP 7: Calling the procedure to generate result table.

CALL PALUSER.ZPAL_CHURNPREDICTWITHDT_PROC("PALUSER"."ZChurnValidData" ,

"#ZPAL_CHURNCONTROL_TBL",

PAL_CHURN_CDT_JSONMODEL_TBL,

ZPAL_CHURN_PCDT_RESULT_TBL

) WITH OVERVIEW;

STEP 8: Checking result table

SELECT * FROM ZPAL_CHURN_PCDT_RESULT_TBL ORDER BY ID ASC;

Here we see that ID’s are classified as active or churned and with how much probablity it belongs to that class is shown in PROB field.

Cross Validation

Now that we have predicted the contract activity for the validation dataset now its time for cross validating the predicted values with the true values that we have in the “True validation Values” sheet.

Cross validation is done in excel and can be seen in the “Final Output” sheet of the dataset. Here result table data is cross validated with the Validation data and percent of accuracy is calculated at the bottom.

So the model which we created is 90.6% accurate as per the available dataset. In this tutorial we have selected dataset randomly. To get more accuracy you can select proper test cases to train your model and can get 100% accuracy.

No comments:

Post a Comment