In the last article we have discussed how we can easily get big data from the internet, convert it to the required format, massage it a bit, and report on it in SAP Analytics Cloud via SAP HANA which worked pretty well but lacked any sort of proper automation.

In this article we will create an automated flow which can be used to acquire data from the same Petitions website, convert it to the required format and load it to our SAP HANA system which in turn is connected “Live” to SAP Analytics Cloud. Therefore, the reported data in SAP Analytics Cloud would be as recent as possible, depending on our data acquisition flow settings.

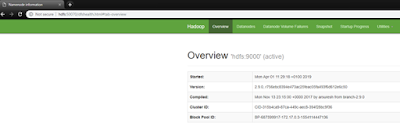

In line with my concept of always learning something new this article will be focused on automating data flows with SAP Data Hub 2.4 pipelines. I will be using the developers edition of SAP Data Hub 2.4 which is delivered as the Docker image, which yet again makes our life so much easier. Since then I moved on and instead of using Ubuntu as a host OS for Docker I started using extremely lightweight Alpine Linux distribution for all my Docker containers. I find that for containerisation the golden rule is “the lighter your host OS – the better”, which makes Alpine a perfect choice and all SAP containers work fine in it (so far, even though it’s not officially supported for HXE or Data Hub containers).

In this article I will start everything from the scratch, creating two separate tables to store Country and Constituency results and adding few new columns to store multiple petitions in one table.

At the end of this article you will have an understanding of how Data Hub works, how to use JavaScript operators in Data Hub, load live data to SAP HANA and compare data from multiple petitions in one SAP Analytics Cloud story.

I won’t be explaining the path all the way to SAP Analytics Cloud as I have explained it in great detail last time.

First thing’s first – go through the following tutorial to download and start SAP Data Hub Developer edition Docker container in your host OS:

The only exception to the above blog post is that I won’t start it with DEV network, but rather bridged and I will expose ports to the host machine, and the run command will look like:

docker run -ti --env VORA_USERNAME=vora --env VORA_PASSWORD=HXEDocker2019 -p 8090:8090 -p 9225:9225 -p 30115:30115 --name datahub --hostname datahub datahub run --agree-to-sap-license

So that we can access it from our host windows machine (make sure to add datahub and hdfs hosts to your windows hosts file):

In this article we will create an automated flow which can be used to acquire data from the same Petitions website, convert it to the required format and load it to our SAP HANA system which in turn is connected “Live” to SAP Analytics Cloud. Therefore, the reported data in SAP Analytics Cloud would be as recent as possible, depending on our data acquisition flow settings.

In line with my concept of always learning something new this article will be focused on automating data flows with SAP Data Hub 2.4 pipelines. I will be using the developers edition of SAP Data Hub 2.4 which is delivered as the Docker image, which yet again makes our life so much easier. Since then I moved on and instead of using Ubuntu as a host OS for Docker I started using extremely lightweight Alpine Linux distribution for all my Docker containers. I find that for containerisation the golden rule is “the lighter your host OS – the better”, which makes Alpine a perfect choice and all SAP containers work fine in it (so far, even though it’s not officially supported for HXE or Data Hub containers).

In this article I will start everything from the scratch, creating two separate tables to store Country and Constituency results and adding few new columns to store multiple petitions in one table.

At the end of this article you will have an understanding of how Data Hub works, how to use JavaScript operators in Data Hub, load live data to SAP HANA and compare data from multiple petitions in one SAP Analytics Cloud story.

I won’t be explaining the path all the way to SAP Analytics Cloud as I have explained it in great detail last time.

Let’s begin

First thing’s first – go through the following tutorial to download and start SAP Data Hub Developer edition Docker container in your host OS:

The only exception to the above blog post is that I won’t start it with DEV network, but rather bridged and I will expose ports to the host machine, and the run command will look like:

docker run -ti --env VORA_USERNAME=vora --env VORA_PASSWORD=HXEDocker2019 -p 8090:8090 -p 9225:9225 -p 30115:30115 --name datahub --hostname datahub datahub run --agree-to-sap-license

So that we can access it from our host windows machine (make sure to add datahub and hdfs hosts to your windows hosts file):

The same applies to HDFS, I will use the same bridged network. In this blog post we won’t be touching HDFS at all, but in anticipation of other articles covering Vora and HADOOP, you might as well create the container now:

docker run -ti -p 50070:50070 --name hdfs --hostname hdfs datahub run-hdfs

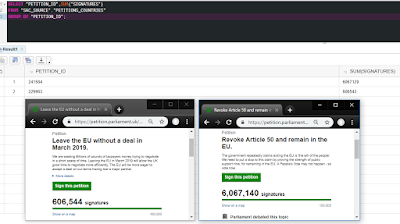

Okay, all good. Now, our patients today will be two petitions, the one that we remember well from the last time –

And it’s antagonist:

What we will be achieving today is extracting data from those two petitions in one go to our SAP HANA system via SAP Data Hub.

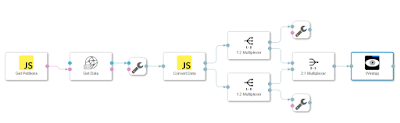

The resulting Data Hub pipeline would look like this:

Don’t be afraid! I will take you through the process of this pipeline creation step by step. There is surprisingly scarce in-depth information about practical Data Hub pipelines online, so I had to discover it piece by piece through trial and error.

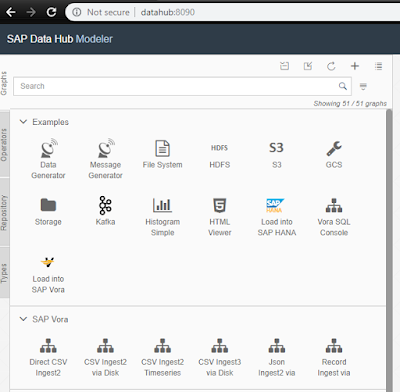

Now, let’s start creating new graph!

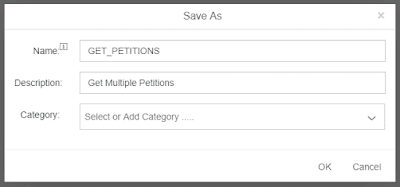

Create new Graph in Data Modeller and save it as “GET_PETITIONS”:

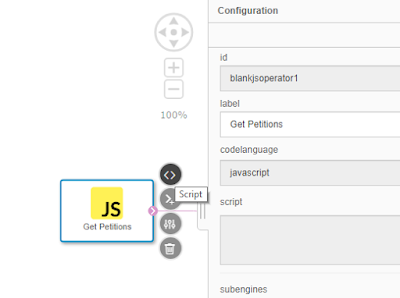

On the left-hand side pane choose “Operators” and drag “Blank JS Operator” to your graph workspace. This would be our starting operator which we will use to start the pipeline and create the GET request headers to acquire petitions from the petitions website.

Rename it to “Get Petitions” and add the following code to it by clicking the “Script” shortcut.

//Set petitions numbers array - adjust as required

var get_petitions = [241584,229963];

//Add DataHub generator

$.addGenerator(gen)

function gen(ctx) {

// Loop through petitions array to set correct GET header for HTTP client

for (i=0; i < get_petitions.length; i++){

var msg = {};

msg.Attributes["message.id"] = "Get_Petition";

msg.Attributes["http.Content-Type"] = "text/json";

msg.Attributes["http.method"] = "GET";

msg.Attributes["http.url"] = "https://petition.parliament.uk/petitions/"+get_petitions[i]+".json";

msg.Body = ("Generate petition" + ' ' + get_petitions[i]);

$.output(msg); //Output message with correct headers

}

};

Here we are specifying the petitions we require in the array variable and then looping through array creating the correct headers.

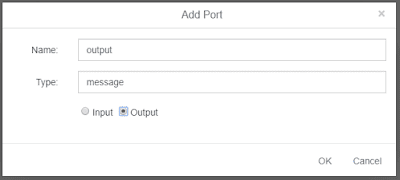

Right click on our JS operator and select “Add Port”, create it as follows.

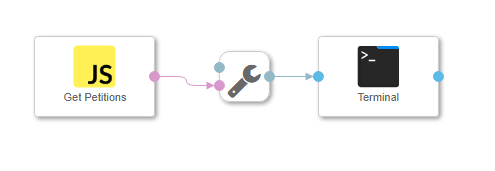

To test how our operator works drag “ToString Converter” and “Terminal” operators to the graph and connect them this way:

What we did is we connected message via string converter to the terminal to check the output of the “Get Petitions” operator. Save your graph and run it.

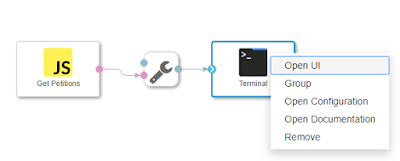

While it’s running right click on the “Terminal” operator and choose “Open UI”:

The UI of the terminal will show the result of JS run:

Wow, that’s cool! Now close the terminal and stop your graph.

Now that we have a properly formatted GET request header, we can actually get data from the URLs specified. Delete “ToString Converter” and “Terminal” operators for now (but we will use this monitoring concept throughout this post). Drag “HTTP client” operator and connect it to JS operator like shown on the image.

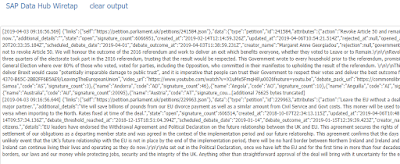

To test the data output from “HTTP client” use another operator – “Wiretap” which can be used for any input data (unlike “Terminal” accepting only strings) and connect it.

Run your graph and access “Wiretap” UI. The result is as expected – we received two JSON sets:

Great stuff! Now what we need to do is to parse this data and load it into SAP HANA tables. Let’s carry on doing that.

Remove “Wiretap” and add “ToString Converter” and “Blank JS Operator” to your graph. Rename the blank operator to “Convert Data”, right click and add three ports – one input port called “input” type string, and two output ports “output” and “output2” type string. Connect your pipeline the following way:

As you can see we have got one input port and two output ports in our “Convert Data” operator.

Add the following JS code to the “Convert Data” operator:

$.setPortCallback("input", onInput); //Initialise input

function onInput(ctx, s) {

var json_data = JSON.parse(s); //Parse JSON data

petition_id = json_data.data.id; //Get petition ID

//Loop through petitions by country and ouptut to the first output port

for (var i = 0; i < json_data.data.attributes.signatures_by_country.length; i++) {

json_data_out = json_data.data.attributes.signatures_by_country[i];

counter = i + 1;

country_name = json_data_out.name.replace(",", "");

$.output(counter + ',' + petition_id + ',' + country_name + ',' + json_data_out.code + ',' + json_data_out.signature_count);

}

//Loop through petitions by constituency and ouptut to the second output port

for (var i = 0; i < json_data.data.attributes.signatures_by_constituency.length; i++) {

json_data_out = json_data.data.attributes.signatures_by_constituency[i];

//Add escape sign or replace special characters

if (json_data_out.mp != null) {

mp = json_data_out.mp.replace("'", "\'");

};

if (json_data_out.name != null) {

const_name = json_data_out.name.replace(/,/g, "").replace(/'/g, "\'");

};

counter = i + 1;

$.output2(counter + ',' + petition_id + ',' + const_name + ',' + mp + ',' + json_data_out.ons_code + ',' + json_data_out.signature_count);

}

};

We are parsing incoming JSON and outputting countries and constituencies data on two separate ports for it to be then into two separate tables in our HANA system.

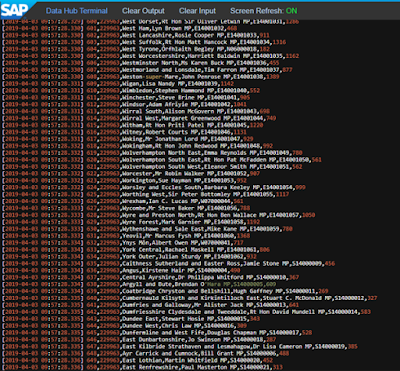

Add “2-1 Multiplexer” operator and connect “Terminal” (adjust “Terminal” config to have Max Size 4096 and spool 100000) to see the results of the run the following way:

Alright, that looks like it should, following JS operator parsing. We have constituency data as “ID, Petition ID, Constituency, MP, ONS Code, Signature Count” and country data as “ID, Petition ID, Country Name, Country Code, Signature Count”. Just the way we want it.

Now that we know that it works, it’s time to load the Country and Constituency data to SAP HANA tables. There is no need to create new tables in Catalogue manually, “SAP HANA Client” operator will do it for us.

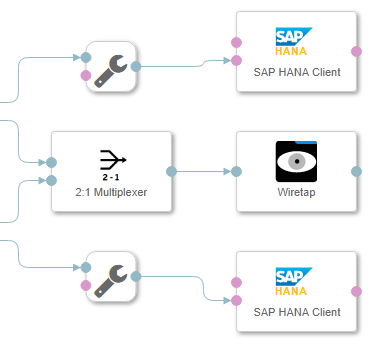

Remove “Terminal” and “Multiplexer” operators and add new “Multiplexers” and “ToString Converters” with “Wiretap” so that the pipeline looks the following way:

The reason we are doing this is so that we can monitor the outputs into HANA via a single “Wiretap” writing data into tables simultaneously. Remember that the first output is Country relevant information and the second output is Constituency relevant information.

Add “SAP HANA Client” operators and connect them to the outputs of string converters like this:

Make sure to connect string output to “data” inputs of SAP HANA Clients. Rename them accordingly, so you remember which is which:

Open config of the “Countries” client and set the connection the following way:

Save and set table name as “SAC_SOURCE.PETITIONS_COUNTRIES” and the following JSON definition of the table columns:

[

{

"name": "ID",

"type": "INTEGER"

},

{

"name": "PETITION_ID",

"type": "INTEGER"

},

{

"name": "COUNTRY_NAME",

"type": "VARCHAR",

"size": 60

},

{

"name": "COUNTRY_CODE",

"type": "VARCHAR",

"size": 3

},

{

"name": "SIGNATURES",

"type": "INTEGER"

}

]

Set table initialisation to “Drop (Cascade)” – this means that HANA will check whether the table exists and drop it (there are of course ways of just updating the values in table).

Do the same for the Constituencies client with the table name “SAC_SOURCE.PETITIONS_CONSTITUENCIES” and column definitions:

[

{

"name": "ID",

"type": "INTEGER"

},

{

"name": "PETITION_ID",

"type": "INTEGER"

},

{

"name": "CONST_NAME",

"type": "VARCHAR",

"size": 60

},

{

"name": "MP",

"type": "VARCHAR",

"size": 60

},

{

"name": "ONS_CODE",

"type": "NVARCHAR",

"size": 10

},

{

"name": "SIGNATURES",

"type": "INTEGER"

}

]

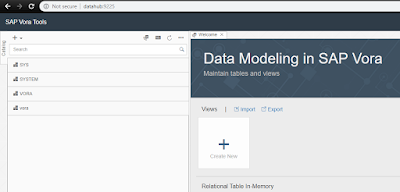

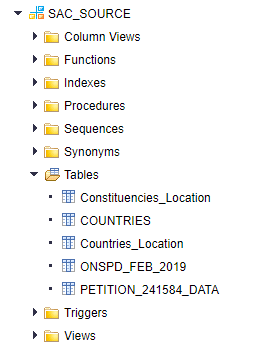

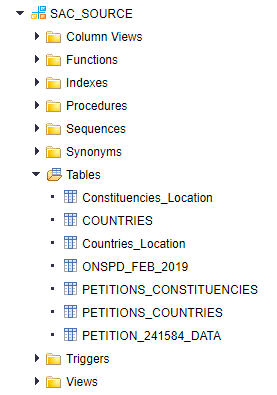

Now, let’s save the graph and check our schema catalogue in SAP HANA. Everything seems as it was last time.

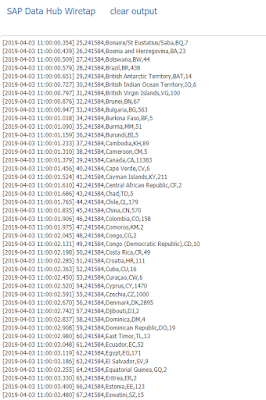

Let’s run our graph and check the output in the “Wiretap”:

It produces the desired output! (not that I am surprised)

Check HANA catalogue and find two newly created tables in there.

Check that the “Wiretap” stopped refreshing – this means all the data should be passed to the tables.

It’s important to note that sometimes pipelines just stall and do nothing even though the status is “Running”. This can be resolved by stopping Data Hub container and restarting it. I am not sure if it’s only happening in Dev version, but it’s a bit annoying, for sure.

Check total signatures by countries in petitions table by issuing the following SQL command:

SELECT "PETITION_ID", SUM("SIGNATURES")

FROM "SAC_SOURCE"."PETITIONS_COUNTRIES"

GROUP BY "PETITION_ID";

Compare the results with the live petitions website data:

The petitions online aren’t completely stale, so while I was taking the screenshots, they have moved a bit, but you get the point – it is as close to the real data as the processing time of Data Hub pipeline allows. For the purposes of this analysis we actually don’t really need the real live data, time lag of 30 minutes to a day or so will be fine.

The pipeline graph can be scheduled to run every day, every hour, or any other time period you require to process live data from the system.

In this article I won’t be going into the details of SAC side, you can figure out the rest by yourself, the next steps will include:

◈ Create two calculation views for Countries and Constituencies based on their respective tables.

◈ Country locations table is fine as we covered all the countries, but constituencies could change, therefore you will have to run the updated script:

DROP TABLE "SAC_SOURCE"."Constituencies_Location";

CREATE COLUMN TABLE "SAC_SOURCE"."Constituencies_Location" (

"ONS_CODE_LD" VARCHAR(10) PRIMARY KEY, "Location" ST_GEOMETRY(3857));

UPSERT "SAC_SOURCE"."Constituencies_Location" ("ONS_CODE_LD")

SELECT DISTINCT "PCON" FROM "SAC_SOURCE"."ONSPD_FEB_2019"

GROUP BY

"PCON";

UPDATE "SAC_SOURCE"."Constituencies_Location"

SET "Location" = new ST_GEOMETRY('POINT(' || "LONG" || ' ' || "LAT" ||

')', 4326).ST_Transform(3857)

FROM (

SELECT DISTINCT MIN("LAT") "LAT", MIN("LONG") "LONG", "PCON"

FROM "SAC_SOURCE"."ONSPD_FEB_2019" GROUP BY

"PCON"),

"SAC_SOURCE"."Constituencies_Location"

WHERE "PCON" = "ONS_CODE_LD";

This script will update the constituencies locations table to have the location of all the constituencies in the country and not only the ones from the particular petition.

◈ Create SAP Analytics models based on the new Calculation Views or update the existing ones.

◈ Create a new story and add Geo map there, filtering based on the petition ID – that way country and constituency will always be in sync based on petition id.

No comments:

Post a Comment