In this blog I’d like to revisit the topic of using alternative IDEs, but this time also look at how to connect to and use a HANA DB. I think we’ve perhaps created the incorrect impression that the only way to perform modern development with HANA (HDI in particular) is to use XSA/Cloud Foundry and the SAP Web IDE. While there is a strong focus on making development easy in the SAP Web IDE and deploying to XSA/CF, that certainly isn’t the only way to develop on HANA. So in this blog I’ll also dive deep into the topic of using the SAP Cloud Platform based HANA As A Service from outside the Cloud Platform tooling.

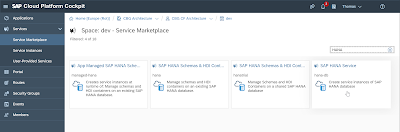

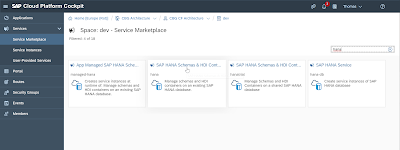

HANA As A Service Setup

So for the purpose of this blog, I’m going to focus on HANA As A Service running in SAP Cloud Platform. As some readers might not be that familiar with this offering of HANA, I’ll begin by looking at the provisioning and setup from scratch.

I start by going into the SAP Cloud Platform Cockpit and drilling into my Cloud Foundry sub-account. From there I can navigate to a Cloud Foundry Space and then from the Service Marketplace I find all available services which I can provision/create an instance of. The naming here might be a little confusing as there are several with the name HANA in them. But the hana-db service is the one that creates an entire HANA Database as a service. By contrast the hana and managed-hana services only create containers/schemas on an existing HANA DB instance.

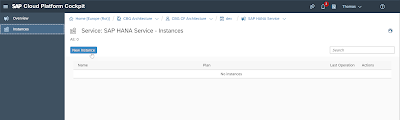

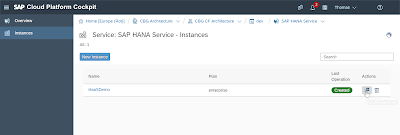

Once we are sure which service will give us what we want, we can begin the wizard to provision the service by pressing the New Instance button.

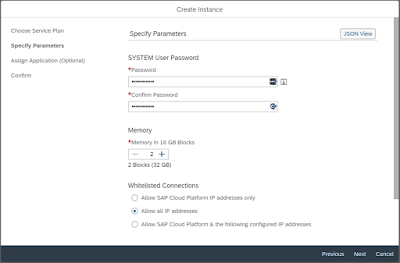

During the Create Instance wizard you will make several choices about the security and sizing of your HANA DB. You must set the initial password for the SYSTEM user, the amount of memory (I’ll stick with the minimum of 32 GB) and the Whitelisted Connections. The Whitelisted Connections setting is probably the most important one for our discussion. If your HANA DB was only going to be used from the SAP Cloud Platform, then it’s safest to only allow SAP Cloud Platform IP addresses. This will block traffic from any other sources. But since we want to develop from other clouds or even our local laptop, we need to allow more connectivity. For maximum protection of a production system, we’d want to build a custom list of allowed IP addresses. But since this is just a development sandbox, we will allow all IP addresses for simplicity. This setting can be changed later after the system is provisioned.

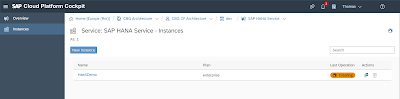

After completing the wizard, you will see the status of Creating. Now you might have to be a little bit patient at this step. The compute and storage are being provisioned on the underlying hyperscaler, and HANA itself is being provisioned and configured. In my experience it takes several minutes at least for this to complete.

Once the system is ready the status will change to Created. Notice the Icon next to the status in the Actions column. This button will launch the SAP HANA Service Dashboard.

The HANA Service Dashboard has a lot of key information for us. From here we can launch the SAP HANA Cockpit and connect as the SYSTEM user. If this were more than a private development sandbox, I would probably do that now and create some development users. Since this is just private, I’ll use SYSTEM for a few test and setup steps. But the real important stuff is the Direct SQL Endpoints. This is the URL and Port for your HANA DB. You can’t rely upon the port calculation (based upon instance or tenant number) that you might know from on premise HANA or HANA Express.

Now that we know the connectivity settings for our HANA service, let’s return to the Service Marketplace. From here we can use the hana service type to create our HDI Container/Schema and Users. Note, you can also do this completely without the service broker and via SQL. I detailed that approach here: https://www.hanaexam.com/2019/04/developing-with-hana-deployment.html. But for our purposes, let’s let the SAP Cloud Platform service broker create the instance and then use that from a different cloud environment.

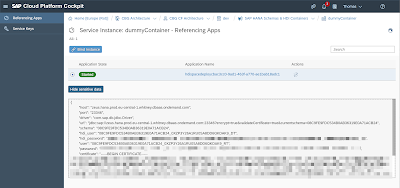

So I create an hdi-shared instance of the hana service type. I do go ahead and bind it to an existing application in my space, not because I need the binding but because this is the only way we can view the service instance details in the Cockpit. What we see here are all the important technical details of the schema which was created for us, as well as the HDI owner and application users/passwords and the connectivity certificate. In the SAP Cloud Platform and SAP Web IDE, the binding of this instance to our applications just injects this information into the Environment of our application. But there is nothing “magical” about that step. You’ll soon see we can do the same sort of thing in other environments as well.

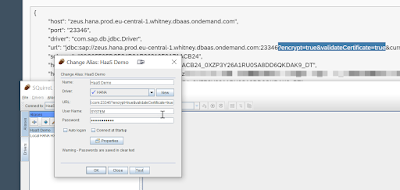

The last note here is to pay attention to the url. Notice the JDBC url parameters of encrypt=true&validateCertificate=true. This is the part that trips up many people when trying to access HANA As A Service. This offering of HANA requires that all connections be secure/encrypted. I find that most on premise HANA development systems often don’t have this requirement and developers are not used to needing the certificate to make connections from the client side. But for HANA As A Service this is an absolute requirement.
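As a quick sanity check before pasting a URL into a client, the two flags can be verified programmatically. The following is only a minimal Node.js sketch: the function name `parseJdbcUrl` and the host and port in the example are made up for illustration and do not come from a real binding.

```javascript
// Parse a HANA JDBC URL and report whether the TLS-related flags are set.
// The host name and port used below are placeholders, not a real endpoint.
function parseJdbcUrl(jdbcUrl) {
  const match = /^jdbc:sap:\/\/([^:\/?]+):(\d+)\/?(?:\?(.*))?$/.exec(jdbcUrl);
  if (!match) {
    throw new Error(`Not a HANA JDBC URL: ${jdbcUrl}`);
  }
  const [, host, port, query = ""] = match;
  // URLSearchParams splits "a=b&c=d" into key/value pairs.
  const params = Object.fromEntries(new URLSearchParams(query));
  return {
    host,
    port: Number(port),
    encrypt: params.encrypt === "true",
    validateCertificate: params.validateCertificate === "true",
  };
}

const info = parseJdbcUrl(
  "jdbc:sap://example-host.invalid:21234?encrypt=true&validateCertificate=true"
);
console.log(info);
```

If either flag comes back as `false`, the URL was most likely copied without its parameters.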

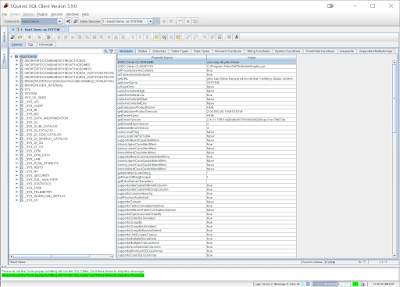

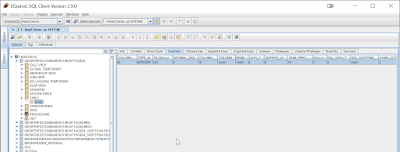

So let’s test out connectivity to our HANA As A Service instance from outside of the SAP Cloud Platform. I’ll just use the SQuirreL SQL Client which I have installed on my laptop. Be sure to use the full URL we saw above in the service broker instance, with the encrypt and validateCertificate options, and not just the URL part shown in the Dashboard earlier. Connect with the SYSTEM user and the password chosen during service provisioning.

If everything’s working right, you should get connected and see a list of all the schemas in the system (including the generated schemas for the HDI container we created in the previous step).

We also have a SQL Console we can use from this tool. This can be a nice alternative to opening the HANA Cockpit and navigating into the Database Explorer if as a developer you need more access than just HDI Containers to the HANA As A Service instance.

Development Access from a Different Cloud Environment

We have a HANA DB instance, an HDI container (with all the necessary users) and we know we can access the DB instance from outside of the SAP Cloud Platform. We are ready to start application development but from an environment outside of SAP Cloud Platform. For this blog, I’ve decided to use Google Cloud Shell. I’ve detailed the basic setup I’m using for Cloud Shell here. But the general steps I’m using can be used in other environments (local laptop, other cloud containers – such as AWS Cloud9).

One thing that isn’t required but is rather handy is to install the HANA Client in your cloud development environment. Getting the client is easy enough as I just download the Linux version of the client from https://tools.hana.ondemand.com/#hanatools. But the key to working with HANA As A Service is once again the encrypted connection requirement.

When I connected from SQuirreL on my laptop, it used the trusted root certificate from my OS certificate store. But in the cloud shell development environment this certificate won’t be present, so you first have to download that root certificate into the environment. Then you can use openssl to convert it to the PEM format required for usage in the HANA Client. We can then use hdbsql (which is installed as part of the HANA Client) and pass it the ssl parameters as you see in the following screenshot:

This is helpful because now from a shell/console in our cloud development environment we can directly run SQL commands to alter or display DB artifacts or the data within them. This is great for quick testing during the development process without having to jump into some other tool.
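For readers wondering what the certificate conversion mentioned above amounts to: openssl does the real work here, but the transformation itself is small. A minimal Node.js sketch, assuming the downloaded root certificate is in binary DER form (the function name `derToPem` and the dummy bytes are made up for this example):

```javascript
// Convert a DER-encoded (binary) X.509 certificate into PEM text.
// PEM is the base64 of the DER bytes, wrapped at 64 columns, between
// BEGIN/END CERTIFICATE marker lines.
function derToPem(derBuffer) {
  const base64 = derBuffer.toString("base64");
  const lines = base64.match(/.{1,64}/g) || [];
  return [
    "-----BEGIN CERTIFICATE-----",
    ...lines,
    "-----END CERTIFICATE-----",
    "",
  ].join("\n");
}

// Demonstration with dummy bytes instead of a real certificate file.
const pem = derToPem(Buffer.from([0x30, 0x82, 0x01, 0x0a]));
console.log(pem);
```

With a real file you would pass the result of `fs.readFileSync` on the downloaded certificate and write the returned string to a `.pem` file.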

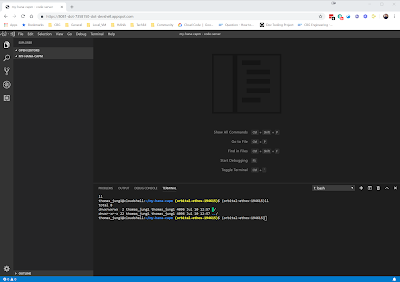

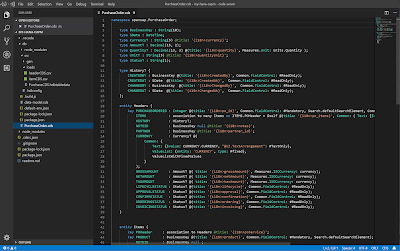

So for the rest of my development process I will use the command shell, but I also want the help of an IDE. I could use the built-in web based editor of Cloud Shell, but as I’ve learned recently there is a cool project called code-server that in a few easy steps makes it possible to run Visual Studio Code from a container environment like Cloud Shell (see https://medium.com/@chees/how-to-run-visual-studio-code-in-google-cloud-shell-354d125d5748). I’ve used that setup to run VS Code in my Google Cloud Shell and I’ll do the rest of my application development for this project in that tool. Notice I still have the bash terminal to the Cloud Shell where I can access tools like the CDS command line or hdbsql.

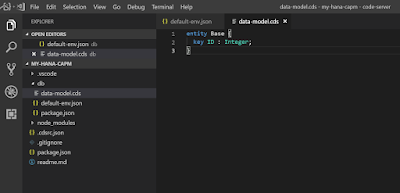

Right now I have an empty project named MY-HANA-CAPM. But I can use the cds command line tool (installed via NPM as detailed in my earlier blog). I’ll use cds init to configure this empty project. This is very similar to running the new project wizard within the SAP Web IDE.

Unlike the earlier blogs I don’t want the Cloud Application Programming Model to use SQLite for local persistence but really create DB artifacts within HANA. If I were developing from the SAP Web IDE it would bind the HDI deployer to my HDI instance automatically. But remember earlier we said this is really just injecting this information into the environment of our application.

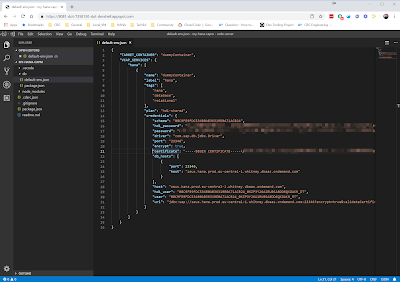

For development purposes we can simulate this binding and environment via a local file in our project. SAP modules like the hdi-deployer and xsenv are all designed to look for a file named default-env.json to simulate these environment binding settings. Therefore we can create such a file and copy the details from the binding we saw in the Cockpit earlier.

This is a very nice solution for private testing, but isn’t intended to be a productive solution. Notice even the .gitignore generated by the cds init command has a filter to make sure these default*json files don’t get sent back to the central code repository. This is where for real deployment to a cloud environment outside of the SAP Cloud Platform you’d need to use a platform specific capability for storing such “secrets” and injecting them into the environment. Most of the cloud providers have such secret functionality but that’s perhaps a broader topic than I want to cover here.
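To make the shape of such a default-env.json file more concrete, here is a minimal Node.js sketch that wraps binding credentials in the VCAP_SERVICES layout. Everything in it is an assumption for illustration: the helper name `buildDefaultEnv`, the instance name and all credential values are placeholders, and the field names follow what an hdi-shared binding typically shows, so compare them against your own binding. The sketch writes to the temp directory rather than into a project.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Wrap the credentials of one service binding in the VCAP_SERVICES layout
// that Cloud Foundry would normally inject into the application environment.
function buildDefaultEnv(instanceName, credentials) {
  return {
    VCAP_SERVICES: {
      hana: [
        {
          name: instanceName,
          label: "hana",
          plan: "hdi-shared",
          tags: ["hana", "database", "relational"],
          credentials,
        },
      ],
    },
  };
}

// Placeholder values only; copy the real ones from the binding.
const env = buildDefaultEnv("my-hdi-container", {
  host: "example-host.invalid",
  port: "21234",
  schema: "MY_SCHEMA",
  user: "MY_SCHEMA_RT",
  password: "<runtime user password>",
  hdi_user: "MY_SCHEMA_DT",
  hdi_password: "<design-time user password>",
  certificate: "<PEM text of the certificate from the binding>",
  url: "jdbc:sap://example-host.invalid:21234?encrypt=true&validateCertificate=true",
});

// Written to a temp directory so the sketch does not touch a real project.
const target = path.join(os.tmpdir(), "default-env.json");
fs.writeFileSync(target, JSON.stringify(env, null, 2));
console.log(`Wrote ${target}`);
```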

We will add a data-model.cds file with a very simple entity. Notice the syntax highlighting. That’s one of the beautiful things of running VS Code from within the Cloud Shell: I’m even able to load extensions such as the SAP CDS tooling.

Now if you are used to developing in the SAP Web IDE, you would probably expect to perform a build CDS and then a build on the db module. This will “compile” the CDS to HDBCDS and then deploy the artifacts into the HANA DB. But obviously, working in this environment, there are no such build buttons. We have to understand what the SAP Web IDE is really doing when it builds. In fact the majority of what it’s doing is just calling operations from the package.json via NPM. We can do the same from the terminal here. So we need a package.json file in our db module to list the dependency on the hdi-deploy module and add the postinstall and start scripts:

```json
{
  "name": "deploy",
  "dependencies": {
    "@sap/hdi-deploy": "^3.11.0"
  },
  "engines": {
    "node": "^10"
  },
  "scripts": {
    "postinstall": "node .build.js",
    "start": "node node_modules/@sap/hdi-deploy/deploy.js --auto-undeploy"
  }
}
```

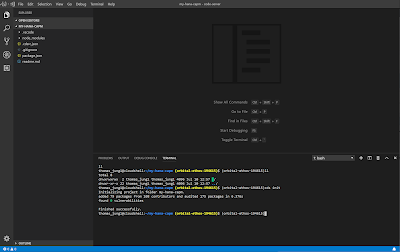

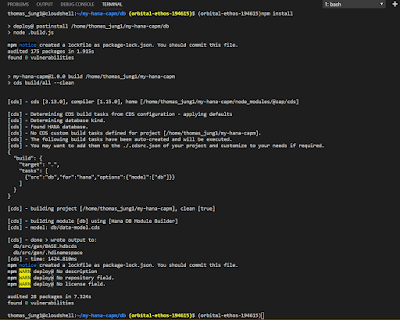

From the terminal we can then use NPM to run the install command. It will download the hdi-deploy module and then perform the CDS build (which compiles to the HDBCDS). This is the same as choosing CDS Build from the SAP Web IDE.

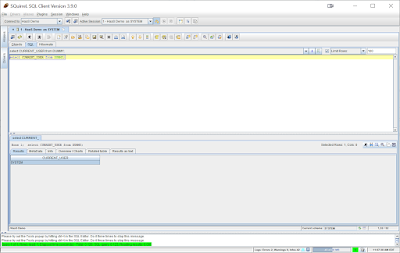

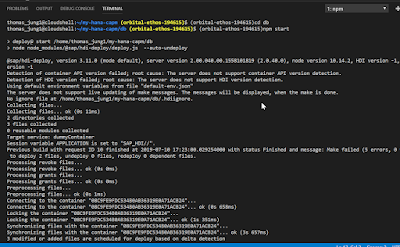

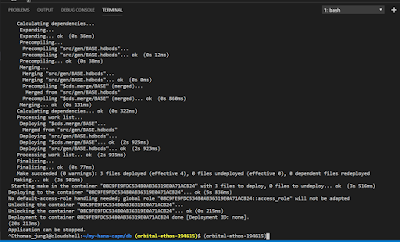

We can then run the NPM start command. This is the same thing as choosing Build on the db module in the SAP Web IDE. It will start the HDI Deploy. It uses the default-env.json file automatically to know how to connect to our HANA DB and deploys all the DB artifacts into it.
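Conceptually, picking up default-env.json just means copying its top-level keys into the process environment. The following Node.js sketch is a simplified imitation of that idea, not the code of the SAP modules. The function name `loadDefaultEnv` is hypothetical, and the choice not to overwrite variables that are already set is an assumption of this sketch; the real modules may behave differently.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Simplified imitation of loading default-env.json: every top-level key
// becomes an environment variable unless it is already set. Objects such as
// VCAP_SERVICES are stored as JSON strings, because environment variables
// can only hold strings.
function loadDefaultEnv(file, env = process.env) {
  if (!fs.existsSync(file)) {
    return env; // nothing to simulate, keep the environment as it is
  }
  const content = JSON.parse(fs.readFileSync(file, "utf8"));
  for (const [key, value] of Object.entries(content)) {
    if (env[key] === undefined) {
      env[key] = typeof value === "string" ? value : JSON.stringify(value);
    }
  }
  return env;
}

// Demo: write a throwaway file and load it into a plain object instead of
// the real process.env.
const demoFile = path.join(os.tmpdir(), "default-env-demo.json");
fs.writeFileSync(
  demoFile,
  JSON.stringify({ VCAP_SERVICES: { hana: [] }, PORT: "4000" })
);
const demoEnv = loadDefaultEnv(demoFile, { PORT: "8080" });
console.log(demoEnv);
```

In the demo, the PORT value that was already present is kept and VCAP_SERVICES is added as a JSON string, which is the form in which a Cloud Foundry binding would deliver it.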

If you want to ensure that the table was really created, we can switch back over to SQuirreL SQL Client and sure enough there’s the new table we defined via CDS Entity:

It’s important to note that no Cloud Foundry or SAP Cloud Platform application services were utilized here. The deployer ran completely in the Node.js runtime of our Google Cloud Shell service. We are only remotely connecting to the HANA DB.

But one table isn’t very fun. I continue to build out my project, adding more CDS entities, data loading from CSV, etc. I can really create any DB artifact I could from the SAP Web IDE, although things like Calculation Views would be challenging without the dedicated editors. Luckily nearly all other DB artifacts use simple text, JSON or SQL DDL based syntax, which works great in any editor.

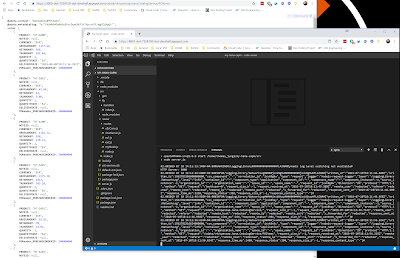

But I’m not limited to DB artifact development. I can also perform Node.js or UI5 development easily from here as well. For example, I add a srv module for both custom Node.js REST services and CDS OData V4 services. The XSENV module we use to load HANA connectivity options works just as well with the default-env.json as the HDI deployer does. So we can run the Node.js service directly from the Cloud Shell and, when it runs, it also remotely connects to the HANA DB.

And there’s really no special coding in the Node.js code needed to make this happen. You can look at the code here: https://github.com/jungsap/my-hana-capm/blob/master/srv/server.js

The exact same code would run on Cloud Platform directly and work the same way. As long as you have some way to fill the environment, or can pass the hanaOptions directly, the connectivity is going to work. This gives you a variety of deployment and hosting options for your application logic that can still connect securely and easily to a remote HANA DB instance.
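To illustrate where such connection options come from, here is a minimal Node.js sketch of looking up HANA credentials by tag in a VCAP_SERVICES value. It only imitates the kind of lookup a helper module performs and is not the code from the linked repository. The function name `findCredentialsByTag` and all sample values are made up.

```javascript
// Find the credentials of the first bound service that carries a given tag.
// vcapServicesJson is the JSON string found in the VCAP_SERVICES variable.
function findCredentialsByTag(vcapServicesJson, tag) {
  const services = JSON.parse(vcapServicesJson || "{}");
  for (const instances of Object.values(services)) {
    for (const instance of instances) {
      if ((instance.tags || []).includes(tag)) {
        return instance.credentials;
      }
    }
  }
  throw new Error(`No bound service with tag "${tag}" found`);
}

// Sample environment value with placeholder credentials.
const sampleEnv = JSON.stringify({
  hana: [
    {
      name: "my-hdi-container",
      tags: ["hana", "database", "relational"],
      credentials: {
        host: "example-host.invalid",
        port: "21234",
        schema: "MY_SCHEMA",
      },
    },
  ],
});

const hanaOptions = findCredentialsByTag(sampleEnv, "hana");
console.log(hanaOptions.schema);
```

Whether the JSON string comes from a real platform binding, a secrets store or a local default-env.json makes no difference to this lookup, which is the point of the paragraph above.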

We’ve also seen that you don’t have to be afraid to venture outside the SAP Web IDE as your only development environment either. The SAP Web IDE obviously provides a lot of convenience in its dedicated nature, but once you understand what it’s doing when performing operations like building and running, you see you can replicate the same operations outside the SAP Web IDE as well. As long as you don’t mind working without a few of the wizards and templates, there is a wide world of options. So much of the future of cloud native development is about combining the best capabilities from a variety of sources into the maximum value.
