Welcome to HANA ML Python API for sequential pattern mining aka (SPM) method.I explained first four methods of association analysis in my previous blog post.

Note: Make Sure your python environment with HANA ML is up and running ,if not please follow the steps mentioned in previous blog post.

The sequential pattern mining algorithm (SPM) searches for frequent patterns in sequence databases. A sequence database consists of ordered elements or events. For example, a customer first buys bread, then eggs and cheese, and then milk. This forms a sequence consisting of three ordered events. We consider an event or a subsequent event is frequent if its support, which is the number of sequences that contain this event or sub-sequence, is greater than a certain value. This algorithm finds patterns in input sequences satisfying user defined minimum support.

Understand Sequence Pattern Mining before going into practice :

◈ Find all subsets of items that occur with a specific sequence in all other transactions:

e.g {Playing cricket -> high ECG -> Sweating}.

◈ Find all rules that correlate the order of one set of items after that another set of items in the transaction database:

e.g 72% of users who perform a web search then make a long eye gaze

over the ads follow that by a successful add-click.

Prerequisites:

◈ The input data does not contain null value.

◈ There are no duplicated items in each transaction

Let’s Start:

Import Packages

##First, import packages needed in the data loading.

from hana_ml import dataframe

from data_load_utils import DataSets, Settings

Setup Connection

In our case, the data is loaded into a table called “PAL_APRIORI_TRANS_TBL” in HANA from a csv file “apriori_item_data.csv”. To do that, a connection to HANA is created and then passed to the data loader. To create a such connection, a config file, config/e2edata.ini is used to control the connection parameters. A sample section in the config file is shown below which includes HANA url, port, user and password information.

***************************

[hana]

url=host-url

user=username

passwd=userpassword

port=3xx15

***************************

Maintain the login information in one config file & have it ready in your root folder

url, port, user, pwd = Settings.load_config("../config/e2edata.ini")

connection_context = dataframe.ConnectionContext(url, port,user,pwd)

print(connection_context.connection.isconnected())

If connection is successful – “True”

Data-Set:

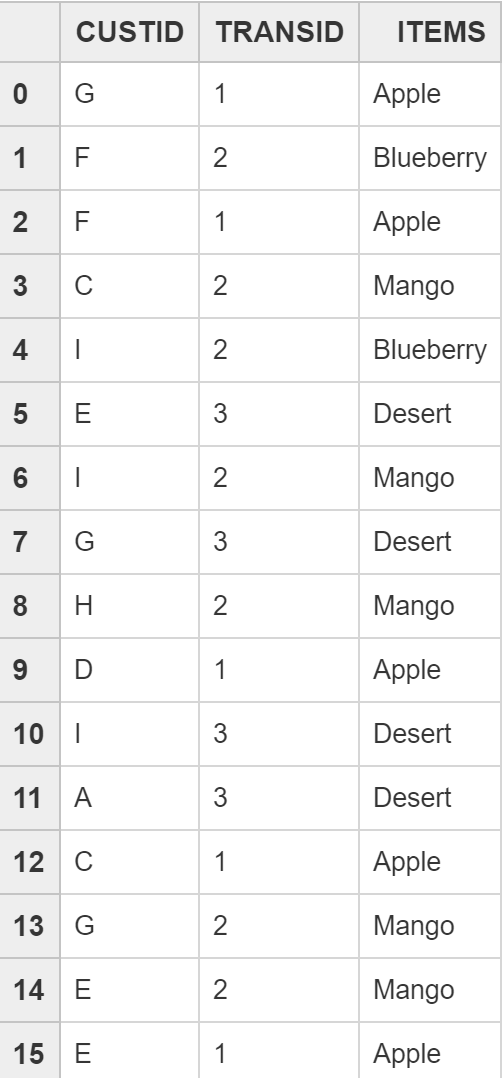

we will analyze the store data for frequent pattern mining ,this is the sample data which is available on SAP’s help webpage.

Attribute Information:

1. CUSTID – Customer ID

2. TRANSID – Transaction ID

3. ITEMS – Item of Transaction

Check if table already exists in your schema Select * from PAL_SPM_DATA_TBL

Note: Make Sure your python environment with HANA ML is up and running ,if not please follow the steps mentioned in previous blog post.

Introduction and Algorithm description:

The sequential pattern mining algorithm (SPM) searches for frequent patterns in sequence databases. A sequence database consists of ordered elements or events. For example, a customer first buys bread, then eggs and cheese, and then milk. This forms a sequence consisting of three ordered events. We consider an event or a subsequent event is frequent if its support, which is the number of sequences that contain this event or sub-sequence, is greater than a certain value. This algorithm finds patterns in input sequences satisfying user defined minimum support.

Understand Sequence Pattern Mining before going into practice :

◈ Find all subsets of items that occur with a specific sequence in all other transactions:

e.g {Playing cricket -> high ECG -> Sweating}.

◈ Find all rules that correlate the order of one set of items after that another set of items in the transaction database:

e.g 72% of users who perform a web search then make a long eye gaze

over the ads follow that by a successful add-click.

Prerequisites:

◈ The input data does not contain null value.

◈ There are no duplicated items in each transaction

Let’s Start:

Import Packages

##First, import packages needed in the data loading.

from hana_ml import dataframe

from data_load_utils import DataSets, Settings

Setup Connection

In our case, the data is loaded into a table called “PAL_APRIORI_TRANS_TBL” in HANA from a csv file “apriori_item_data.csv”. To do that, a connection to HANA is created and then passed to the data loader. To create a such connection, a config file, config/e2edata.ini is used to control the connection parameters. A sample section in the config file is shown below which includes HANA url, port, user and password information.

***************************

[hana]

url=host-url

user=username

passwd=userpassword

port=3xx15

***************************

Maintain the login information in one config file & have it ready in your root folder

url, port, user, pwd = Settings.load_config("../config/e2edata.ini")

connection_context = dataframe.ConnectionContext(url, port,user,pwd)

print(connection_context.connection.isconnected())

If connection is successful – “True”

Data-Set:

we will analyze the store data for frequent pattern mining ,this is the sample data which is available on SAP’s help webpage.

Attribute Information:

1. CUSTID – Customer ID

2. TRANSID – Transaction ID

3. ITEMS – Item of Transaction

Load Data for SPM:

Check if table already exists in your schema Select * from PAL_SPM_DATA_TBL

data_tbl = DataSets.load_spm_data(connection_context)

The function DataSets.load_spm_data() is used to decide load or reload the data from scratch. If it is the first time to load data, an example of return message is shown below:

ERROR:hana_ml.dataframe:Failed to get row count for the current Dataframe, (259, ‘invalid table name: Could not find table/view

PAL_SPM_DATA_TBL in schema DM_PAL: line 1 col 37 (at pos 36)’)

Table PAL_SPM_DATA_TBL doesn’t exist in schema DM_PAL

Creating table PAL_SPM_DATA_TBL in schema DM_PAL ….

Drop unsuccessful

Creating table DM_PAL.PAL_SPM_DATA_TBL

Data Loaded:100%

#####################

if data is already loaded into HANA:

data_tbl = DataSets.load_spm_data(connection_context)

print("Table Name is: " +str(data_tbl))

import pandas as pd

Table PAL_SPM_DATA_TBL exists and data exists

Table Name is: PAL_SPM_DATA_TBL

Create dataframes using Pandas Dataframes for data load from SAP HANA

##Create a dataframe df from PAL_SPM_TRANS_TBL for the following steps.

df_spm = pd.DataFrame(columns=['CUSTID' , 'TRANSID' , 'ITEMS'])

df_spm = dataframe.create_dataframe_from_pandas(connection_context=connection_context, pandas_df=df_spm, table_name=data_tbl, force=False, replace=True)

Re-execute the below command and check what message it returns

data_tbl = DataSets.load_spm_data(connection_context)

print("Table Name is: " +str(data_tbl))

Creating table DM_PAL.PAL_SPM_DATA_TBL

Data Loaded:100%

Table Name is: PAL_SPM_DATA_TBL

df = df_spm

Display dataframe records

df.collect().head(100) ##Display Data

df.dropna() ##Drop NAN if any of the blank record is present in your dataset

print("Toal Number of Records : " + str(df.count()))

print("Columns:")

df.columns

Data Operations:

Get insights of dataset using the following few operations , you can play more by applying few more functions to get more insights.

Filter Data:

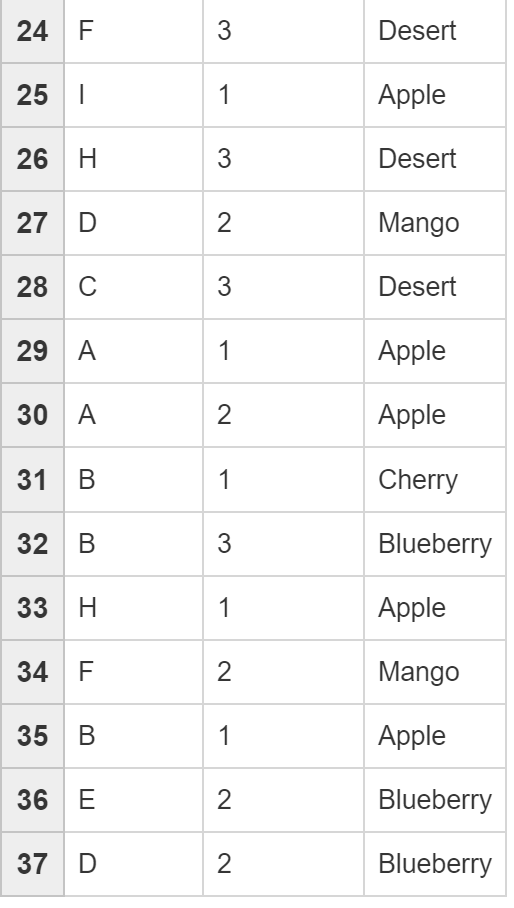

Fetch all customers who has CUSTID = ‘A’

df.filter("CUSTID = 'A'").head(10).collect()

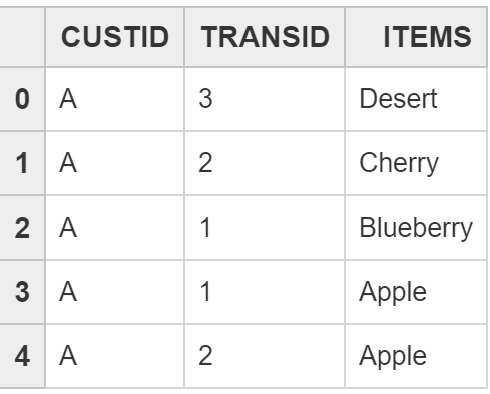

Fetch all customers having transaction id = 1

df.filter('TRANSID = 1').head(10).collect()

Fetch all customers & transaction having item id = ‘Apple’

df.filter("ITEMS = 'Apple'").head(10).collect()

Group by on Data:

GroupBy ITEMS

df.agg([('count' , 'ITEMS' , 'TOTAL TRANSACTIONS')] , group_by='ITEMS').head(100).collect()

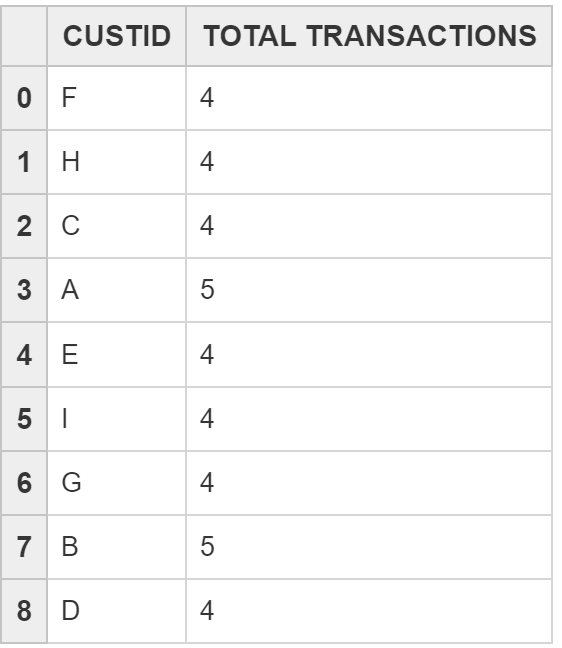

GroupBy CUSTID

df.agg([('count' , 'CUSTID', 'TOTAL TRANSACTIONS')] , group_by='CUSTID').head(100).collect()

GroupBy TRANSID

df.agg([('count' , 'TRANSID', 'TOTAL TRANSACTIONS')] , group_by='TRANSID').head(100).collect()

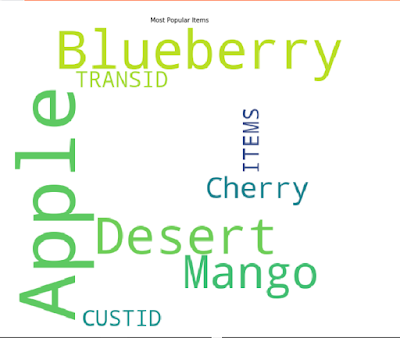

Display the most popular items:

Import matplotlib library to display the plot having the most popular items in our dataset

import matplotlib.pyplot as plt

from wordcloud import WordCloud

plt.rcParams['figure.figsize'] = (10, 10)

wordcloud = WordCloud(background_color = 'white', width = 500, height = 500, max_words = 120).generate(str(df_spm.head(100).collect()))

plt.imshow(wordcloud)

plt.axis('off')

plt.title('Most Popular Items',fontsize = 10)

plt.show()

The most popular items from plot are Apple & Blueberry and if we try to find the count of transactions for both of them :

Count of Apple – df.filter(“ITEMS = ‘Apple'”).head(100).count() = 10

Count of Blueberry – df.filter(“ITEMS = ‘Blueberry'”).head(100).count() = 10

Import SPM Method from HANA ML Library:

from hana_ml.algorithms.pal.association import SPM

Setup SPM instance:

sp = SPM(conn_context=connection_context,

min_support=0.5,

relational=False,

ubiquitous=1.0,

max_len=10,

min_len=1,

calc_lift=True)

Method

fit(data, customer=None, transaction=None, item=None, item_restrict=None, min_gap=None)

Sequential pattern mining from input data.Execute the method

Training of model with dataset.

sp.fit(data=df_spm, customer='CUSTID', transaction='TRANSID', item='ITEMS')

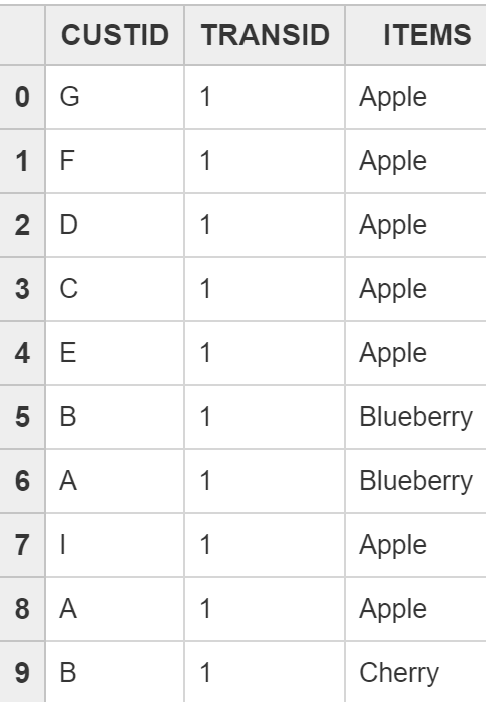

Collect the result

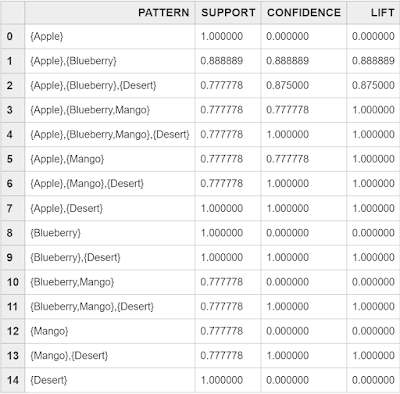

sp.result_.collect()

Result Analysis:

◈ Item-set Apple has support 1.0 indicates the frequency of the item in all the transactions , most frequent item – confidence & lift is 0 for all the single items which states there is no antecedent & consequent item of them

◈ Consider (Apple , Blueberry): Support is .88 (Frequency of these items together is 88%) , Confidence is 88% means if someone is buying Apple then 88% chances they will also have blueberry in their bucket , lift is .89 close to 1 indicates high Association among items purchase.

◈ Result displays most of the items are having support , Confidence & Lift more than 70% indicates the mining of highly frequent items & can be considered strong association rules.

No comments:

Post a Comment