It took me nearly two years to write the second part of this partition series. The first one for BW partitioning can be found here.

The main question of this blog: How to determine the best partitioning combination?

In an ERP or S/4 HANA system it is possible that a table is approaching the 2 billion mark. This is a HANA design limit for a column store table (per partition). In this case you have to partition the table. But different than on a BW system there are no standard rules for a partitioning. This means you have to choose a partitioning rule by your own. But how to determine the best partitioning combination?

1. Alerts

2. Find tables with high amount of records

3. Term clarification

i. selectivity

ii. cardinality

iii. HASH Partitioning

iv. Range Partitioning

4. Designing Partitions

5. Example BSEG

6. Example BSIS

7. Example <tbd>

8. Parameter for parallel splitting

At first check if partitioning is needed at all which means check the tables with the most row entries. There is a limit per table/partition of 2 billion rows by the design of HANA.

If your alerting is working correct you will receive an alert (ID 27). It looks like this:

“Record count of column-store table partitions ( ID 27 )

Determines the number of records in the partitions of column-store tables. A table partition cannot contain more than 2,147,483,648 (2 billion) rows.”

◉ Interval 1 hour

◉ High: 1,900,000,000

◉ Medium: 1,800,000,000

◉ Low: 1,500,000,000

The limitations are described in SAP note 2154870.

The main question of this blog: How to determine the best partitioning combination?

In an ERP or S/4 HANA system it is possible that a table is approaching the 2 billion mark. This is a HANA design limit for a column store table (per partition). In this case you have to partition the table. But different than on a BW system there are no standard rules for a partitioning. This means you have to choose a partitioning rule by your own. But how to determine the best partitioning combination?

1. Alerts

2. Find tables with high amount of records

3. Term clarification

i. selectivity

ii. cardinality

iii. HASH Partitioning

iv. Range Partitioning

4. Designing Partitions

5. Example BSEG

6. Example BSIS

7. Example <tbd>

8. Parameter for parallel splitting

1. Alerts

At first check if partitioning is needed at all which means check the tables with the most row entries. There is a limit per table/partition of 2 billion rows by the design of HANA.

If your alerting is working correct you will receive an alert (ID 27). It looks like this:

“Record count of column-store table partitions ( ID 27 )

Determines the number of records in the partitions of column-store tables. A table partition cannot contain more than 2,147,483,648 (2 billion) rows.”

◉ Interval 1 hour

◉ High: 1,900,000,000

◉ Medium: 1,800,000,000

◉ Low: 1,500,000,000

The limitations are described in SAP note 2154870.

2. Find tables with high amount of records

Check all tables by high amount of records. Therefore, you can use the SQL from 1969700 (HANA_Tables_TopGrowingTables_Records_History).

Rule of thumb for the initial partitioning:

◉ 100 mio entries per partition

◉ 500 mio entries per partition

◉ if you choose too many partitions you can achieve a bad performance, because one thread per partition have to be triggered (e.g. you have 20 threads as statement concurrency limit and 40 partition have to be scanned which results in waiting for resources)

◉ if you choose too less partitions, it can be that you have to repartition pretty timely which means another maintenance window / downtime

◉ recommendation: HASH partitioning on a selective column, typically part of primary key

In our example we determined BSEG and BSIS as tables with high amount of rows. For timely planning of maintenance you should act when the table exceeds 1,5 billion entries.

For our scenario BSEG has 4,5 billion and BSIS has 4,1 billion rows. So, they are already partitioned. But is this partitioning over the time since the initial creation still optimal?

3. Term clarification

To determine the optimal partitioning combination, we have to clarify some terms:

◉ selectivity

◉ cardinality

◉ HASH Partitioning

◉ Range Partitioning

Cardinality

In the context of databases, cardinality refers to the uniqueness of data values contained in a column. High cardinality means that the column contains a large percentage of totally unique values. Low cardinality means that the column contains a lot of “repeats” in its data range. In a customer table, a low cardinality column would be the “Gender” column. This column will likely only have “M” and “F” as the range of values to choose from, and all the thousands or millions of records in the table can only pick one of these two values for this column. Ok, to be accurate nowadays we have to add a third value for diverse “D”.

Note: Partitioning works better when the cardinality of the partitioning field is not too high

Selectivity

The selectivity basically is a measure of how much variety there is in the values of a given table column in relation to the total number of rows in a given table. The cardinality is just part of the formula that is used to calculate the selectivity.

Selectivity = cardinality/(number of rows) * 100

Hash Partitioning

Hash partitioning is used to distribute rows to partitions equally for load balancing. The number of the assigned partition is computed by applying a hash function to the value of a specified column. Hash partitioning does not require an in-depth knowledge of the actual content of the table.

Source: openHPI: In-Memory_Data_Management_2017

Range Partitioning

Range partitioning creates dedicated partitions for certain values or value ranges in a table. For example, a range partitioning scheme can be chosen to create a partition for each calendar month. Partitioning requires an in-depth knowledge of the values that are used or are valid for the chosen partitioning column.

Partitions may be created or dropped as needed and applications may choose to use range partitioning to manage data at a fine level of detail, for example, an application may create a partition for an upcoming month so that new data is inserte

Source: openHPI: In-Memory_Data_Management_2017

4. Designing Partitions

For details please read the “Designing Partitions” section in the documentation.

As summary:

◉ Use partitioning columns that are often used in WHERE clauses for partition pruning

◉ If you don’t know which partition scheme to use, start with hash partitioning

◉ Use as many columns in the hash partitioning as required for good load balancing, but try to use only those columns that are typically used in requests

◉ Queries do not necessarily become faster when smaller partitions are searched. Often queries make use of indexes and the table or partition size is not significant. If the search criterion is not selective though, partition size does matter.

◉ Using time-based partitioning often involves the use of hash-range partitioning with range on a date column

◉ If you split an index (SAP names the CS tables also as index), always use a multiple of the source parts (for example 2 to 4 partitions). This way the split will be executed in parallel mode and also does not require parts to be moved to a single server first.

◉ Do not split/merge a table unless necessary.

Ideally tables have a time criterion in the primary key. This can then be used for time-based partitioning.

◉ Single level partitioning limitation: the limitation of only being able to use key columns as partitioning columns

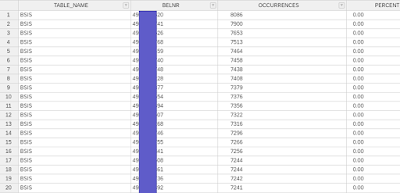

5. Example BSEG

We determined that BSEG has 4,5 billion rows. Now we need details on which column the table has to be partitioned.

The recommendation of SAP is to use a HASH partitioning on BELNR in note 2044468.

Note 2289491 describes a range partitioning on BUKRS. As you can see it always depends on how your system is being used. Ok, let’s find it out in a test environment.

Now we know:

◉ table has to be partitioned (4,5 billion rows)

◉ most scanned columns

◉ recommendation for partitioning by SAP

Questions to success:

◉ what is the primary key of the table?

◉ which columns are often selected?

◉ which columns are often used in where clause

◉ what is the cardinality of this columns?

◉ how many rows are inside the tables?

◉ how many partitions are needed?

To quote the HPI course on the partitioning details (In-Memory_Data_Management_2017):

“There are number of different optimization goals to be considered while choosing a suitable partitioning strategy. For instance, when optimizing for performance, it makes sense to have tuples of different tables, that are likely to be joined for further processing, on one server. This way the join can be done much faster due to optimal data locality, because there is no delay for transferring the data across the network. In contrast, for statistical queries like counts, tuples from one table should be distributed across as many nodes as possible in order to benefit from parallel processing.”

To sum up, the best partitioning strategy depends very much on the specific use case.

The main challenge for hash-based partitioning is to choose a good hash function, that implicitly achieves locality or access improvements.

Primary key BSEG:

MANDT

BUKRS

BELNR

GJAHR

BUZEI

The following columns have a particularly high amount of scanned records (SQL: “HANA_Tables_ColumnStore_Columns_Statistics”, MIN_SCANNED_RECORDS_PER_S = 5000000):

◉ To achieve this just fill in your table inside the modification section

There are recommendations for the most famous SAP standard tables within note 2044468. If you are close to the standard and don’t use a lot of custom code try this partitioning recommendations first. If you have heavy load with own statement which uses a totally different execution plan you may have to determine your own partitioning columns.

If you want to check the optimal partitioning combinations use this statement:

select statement_hash, statement_string,

execution_count, total_execution_time from m_sql_plan_cache

where statement_string like '%BSEG%'

order by total_execution_time desc;

From the result you have to analyze the “where” clause and find a common pattern.

Take as few fields as needed. Normally fields like MATNR, BELNR or DOCNR are selective fields and well suited for partitioning.

For a deep dive you can additionally run the DataCollector with the selected statement_hash (HANA_SQL_StatementHash_DataCollector) within the statement collection (1969700).

Additionally, you can use the ColumnStore statistics (HANA_Tables_ColumnStore_Columns_Statistics_2.00.030+ ) which is also part of the SQL collection to analyze how many scans are on which column. If you are using multiple clients, it may be wise to add the field MANDT as partitioning criteria.

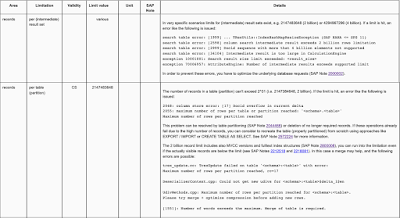

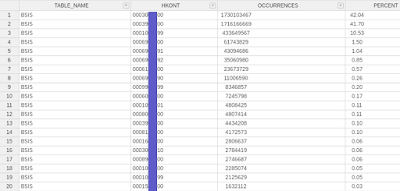

HANA_Data_ColumnValueCounter_CommandGenerator

SELECT TOP 100

'BSEG' TABLE_NAME,

S.*

FROM

( SELECT

BUKRS,

LPAD(COUNT(*), 11) OCCURRENCES,

LPAD(TO_DECIMAL(COUNT(*) / SUM(COUNT(*)) OVER () * 100, 10, 2), 7) PERCENT

FROM

( SELECT

BUKRS

FROM

"SAPREQ"."BSEG

)

GROUP BY

BUKRS

ORDER BY

OCCURRENCES DESC

) S

Only 2 values for BUKRS “0010” and “0020”. This means we have a too low cardinality and partitioning won’t work well on this attribute. So, this means both extreme too high and low are bad.

Most scanned columns are PCTR, HKONT and BELNR.

We have about 30 clients in this test system which means it makes sense to use it in the partitioning clause on the first level as entry point.

1. MANDT first level range partitioning

2. BELNR second level hash partitioning

With 4,5 billion partitions we need about 12-15 partitions which means 300 million rows per table (rule of thumb: between 100 – 500 million), if we would use only single partitioning. With multilevel partitioning the fill degree of the partitioning depends on the single column data cardinality of each level.

Note: At the end it is just a indication for the given analyzed timeframe and no guarantee for the future. This is a process you should repeat if the application and selections are changing. This can be due user behaviour or new SAP packages or custom code.

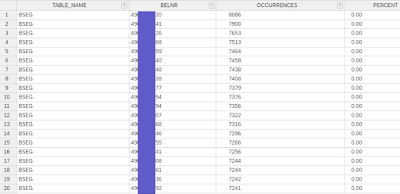

6. Example: BSIS

Primary Key

MANDT

BUKRS

HKONT

AUGDT

AUGBL

ZUONR

GJAHR

BELNR

BUZEI

Column Stats

often executed statements on BSIS

cardinality

Facts:

◉ 4,1 billion rows

◉ About 30 client values

◉ Only one value for BUKRS

◉ The top occurrences in BELNR have summed up not 0,001%

The recommendation of SAP is a RANGE partitioning on BUKRS. If RANGE on BUKRS or a similar range partitioning is not possible, use HASH partitions on BELNR.

We can’t use BUKRS as partitioning object due too low cardinality. BELNR alone has a high cardinality and could be not efficient enough.

2 recommendation is in this scenario:

1. MANDT first level range partitioning

2. HKONT second level range partitioning

1. MANDT first level range partitioning

2. BELNR second level hash partitioning

With 4,1 billion partitions we need about 12-14 partitions which means 300 million rows per table (rule of thumb: between 100 – 500 million), if we would use only single partitioning. With multilevel partitioning the fill degree of the partitioning depends on the single column data cardinality of each level.

7. another example

<tbd>

8. Parameter for splitting

As already mentioned in the first part of this series, we can optimize the splitting procedure with some parameters which have to be adjusted according your hardware:

indexserver.ini -> [partitioning] -> split_threads (default: 16)

indexserver.ini -> [partitioning] -> bulk_load_threads (default: 4)

◉ Under a system with 176 CPU cores, the repartitioning was failed due to this error and completed in 9.18H with settings below.

indexserver.ini -> [partitioning] -> split_threads =50

indexserver.ini -> [partitioning] -> bulk_load_threads = 8

◉ With 120 CPU cores, it took 14.40H without the error after setting as below.

indexserver.ini -> [partitioning] -> split_threads =95

indexserver.ini -> [partitioning] -> bulk_load_threads = 10

No comments:

Post a Comment