Overview

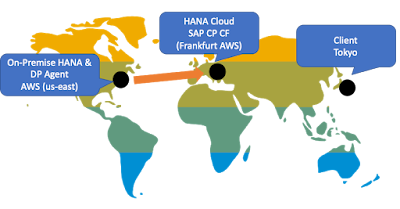

In this blog, I will transfer large volume of data from On-Premise SAP HANA to SAP HANA Cloud. I will use Smart Data Integration (SDI), which is ETL function of SAP HANA Cloud, to transfer data.

Environment:

◉ SAP HANA 2.0 Revision 42 : On-Premise、running on AWS us-east

◉ Data Provisioning Agent 2.0 SP04 Patch24 : running on AWS us-east

◉ SAP HANA Cloud on SAP Cloud Platform Cloud Foundry : running on AWS Frankfurt

I used “LINEITEM” table of TPC-H. I generated 50GB TPC-H data.

◉ Record Count : 300,005,811

◉ CSV File Size : 36.8GB

◉ Table Size (On-Premise SAP HANA) : 7.8GB

1. Transfer data (default settings)

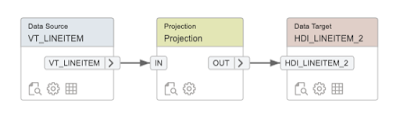

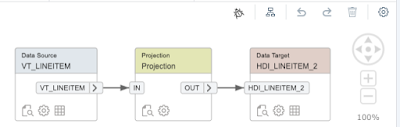

Create a simple flowgraph which just transfer data from On-Premise to Cloud.

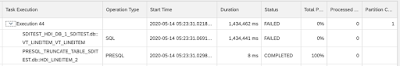

Execute the job without changing any settings. However, it failed.

According to the console message, it shows Out of Memory error occurred. It seems to be occurred on DP Agent side.

Check the trace file, <DP Agent installation folder>/log/framework.trc. I shows Out of Memory error occurred when fetching data.

2. Change JAVA Heap Size of DP Agent

First, change JAVA Heap Size of DP Agent with reference to “6.1.1 Sample Data Provisioning Agent Tuning” of Best Practices Guide.

Open INI file (<DP Agent installation folder>/dpagent.ini) and change the value of Xmx to 16GB.

Default is 4GB.

Changed to 16GB. (The DP Agent server has 32GB memory)

Restart the DP Agent and execute the folowgraph again. Unfortunately it failed again.

It seems more data are fetched but it is still not enough. It is necessary to take other action because there is a limit to increasing the heap memory size.

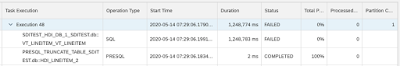

3. Create Task Partitions

Next, create task partitions to the flowgraph with reference to “6.1.3 Task Partitioning” of Best Practices Guide. By this, the job does not acquire and load the all data at once, but it repeatedly acquire and load the divided data.

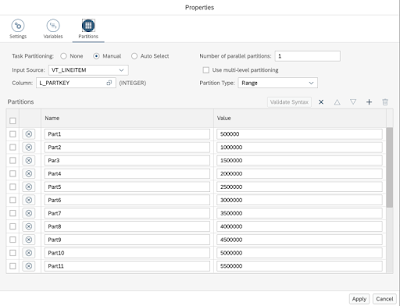

Open the flowgraph and click the “Properties” icon (top right gear icon).

Go to “Partitions” tab to configure the partition settings. As the LINEITEM table has a sequential number column “L_PARTKEY”, use this to create a Range Partition. Create 20 partitions and set “Values” to the maximum value of “L_PARTKEY” for the partition. (Since the table has 300M records, each partition has 15M records)

You can process the partitions in parallel, but this time I will process them serially. Choose “Manual” to “Task Partitions” and set “1” to “Number of parallel partitions”.

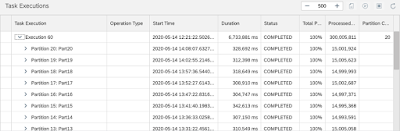

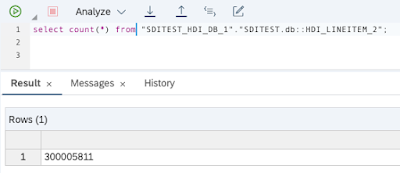

Now, execute the flowgraph. Completed successfully!

300M data was transferred from On-Premise SAP HANA to SAP HANA Cloud.

4. Create Partitions on Target Table

According to “6.1.4 Target Table Partitioning” of Best Practices Guide, it also mentions partitioning the target table. If the number of table data is large, it is necessary to consider partitioning the target table. By setting partition, the delta merge process is performed for each partition, so it is possible to execute the delta merge process with lower resources.

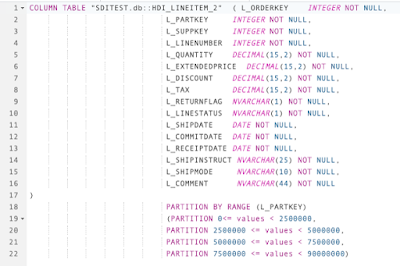

You can create partitions with .hdbtable file as follows.

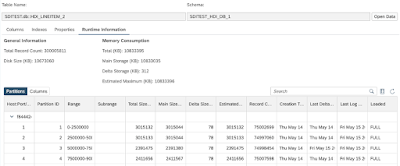

Data is stored in each partitions.

No comments:

Post a Comment