Description about the activity performed:

This document describes how to downsize an existing SAP HANA VM on Azure to a VM with a lower hardware configuration. Reducing the VM size in Azure is relatively easy, but because HANA is an in-memory database, its disks are usually sized in proportion to the VM memory. This document outlines how to right-size both the VM and the disks.

High level steps:

◉ Gathering File System Information from Hana Database

◉ Gathering Memory Statistics from Hana Database

◉ Data Footprint Calculation

◉ Cost Calculation for New Hardware

◉ Adding New disks to VM

Gathering File System Information from Hana Database:

Check the current file system layout at the OS level.

This shows the type of each file system, the current usage of every file system associated with the HANA application, and the size currently allocated to each of them.

The following file systems must be considered when you downsize a HANA VM.

/usr/sap/SID

/hana/shared

/hana/data/SID

/hana/log/SID

/backup

The layout table is compiled from the combined output of the following commands.

df -h --> list the FS in the VM

lvdisplay --> Logical Volumes available in the VM

vgdisplay --> Volume groups available in the VM

pvdisplay --> Physical Volumes available in the system

lsscsi --> Display LUN numbers to disk name mapping

lsblk --> Display disk names to xxx mapping
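As a starting point, the usage check for the file systems listed above could be wrapped in a small helper. This is only a sketch: the function name `hana_fs_usage` and the way the SID is passed in are assumptions for illustration, not part of the original procedure.

```shell
# Sketch: report type, size and usage of the HANA-related file systems.
# hana_fs_usage is a hypothetical helper name; pass your own SID.
hana_fs_usage() {
  sid="$1"
  for mp in "/usr/sap/$sid" /hana/shared "/hana/data/$sid" "/hana/log/$sid" /backup; do
    if [ -d "$mp" ]; then
      # -h: human-readable sizes, -T: include the file system type
      df -hT "$mp"
    else
      echo "missing: $mp"
    fi
  done
}
```

Call it as `hana_fs_usage SID` with the real system ID; mount points that do not exist on the host are reported as missing instead of causing an error.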

Gathering Memory Statistics from Hana Database:

To downsize the current VM, it is important to first collect information on the current memory usage of the HANA application.

Read through the terms below to understand the different memory concepts used by the HANA application before you proceed.

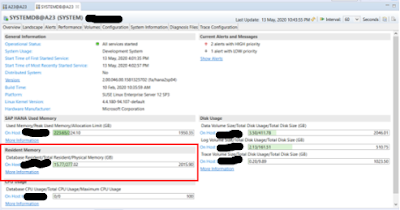

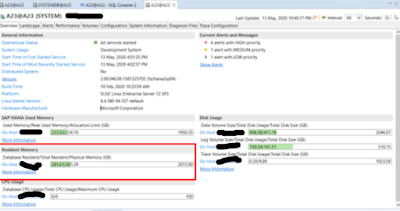

Resident Memory --> the physical memory in operational use by a process.

Pool Memory --> when SAP HANA starts, it requests a significant amount of memory from the OS for this memory pool, which stores all the in-memory data and system tables, thread stacks, temporary computations and other structures needed for managing the HANA database.

Only part of the pool memory is used initially. When more memory is required for table growth or temporary computations, the SAP HANA memory manager obtains it from this pool. When the pool cannot satisfy the request, the memory manager increases the pool size by requesting more memory from the OS, up to the predefined allocation limit. Once computations complete or tables are dropped, the freed memory is returned to the memory manager, which recycles it into its pool.

SAP HANA Used Memory --> the total amount of memory currently used by SAP HANA processes, including the currently allocated pool memory. The value drops when memory is freed after each temporary computation and rises when more memory is needed and requested from the pool.

To collect the memory statistics, first check the RAM size of the server at the OS level, then use HANA Studio to collect the memory statistics for the System DB and each Tenant DB.

free -g --> collect memory information at the OS level
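If the RAM size needs to be picked up in a script, the total can be read from the `Mem:` row of the `free -g` output. A minimal sketch, where `total_ram_gib` is a hypothetical helper name:

```shell
# Sketch: extract the total RAM (second column of the "Mem:" row)
# from `free -g` style output read on stdin.
# total_ram_gib is a hypothetical helper name.
total_ram_gib() {
  awk '/^Mem:/ { print $2 }'
}
```

Usage would be `free -g | total_ram_gib`. Note that `free -g` reports whole units, so small amounts are rounded down.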

Hana Resident memory occupied by System DB

Hana Resident memory occupied by Tenant DB

Data Footprint Calculation:

The space the HANA application occupies at the file system level is fragmented data that has not yet been defragmented. Calculating the data footprint of your HANA DB gives the actual data size at the OS level. To calculate it, follow the procedure below.

Before defragmenting the /hana/data disk, take a complete data backup of both the System DB and the Tenant DB. Make sure the application is stopped at this stage.

Use the command below to defragment the disk. The index server is what must be defragmented, so make sure you provide the hostname and port number of the index server.

The port number of the index server can be found on the landscape tab, which you reach by right-clicking the SYSTEMDB entry in the landscape panel of HANA Studio and opening Configuration & Administration.

alter system reclaim datavolume 'hostname:portnumber' 120 defragment

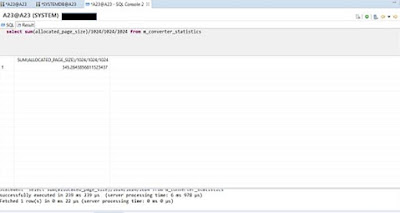

Now use the below command to calculate the data footprint.

select sum(allocated_page_size)/1024/1024/1024 from m_converter_statistics

SAP suggests the following formula to calculate the RAM size for the new hardware.

1.5 * SOURCE_DATA_FOOTPRINT

1.5 * 345GB = 517.5GB, rounded up to 518GB

In our scenario the new system will be used by only 10 concurrent users and the load on the system will be very low, so we decided to go with a RAM size of 256GB for the new server. Otherwise, choose the next higher available memory size above the result of the formula.
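The RAM formula above is easy to script. The sketch below assumes the footprint is given in GB and rounds the result up to a whole GB; `ram_for_footprint` is a hypothetical helper name.

```shell
# Sketch: RAM estimate = 1.5 * source data footprint, rounded up to whole GB.
# ram_for_footprint is a hypothetical helper name; input is the footprint in GB.
ram_for_footprint() {
  awk -v f="$1" 'BEGIN { v = 1.5 * f; r = int(v); if (r < v) r++; print r }'
}

ram_for_footprint 345   # prints 518
```

The result is only the formula's suggestion; the expected user load can justify a smaller size, as in the 256GB decision described above.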

SAP recommends sizing the file systems mentioned above in the following proportions.

/hana/data --> 1.2 * RAM (application specific), 1 * RAM (not application specific)

/hana/log --> ½ of RAM for systems <= 512GB, minimum 512GB for systems > 512GB

/hana/shared --> 1 * RAM, max 1TB (single node)

/backup --> datasize + logsize

Root file system --> 60GB, btrfs (B-tree file system)

/usr/sap --> 50GB (10GB min recommended)

Swap space 2GB
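The proportions listed above can be turned into a quick calculation. This is a sketch under stated assumptions: it uses the application-specific factor of 1.2 for /hana/data, takes the 512GB minimum for /hana/log on larger systems, and rounds up to whole GB. The helper name `hana_disk_sizes` is hypothetical.

```shell
# Sketch: derive disk sizes (GB) from RAM (GB) using the ratios listed above.
# hana_disk_sizes is a hypothetical helper; it assumes the application-specific
# factor of 1.2 for /hana/data and rounds up to whole GB.
hana_disk_sizes() {
  ram="$1"
  data=$(( (ram * 12 + 9) / 10 ))     # 1.2 * RAM, rounded up
  if [ "$ram" -le 512 ]; then
    log=$(( (ram + 1) / 2 ))          # half of RAM, rounded up
  else
    log=512                           # minimum 512GB above 512GB RAM
  fi
  if [ "$ram" -gt 1024 ]; then
    shared=1024                       # capped at 1TB (single node)
  else
    shared="$ram"                     # 1 * RAM
  fi
  backup=$(( data + log ))            # data size + log size
  echo "data=$data log=$log shared=$shared backup=$backup"
}

hana_disk_sizes 256   # prints data=308 log=128 shared=256 backup=436
```

These are minimum values; the disks actually attached can be larger to match the available Azure disk sizes.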

Cost Calculation for New Hardware:

Initially used VM & disks before downsizing

M128s (128 vcpus, 2048 GiB memory)

1024GB Premium SSD Disk --> /backup

64GB Premium SSD Disk --> /usr/sap/SID

2048GB Premium SSD Disk --> /hana/data

2048GB Premium SSD Disk --> /hana/data

2048GB Premium SSD Disk --> /hana/data

512GB Premium SSD Disk --> /hana/log

512GB Premium SSD Disk --> /hana/log

2048GB Premium SSD Disk --> /hana/shared

30GB Premium SSD Disk --> /root

Notice that there are three disks for data and two for logs; these are striped disks.

On Azure, several disks can be combined into one striped volume that delivers the combined IOPS and throughput of all its disks.

For example (the figures below are illustrative, not actual Azure disk specifications):

A single 512GB P10 disk with IOPS and throughput of 5000 and 60 may cost $400 a month.

A 512GB P6 disk with IOPS and throughput of 2000 and 20 may cost $100 a month.

Striping three P6 disks gives 3 * 2000 = 6000 IOPS and 3 * 20 = 60 throughput for 3 * $100 = $300 a month, with at least the same 512GB of capacity, so striping saves $100 a month compared with the single P10 disk.

SAP suggests striped disks only for /hana/data & /hana/log for improved performance.

At the time of writing, you can use a stripe size of 256K for the data disks and 32K for the log disks.
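The arithmetic behind the striping example can be sketched as follows. The numbers are the illustrative ones from the example, not real Azure prices or limits, and reading the larger figure as IOPS and the smaller as throughput is an assumption. `striped_totals` is a hypothetical helper name.

```shell
# Sketch: aggregate figures for N identical disks striped into one volume.
# striped_totals is a hypothetical helper; arguments are the disk count and
# the per-disk IOPS, throughput and monthly cost (illustrative numbers only).
striped_totals() {
  n="$1"; iops="$2"; tput="$3"; cost="$4"
  echo "iops=$(( n * iops )) throughput=$(( n * tput )) cost=$(( n * cost ))"
}

striped_totals 3 2000 20 100   # prints iops=6000 throughput=60 cost=300
```

Comparing the printed totals with the single larger disk shows whether striping is cheaper for the same or better performance.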

Adding New disks to VM:

Below is the RAM of the new VM.

LUNs have been assigned to the VM from the Azure portal as follows.

/hana/shared

hostname_1a, 256G, LUN: 8

/hana/data

hostname_3a, 512G, LUN: 9

hostname_4a, 512G, LUN: 10

hostname_5a, 512G, LUN: 11

/hana/log

hostname_6a, 256G, LUN: 12

hostname_7a, 256G, LUN: 13

/backup

hostname_8a, 512G, LUN: 14

The command below shows which LUN is mapped to which disk device.

hostname:~ # lsscsi

[2:0:0:0] disk Msft Virtual Disk 1.0 /dev/sda

[3:0:1:0] disk Msft Virtual Disk 1.0 /dev/sdb

[5:0:0:1] disk Msft Virtual Disk 1.0 /dev/sde

[5:0:0:8] disk Msft Virtual Disk 1.0 /dev/sdc

[5:0:0:9] disk Msft Virtual Disk 1.0 /dev/sdg

[5:0:0:10] disk Msft Virtual Disk 1.0 /dev/sdd

[5:0:0:11] disk Msft Virtual Disk 1.0 /dev/sdi

[5:0:0:12] disk Msft Virtual Disk 1.0 /dev/sdf

[5:0:0:13] disk Msft Virtual Disk 1.0 /dev/sdj

[5:0:0:14] disk Msft Virtual Disk 1.0 /dev/sdh
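Because the device names do not follow the LUN order, it helps to extract the LUN-to-device pairs explicitly before creating any volumes. A minimal sketch, assuming the LUN is the last number in the bracketed tuple as in the output above; `lun_to_device` is a hypothetical helper name.

```shell
# Sketch: print "LUN device" pairs from lsscsi output read on stdin.
# The LUN is the last field of the [host:channel:target:lun] tuple.
# lun_to_device is a hypothetical helper name.
lun_to_device() {
  awk '{ split($1, a, ":"); sub(/\]/, "", a[4]); print a[4], $NF }'
}
```

Running `lsscsi | lun_to_device` on the output above would list, for example, LUN 12 against /dev/sdf and LUN 13 against /dev/sdj, the two disks assigned to /hana/log.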

Command to create a physical volume (run it once for each new disk)

pvcreate /dev/sdp

Explanation

| pvcreate | Command |

| /dev/sdp | Name of Physical Volume |

Command to create volume group

vgcreate vg_log_new /dev/sdm /dev/sdp

Explanation

| vgcreate | Command |

| vg_log_new | Name of the volume group |

| /dev/sdm /dev/sdp | Space delimited list of disk names |

Command to create logical volume

lvcreate -i2 -I32K -L256G -n lv_log_new vg_log_new

Explanation

| lvcreate | Command |

| -i2 | Create a stripe set of two |

| -I32K | Stripe size is 32K |

| -L256G | Final size of volume in GB |

| -n lv_log_new | Name of new logical volume |

| vg_log_new | Source volume group |

Once the logical volume is created, use the command below to create a file system on it.

mkfs.xfs /dev/vg_log_new/lv_log_new

Explanation

| mkfs.xfs | Command |

| /dev/vg_log_new/lv_log_new | Complete path of Logical volume to be added to fs |
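The four commands above follow the same pattern for every striped volume, so the sequence can be previewed before anything is executed. The sketch below only prints the commands (a dry run) and never runs them; `plan_striped_volume` and its argument order are assumptions for illustration.

```shell
# Sketch: print (not run) the LVM command sequence for one striped volume.
# plan_striped_volume is a hypothetical helper.
# Arguments: volume group, logical volume, stripe size, volume size, disks...
plan_striped_volume() {
  vg="$1"; lv="$2"; stripe="$3"; size="$4"
  shift 4
  for dev in "$@"; do
    echo "pvcreate $dev"
  done
  echo "vgcreate $vg $*"
  # The stripe count equals the number of disks in the volume group
  echo "lvcreate -i$# -I$stripe -L$size -n $lv $vg"
  echo "mkfs.xfs /dev/$vg/$lv"
}
```

For the log volume shown above, `plan_striped_volume vg_log_new lv_log_new 32K 256G /dev/sdm /dev/sdp` prints the same pvcreate, vgcreate, lvcreate and mkfs.xfs lines, which can be reviewed and then run as root.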

Now rename the old file system entries in the /etc/fstab file, add entries for the new file systems and mount them.

Copy the files into the respective directories with the cp or rsync command.

The downsized VM is now ready to be started.
