The agenda for this blog is to demonstrate SAP data replication to the Snowflake platform to build an independent data warehousing scenario.

As we all know, Santa Clause runs on SAP, and his North pole’s supply chain data is growing every year, and the Elfs IT team started exploring Industry 4.0, Machine learning, and tractions for serverless services, including high-performance data warehouses like a Snowflake, Azure Synapse with fully automated, zero administration, and combined with a data lake so that they could focus on increasing Line of Business(LoB) on SAP S/4HANA without any trouble.

Sounds very interesting…Let’s keep scrolling to see what’s next!

1. Preamble

According to Gartner(my favorite one!), the public cloud services market continues to grow, largely due to the data demands of modern applications and workloads. And data is one of the leading factors in this transition. In recent years, organizations have struggled with processing big data, sets of data large enough to overwhelm commercially available computing systems. (Source: Gartner news)

We know that all Data engineers & Data scientists love to use SQL to solve all kinds of data problems, and it gives them a full grip to manipulate the data views. To get insights into this data, you’d extract and load the data from various sources into a data warehouse or data lake. To connect or build what can sometimes be fairly complex ETL/ELT data pipelines, enterprises prefer to use modern tools like Azure Data Factory, Talend, Stitch, Qlik, and many more… Depending on your architecture and data requirements, you might choose one or multiple ETL/ELT tools for your use case. I’m going to leverage my favorite Azure Service – Azure Data Factory(ADF) – Which is Microsoft’s fully managed ‘serverless data integration tool.

It allows developers to build ETL/ELT data processes called pipelines, with drag and drop functionality using numerous pre-built activities. There are dozens of native connectors for your data sources and destinations from on-prem file systems and databases, such as Oracle, DB2, and SQL Server to applications such as Dynamics 365, and Salesforce to cloud solutions such as AWS S3, Azure Data Lake Storage, and Azure Synapse. It delivers the most significant value when your data pipelines provide the fuel to power analytics efforts. We know that without data, you have nothing to load into your system and nothing to analyze. Azure Data Factory provides 90+ built-in connectors allowing you to easily integrate with various data stores regardless of the variety of volume, whether they are on-premises or in the cloud. Snowflake connector is the latest one added and available as well. I’m still exploring it!

The aim is to load our SAP data on Snowflake in batches or near to real-time option using Azure Data Factory using the plug & play method.

For newbies to the Snowflake, it is a cloud-based data warehouse solution offered on all big hyperscalers like Microsoft Azure, AWS & GCP. Once you’ve configured your account and created some tables using your Snowflake account, you most likely have to get data into your data warehouse. But sometimes, you also have to export data from Snowflake to another source, for example providing data for third parties. You always receive a 30-Day Free Trial with $400 credit from Snowflake while opening a new trial account to explore and test your data, and it’s a huge advantage for anyone looking to explore for the first time, just like me!

2. High-Level Architecture scenarios on Azure

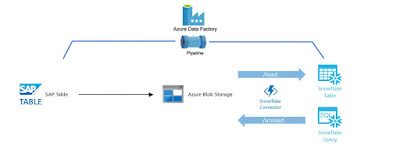

SAP data to Snowflake Cloud Data warehouse on Azure

(SAP specific scenario, we going to explore in this blog)

SAP Data >Azure Blog Storage >Snowflake using SAP Table Connector on Azure Data Factory(ADF)

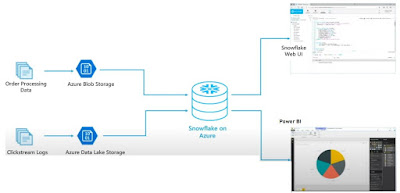

◉ Example of ERP/CRM Order processing and Social data landing on Snowflake on Azure with integrated dashboards to leverage

Source: Snowflake

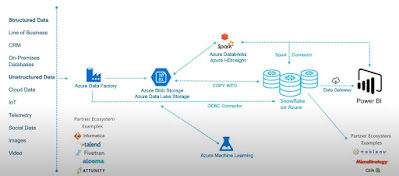

All Structured/unstructured data flow to Snowflake Cloud Data warehouse on Azure

(End to End scenario with 3rd party options to explore!)

Source: Snowflake

3. Getting Started

For this SAP to Snowflake integration scenario, the following Azure services are used: Azure Blob storage, Azure Data Factory (Linked Services, Datasets, and Data flows) and Snowflake account on Azure Cloud

Let’s list the prerequisites in order:

◉ Access to SAP App Table (ECC, S/4HANA, Netweaver. Minimum SAP_BASIS701 or above)

◉ Azure Subscription with running blob storage and Azure Data Factory access

◉ Snowflake Subscription with AccountAdmin ($400 credit for a new account w/o credit card!)

◉ Wind1ows Remote desktop with admin access (It’s optional, I used my laptop!)

◉ 64-bit SAP Connector for Microsoft .NET 3.0 from SAP’s website

3. Steps

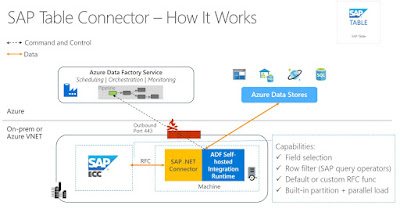

SAP Table Connector utility leveraged to fetch the SAP table in this run demonstration.

Source: Microsoft

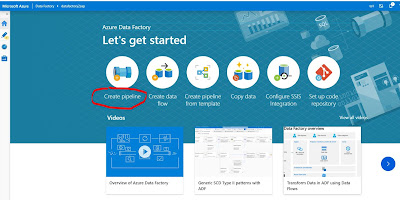

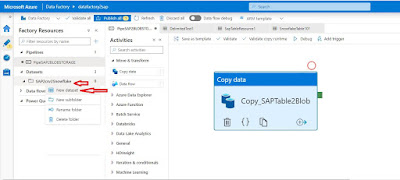

1.Open Azure Data Factory in Azure Portal and create a pipeline

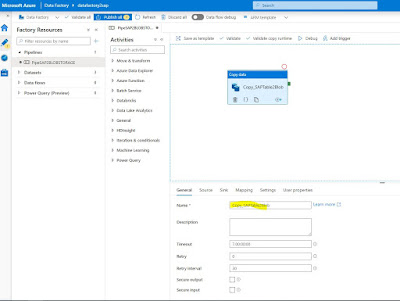

2. Right-click and create under the “Pipelines” section, provide the name: Copy_SAPTable2Blob of the pipeline as shown in the picture below.

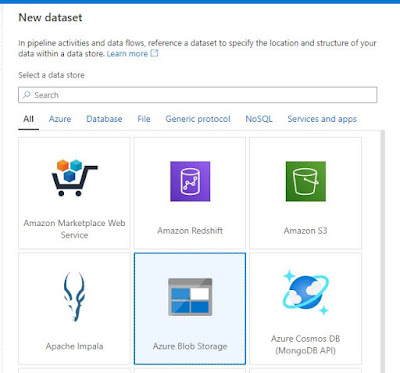

3. Create a new folder: SAP2csv2Snowflake under the Datasets section, and right-click on it, and create a new dataset: Azure Blob Storage as shown below in the screenshots.

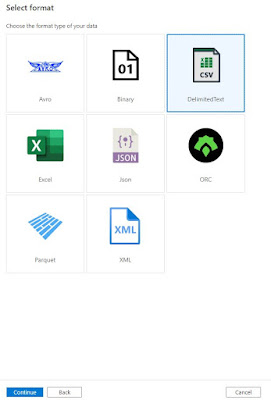

4. It will appear on the right panel of Portal for selection. Select Azure Blog Storage and then subsequently pick up CSV format type for the dataset.

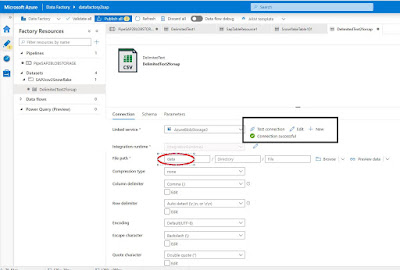

Select DelimitedText CSV as shown below and click the “Continue” button.

Snowflake reads data in certain formats like CSV, Excel in general.

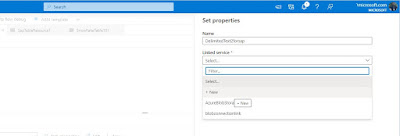

5. Set the properties name of the CSV file “DelimitedText2sap” and link the services by clicking the +New option.

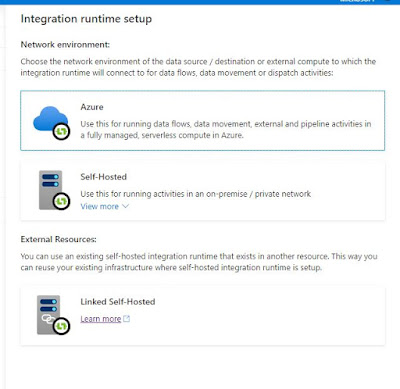

6. Select “Azure” under Integration runtime setup and click on Create. This step required to install .Net Connector on your local machine or Remote desktop (from Azure Portal)

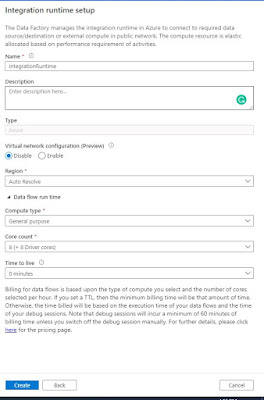

7. Provide Integration runtime name and other options as shown below

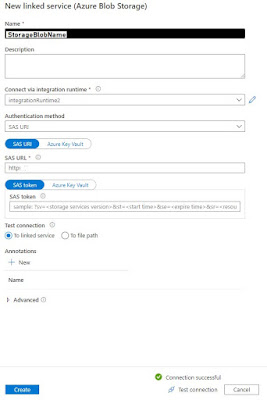

8. Connect Blob storage using the SAS URI Authentication method. (Snowflake requirement!)

9. Connection established for Blob Storage for CSV file.

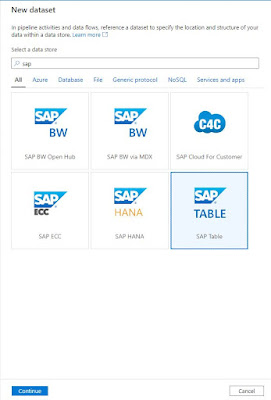

10. Create New SAP dataset for SAP Table Connection

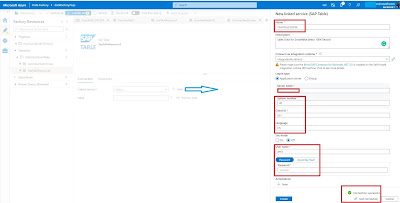

11. Click on “+New” Linked Connection and fill in SAP login details and run “Test Connection” and connection established with SAP application now.

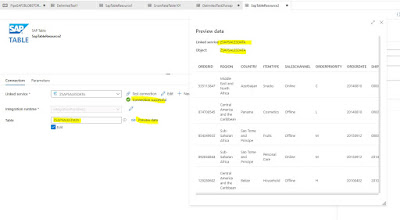

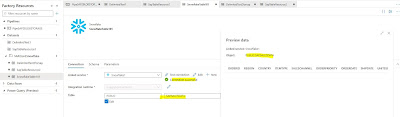

12. Now check the “test connection” and Table – “Preview data” to ensure SAP Connection and data is visible.

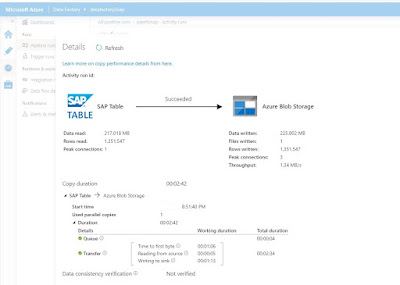

SAP Table – ZSAPSALESDATA contains 100K records for the initial run then we’ll trigger 1 Million records as the second round of demo.

(Imp. Note, use SAP Table name always in upper case else it might not able to fetch data from SAP App)

13. Login to your snowflake trial account and create the SAP table structure with the below SQL Script.

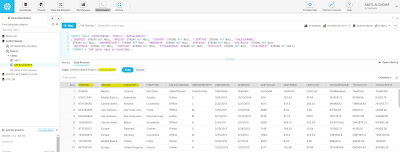

CREATE TABLE "SAPDATABASE"."PUBLIC"."SAPSALESDATA" ("ORDERID" STRING NOT NULL, "REGION" STRING NOT NULL, "COUNTRY" STRING NOT NULL, "ITEMTYPE" STRING NOT NULL, "SALESCHANNEL" STRING NOT NULL, "ORDERPRIORITY" STRING NOT NULL, "ORDERDATE" STRING NOT NULL, "SHIPDATE" STRING NOT NULL, "UNITSSOLD" STRING NOT NULL, "UNITPRICE" STRING NOT NULL, "UNITCOST" STRING NOT NULL, "TOTALREVENUE" STRING NOT NULL, "TOTALCOST" STRING NOT NULL, "TOTALPROFIT" STRING NOT NULL) COMMENT = 'SAP sales data in Snowflake';

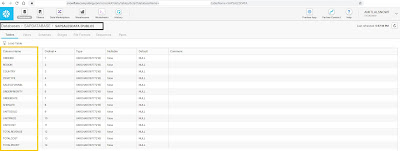

Check Table structure created successfully.

14. Click on Snowflake dataset – Verify by Test connection and preview data, you can see the structure of the table in preview data as shown below.

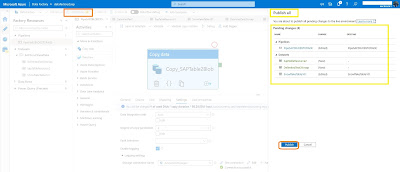

15. All 3 datasets are created successfully and time to publish all.

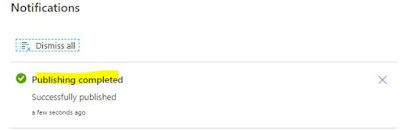

Click on Publish All button on the header. And you will be receiving the notification of activation.

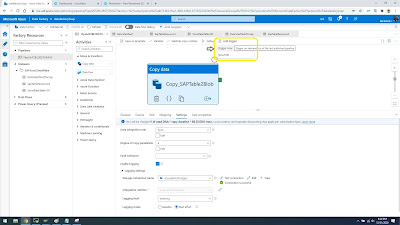

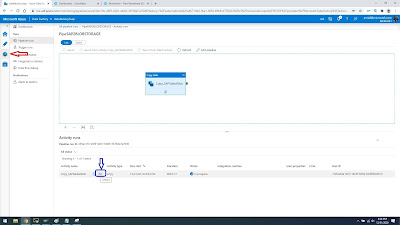

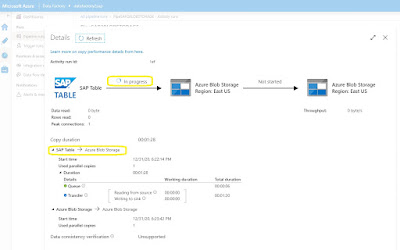

15. Click on Trigger now after Publishing completed. And monitor the load from SAP Table to Azure Blog Storage.

16. Go to Monitor on the Left panel and select the Details link to capture the real-time loading.

17. Monitor the flow of SAP Table in progress!

18. Once SAP Table is loaded into Blog, verify the details in blog storage if the csv/txt file is available.

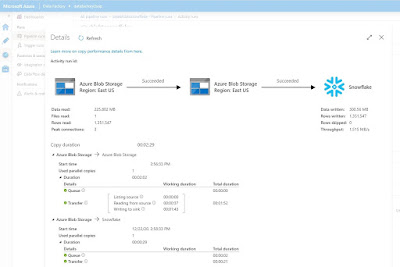

19.Once Blog storage is connected to Snowflake, you can trigger a pipeline using snowflake as a sink. (created on step #14 earlier)

20. Verify data in snowflake account, the table created and data loaded

21. Final data preview on Snowflake account! Now we can manipulate the data to the next level based on limitless analytics requirements in the future.

No comments:

Post a Comment