Delivering an SAP implementation project is heavy lifting even when you implement S/4HANA or the SAP IBP full suite on its own. It is an order of magnitude more complex when you implement S/4HANA and the IBP full suite together in a greenfield environment, and more complex still if you are implementing IBP Order Based Planning alongside S/4HANA against complex business requirements such as configurable products.

I would like to share lessons learned in planning User Acceptance Testing (UAT) across S/4HANA and IBP: how to plan when testers are shared across S/4 and IBP, how to mitigate the resulting bottleneck risk, how to develop a test plan for a highly complex solution, and how to balance the conflicting needs of business teams and IT/PMO teams when it comes to substance vs. schedule.

Business teams’ satisfaction drives solution acceptance, which drives project success and value. Invest the time and energy needed to plan and execute your UAT well. Do not fall into the trap of rehashing the System Integration Test (SIT) cycle with the same core team users one more time and calling it UAT. UAT is not a ceremonial activity to check off your list before you rush into End User Training and Final Cutover to hit that immovable go-live date. Remember this fundamental choice: sweat in UAT or bleed in hypercare.

Do not shortchange the UAT planning process. Test planning is 50% or more about listening to your superusers. Which test cases are important to them, and why? You want to capture as much of their day-in-the-life activity as possible during UAT. Test cases are not a loose collection of ideas that your consultants or business teams come up with in a single meeting so the team can just get on with it.

When S/4HANA and the IBP full suite are deployed together, you are looking at a highly complex solution whose scope needs to be properly decomposed to ensure both that the test cases are comprehensive and that each test case is testable. Test sessions should not become unending meetings from hell.

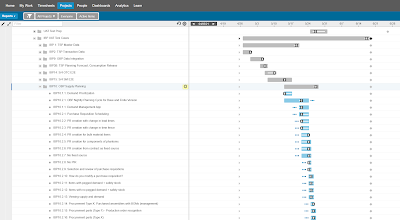

See the example below of how to decompose IBP test cases into test areas, test processes, test cases, and test steps.

Have a clear definition of acceptance. Decompose test cases into test steps with expected results. Align with the business on all aspects of the expected results, so that a test case is accepted automatically when the actual results match them. This goes beyond functionality and should include organizational change management (OCM) aspects such as understanding of the change, user experience, and system performance.
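To make the decomposition and acceptance rule concrete, here is a minimal Python sketch. The class names (`TestArea`, `TestProcess`, `TestCase`, `TestStep`) and the sample content are hypothetical, not the schema of LiquidPlanner or any other tool; the only rule encoded is the one above: a test case counts as accepted when every step's actual result matches its agreed expected result.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TestStep:
    description: str
    expected: str                 # expected result agreed with the business
    actual: Optional[str] = None  # recorded by the tester during execution

    def passed(self) -> bool:
        return self.actual is not None and self.actual == self.expected


@dataclass
class TestCase:
    name: str
    steps: list[TestStep] = field(default_factory=list)

    def accepted(self) -> bool:
        # Accepted only when every step was executed and matches its expected result
        return bool(self.steps) and all(step.passed() for step in self.steps)


@dataclass
class TestProcess:
    name: str
    cases: list[TestCase] = field(default_factory=list)


@dataclass
class TestArea:
    name: str
    processes: list[TestProcess] = field(default_factory=list)

    def open_cases(self) -> list[str]:
        # Test cases in this area that are not yet accepted
        return [case.name for process in self.processes
                for case in process.cases if not case.accepted()]


area = TestArea("Supply Planning", [
    TestProcess("Order-based planning run", [
        TestCase("Plan demand for a configurable product", [
            TestStep("Run the OBP planning run", "All demands planned", "All demands planned"),
            TestStep("Review pegging in the planning view", "Demand pegged to supply"),
        ]),
    ]),
])
print(area.open_cases())  # ['Plan demand for a configurable product']
```

The second step has no actual result yet, so the whole test case stays open; acceptance is derived from the steps rather than declared separately.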

Do not plan in a PMO ivory tower. Communicate, collaborate, communicate. Have a structured process to effectively and visibly incorporate business teams’ feedback during planning. Alignment during the planning stage goes a long way toward mutual understanding and flexibility across business and IT/PMO teams during UAT execution.

Tester selection and recruiting are key. Do not simply revert to having the same core team of superusers run the final acceptance of the solution: they are necessary, but not sufficient. Fresh eyes are key to judging solution efficacy. Prepare your superusers well for this role: they need the teaching skills and clarity of thought to communicate key planning concepts, must act as the first line of support when end users struggle to edit planning views or understand OBP planning results, and must handle objections from the inevitable change-resistant crowd.

Lesson # 2: Invest in Robust Collaboration Platforms Purpose-Built for Collaboration and Dynamic Planning

Still planning and tracking with online or offline spreadsheets? Dates entered in spreadsheets produce static plans, which simply do not scale during execution in dynamic, complex environments. Invest in a web-based platform like LiquidPlanner that delivers team collaboration capabilities together with dynamic scheduling. LiquidPlanner generates a bottom-up predictive range schedule based on test case prioritization, resource bandwidth, and test effort. See a sample snapshot below.
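This is not LiquidPlanner's algorithm; the Python sketch below only illustrates the general idea of a bottom-up range schedule under simple assumptions: each tester works through their test cases in priority order, each test case carries an optimistic and a pessimistic effort estimate in hours, and `bandwidth` is a hypothetical number of hours per day each tester can give to UAT. The result is an earliest and a latest finish day per test case instead of a single date.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class ScheduledTest:
    name: str
    priority: int       # 1 = highest priority
    tester: str
    effort_low: float   # optimistic effort estimate, hours
    effort_high: float  # pessimistic effort estimate, hours


def range_schedule(tests: list[ScheduledTest],
                   bandwidth: dict[str, float]) -> dict[str, tuple[int, int]]:
    """Return {test name: (earliest finish day, latest finish day)}.

    Each tester's queue is worked in priority order; bandwidth maps a tester
    to the hours per day they can spend on UAT.
    """
    low_done: dict[str, float] = {}
    high_done: dict[str, float] = {}
    finish: dict[str, tuple[int, int]] = {}
    for test in sorted(tests, key=lambda x: (x.priority, x.name)):
        # Accumulate optimistic and pessimistic effort separately per tester
        low_done[test.tester] = low_done.get(test.tester, 0.0) + test.effort_low
        high_done[test.tester] = high_done.get(test.tester, 0.0) + test.effort_high
        per_day = bandwidth[test.tester]
        finish[test.name] = (math.ceil(low_done[test.tester] / per_day),
                             math.ceil(high_done[test.tester] / per_day))
    return finish


tests = [
    ScheduledTest("OBP planning run", 1, "Ana", 3, 6),
    ScheduledTest("Sales order ATP check", 2, "Ana", 2, 4),
    ScheduledTest("Supply plan review", 1, "Raj", 4, 8),
]
print(range_schedule(tests, {"Ana": 4.5, "Raj": 3}))
# {'OBP planning run': (1, 2), 'Supply plan review': (2, 3), 'Sales order ATP check': (2, 3)}
```

Because the schedule is recomputed from priorities, effort, and bandwidth, changing any one of them (a tester pulled out to fight a fire, a re-estimated test case) shifts every downstream range automatically, which is exactly what a static spreadsheet cannot do.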

LiquidPlanner enables testers to capture test case execution results and detailed feedback, along with attachments, in a well-organized test case library. This enables seamless collaboration between business testers and the IT team and consultants across test execution and defect resolution.

A map of test case types and resource requirements is key. There will be S/4HANA 4-wall test cases, IBP 4-wall test cases, and integrated S/4-IBP end-to-end test cases. Manage them in separate test tracks for more flexibility in test scheduling. Identify the tester groups that will be a bottleneck to effective scheduling, and dedicate individual testers to test tracks.
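One way to surface bottleneck testers early is to total each tester's planned hours across tracks and compare them with available capacity. The sketch below is a hypothetical helper, assuming assignments are kept as (track, tester, hours) tuples; it flags testers who are over capacity or shared across more than one track, the two situations that make dedicated test tracks hard to schedule.

```python
from __future__ import annotations

from collections import defaultdict


def find_bottlenecks(assignments: list[tuple[str, str, float]],
                     capacity_hours: dict[str, float]) -> dict[str, list[str]]:
    """assignments: (test track, tester, planned hours) tuples."""
    load: dict[str, float] = defaultdict(float)
    tracks: dict[str, set[str]] = defaultdict(set)
    for track, tester, hours in assignments:
        load[tester] += hours
        tracks[tester].add(track)

    report: dict[str, list[str]] = {}
    for tester, hours in load.items():
        reasons = []
        available = capacity_hours.get(tester, 0.0)
        if hours > available:
            reasons.append(f"over capacity: {hours:g}h planned vs {available:g}h available")
        if len(tracks[tester]) > 1:
            reasons.append("shared across tracks: " + ", ".join(sorted(tracks[tester])))
        if reasons:
            report[tester] = reasons
    return report


assignments = [
    ("S/4 4-wall", "Maria", 20),
    ("S/4-IBP E2E", "Maria", 15),
    ("IBP 4-wall", "Tom", 18),
]
capacity = {"Maria": 30, "Tom": 25}
print(find_bottlenecks(assignments, capacity))
# {'Maria': ['over capacity: 35h planned vs 30h available', 'shared across tracks: S/4 4-wall, S/4-IBP E2E']}
```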

Schedule three test sessions of 60-90 minutes each per day, separated by 60-minute breaks, so testers have time to breathe and you avoid tester burnout. Hold a recap meeting at the end of each test day to ensure all feedback has been captured.
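As a small illustration of that daily rhythm, the helper below lays out three sessions with 60-minute breaks and an end-of-day recap. The 09:00 start and the 30-minute recap length are assumptions, and the sketch simply reuses the break length before the recap.

```python
from datetime import datetime, timedelta


def build_test_day(start="09:00", session_minutes=90, break_minutes=60,
                   sessions=3, recap_minutes=30):
    """Return (slot name, start HH:MM, end HH:MM) tuples for one test day."""
    if not 60 <= session_minutes <= 90:
        raise ValueError("test sessions should run 60-90 minutes")
    clock = datetime.strptime(start, "%H:%M")
    agenda = []
    for n in range(1, sessions + 1):
        end = clock + timedelta(minutes=session_minutes)
        agenda.append((f"Test session {n}", clock.strftime("%H:%M"), end.strftime("%H:%M")))
        clock = end + timedelta(minutes=break_minutes)  # breathing room between sessions
    # End-of-day recap to confirm all feedback has been captured
    recap_end = clock + timedelta(minutes=recap_minutes)
    agenda.append(("Recap", clock.strftime("%H:%M"), recap_end.strftime("%H:%M")))
    return agenda


for name, begins, ends in build_test_day():
    print(f"{begins}-{ends}  {name}")
# 09:00-10:30  Test session 1
# 11:30-13:00  Test session 2
# 14:00-15:30  Test session 3
# 16:30-17:00  Recap
```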

Test scheduling is one of the most important steps, yet it is often left to inefficient, manual, spreadsheet-based approaches that deliver static schedules, obsolete within the first week of testing. Understand the test case preparation and execution effort. Understand resource bottlenecks across testers and the functional or technical consultants who need to resolve defects quickly. Reserve one third of the test schedule duration for unplanned work: retesting to confirm that defects are resolved and that defect resolution under time pressure did not create new defects. This is especially a challenge with ABAP code in interfaces.
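The one-third rule is easy to misapply. Reading it as one third of the total UAT window (an interpretation; the text does not say whether the base is the total window or the planned work), the planned test work fills the remaining two thirds, so total duration = planned days / (1 - 1/3) = 1.5 × planned days. A 20-day plan therefore needs a 30-day window, not roughly 27 days. This sketch uses `Fraction` so the ceiling is not thrown off by floating-point rounding.

```python
from __future__ import annotations

import math
from fractions import Fraction


def uat_duration(planned_days: int,
                 unplanned_share: Fraction = Fraction(1, 3)) -> tuple[int, int]:
    """Return (total UAT days, buffer days) when unplanned_share of the
    total duration is reserved for retests and other unplanned work."""
    total = math.ceil(Fraction(planned_days) / (1 - unplanned_share))
    return total, total - planned_days


print(uat_duration(20))  # (30, 10)
print(uat_duration(25))  # (38, 13)
```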

Leverage automated dynamic scheduling tools to manage your test schedules against a volatile test environment: testers are pulled out to deal with fires, test sessions cannot be executed effectively due to poor prep, change requests are dressed up as defects, defect resolution takes more rounds of testing than expected, resource bottlenecks develop, impact of data health issues on solution quality becomes clear, new performance issues are found where none were expected, the list goes on.

(Static) plans are useless, but (dynamic) planning is quite useful. To understand your schedule risk, you need to manage all your activity threads loaded with resources and effort and scheduled in the right priority sequence. Push too hard on the UAT exit date and you risk solution acceptance. Yield too much to business users and you get testing paralysis, where no amount of testing is enough and every low-impact thread becomes a showstopper.

Many projects consume the schedule slack during build activities and approach UAT as a last-minute ceremonial step before pushing for go-live. This is a dangerous way to manage your OCM track. Business users need to test extensively and feel comfortable, without the PMO police micromanaging the test schedule to the point that end users start questioning the whole UAT process. Change is tough; rushed change is even worse.

Make sure to plan enough buffer in your project schedule for UAT and End User Training, given the risk of showstopper design gaps or interfaces that will not scale with full data sets. Never schedule UAT, End User Training, and go-live back to back without meaningful buffer. Things always take longer than they appear to during the planning stage.

Lesson # 3: Wow Testers with Business-Focused Test Content. Make it Easy to Test.

Balance User Acceptance Testing vs. Super User Training: testers need both to test and to understand the solution. Business testers will want to understand each S/4 master data object across all the IBP-relevant fields, and how those fields are used in IBP, so they know how to maintain this data going forward. Although it’s tempting to park deep-dive training as a post-UAT activity, this is not how superusers think. They need to deeply understand what they are accepting.

IBP Order Based Planning (OBP) is particularly unforgiving: it leaves demands unplanned if a single material anywhere in the multi-level BOM is missing a BOM or a Purchasing Info Record. Invest in data integrity check programs in S/4 so that the complex ruleset of field value combinations, based on intra- or inter-object dependencies, can be validated easily without painful manual checks. Watch this space for a future blog on an S/4 Data Integrity Checker Utility for IBP-relevant data.
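Here is a minimal sketch of the kind of check described above; it is not the utility mentioned in the text. It rests on heavily simplified assumptions: each material is either "make" or "buy", a make material needs a BOM, and a buy material needs a Purchasing Info Record. The `Material` structure and the material numbers are hypothetical; a real check would read S/4 tables and cover many more field combinations. It walks the multi-level BOM from the finished good and reports every gap with its path, so one missing record is not hidden behind a generic "demand unplanned" result.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Material:
    number: str
    procurement: str                 # "make" or "buy" (simplified procurement type)
    has_pir: bool = False            # Purchasing Info Record maintained?
    bom: Optional[list[str]] = None  # component numbers; None = no BOM maintained


def check_bom_tree(top: str, materials: dict[str, Material]) -> list[str]:
    """Walk the multi-level BOM below `top` and report gaps that would leave
    demand unplanned: make materials without a BOM, buy materials without a PIR."""
    issues: list[str] = []
    seen: set[str] = set()
    stack = [(top, (top,))]
    while stack:
        number, path = stack.pop()
        if number in seen:
            continue  # already checked via another branch (also guards against cycles)
        seen.add(number)
        route = " > ".join(path)
        mat = materials.get(number)
        if mat is None:
            issues.append(f"{route}: no material master")
        elif mat.procurement == "make":
            if mat.bom is None:
                issues.append(f"{route}: make material without BOM")
            else:
                stack.extend((comp, path + (comp,)) for comp in mat.bom)
        elif mat.procurement == "buy" and not mat.has_pir:
            issues.append(f"{route}: buy material without Purchasing Info Record")
    return sorted(issues)


materials = {
    "FG-100": Material("FG-100", "make", bom=["SA-200", "RM-300", "RM-400"]),
    "SA-200": Material("SA-200", "make"),              # BOM missing
    "RM-300": Material("RM-300", "buy", has_pir=True),
    "RM-400": Material("RM-400", "buy"),               # PIR missing
}
for issue in check_bom_tree("FG-100", materials):
    print(issue)
# FG-100 > RM-400: buy material without Purchasing Info Record
# FG-100 > SA-200: make material without BOM
```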

Invest in concept training. Create simple examples of which fields from which S/4 master data objects come together to realize the basic building blocks of planning, e.g., lead time scheduling or lot sizing in IBP OBP. Highlight scenarios in which data is read from the Purchasing Info Record vs. the Material Master, so users understand the data maintenance nuances of planning correctly in IBP. IBP is much more conceptual and much less transactional (S/4HANA is the reverse), so a transactional, script-driven approach to UAT test cases does not work well for it. Understand this dynamic and reflect it in your UAT.
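A concept-training example can be as small as the sketch below, which combines a lead time lookup, a lot-sizing rule, and backward scheduling. The precedence rule (a planned delivery time on the Purchasing Info Record overrides the Material Master value), the minimum lot size and rounding value logic, and the use of calendar days instead of a factory calendar are all illustrative assumptions, not a description of how IBP OBP actually reads S/4 data; validate the real behavior in your own system before teaching it.

```python
from __future__ import annotations

import math
from datetime import date, timedelta
from typing import Optional


def planned_delivery_days(material_master_days: int,
                          pir_days: Optional[int]) -> tuple[int, str]:
    # Assumed precedence for illustration: a value on the Purchasing Info
    # Record wins, otherwise fall back to the Material Master default
    if pir_days is not None:
        return pir_days, "Purchasing Info Record"
    return material_master_days, "Material Master"


def lot_size(requirement: int, minimum_lot: int, rounding_value: int) -> int:
    # Raise to the minimum lot size, then round up to a multiple of the rounding value
    qty = max(requirement, minimum_lot)
    if rounding_value > 0:
        qty = math.ceil(qty / rounding_value) * rounding_value
    return qty


def order_date(need_date: date, lead_time_days: int) -> date:
    # Backward scheduling in calendar days (no factory calendar in this sketch)
    return need_date - timedelta(days=lead_time_days)


days, source = planned_delivery_days(10, 14)
print(days, source)                         # 14 Purchasing Info Record
print(lot_size(130, 100, 50))               # 150
print(order_date(date(2024, 3, 15), days))  # 2024-03-01
```

Walking testers through which input came from which object (and what happens when the PIR value is blank) is the point of the exercise; the arithmetic itself is deliberately trivial.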

Provide a detailed test schedule with conference room and conference call details, business process and concept training decks, a data dictionary, test case scripts, testing tools and methodology decks, test data, work instructions on how to log in, etc., in a neatly organized online library. Testers appreciate organized information, because it reduces the need to cling to functional consultants, which inevitably slows down test execution. Set up a “well room” and a “sick room” as separate Zoom breakout rooms so that people struggling with login or software issues can get help without slowing down the testers who are ready to test.

Put clear test procedures in place. Sessions must begin and end on time. Appoint a clear session manager and set rules of engagement: ensure testers do not drift back to their day jobs during the test session.

Lesson # 4: Triage User Feedback and Set Clear Expectations Promptly. Not All Defects Are Created Equal.

Ask testers to provide feedback during the test session itself, while it is fresh in their minds. Triage feedback to separate true defects from change requests, tester understanding issues, master data issues, and interface job sequence failures. Not all ‘defects’ logged by testers are configuration or code issues. Assign a priority score to each test case; this helps both with scheduling the test case and with defaulting the priority of associated defects.
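The triage categories and the priority-defaulting rule map naturally onto a small data model. In this hypothetical sketch, each feedback item inherits the priority of the test case it came from unless the triage team overrides it, and each category is routed to an owner; the owner names are placeholders for your own team structure.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FeedbackType(Enum):
    DEFECT = "true defect"
    CHANGE_REQUEST = "change request"
    UNDERSTANDING = "tester understanding issue"
    MASTER_DATA = "master data issue"
    INTERFACE_JOB = "interface job sequence failure"


# Hypothetical owners for each feedback type
OWNER = {
    FeedbackType.DEFECT: "functional/technical consultant",
    FeedbackType.CHANGE_REQUEST: "change control board",
    FeedbackType.UNDERSTANDING: "superuser coaching",
    FeedbackType.MASTER_DATA: "data team",
    FeedbackType.INTERFACE_JOB: "integration team",
}


@dataclass
class Feedback:
    summary: str
    kind: FeedbackType
    test_case_priority: int                  # 1 = highest, set during test planning
    priority_override: Optional[int] = None  # set only if triage disagrees

    @property
    def priority(self) -> int:
        # Default the item's priority to that of the test case it came from
        if self.priority_override is not None:
            return self.priority_override
        return self.test_case_priority

    @property
    def owner(self) -> str:
        return OWNER[self.kind]


item = Feedback("Planned order missing for component", FeedbackType.MASTER_DATA, 1)
print(item.priority, item.owner)  # 1 data team
```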

If you are conducting UAT in a new test environment with converted data, you will need to execute your SIT test cases as well as data conversion validation before launching into the core UAT test cases, which focus more on the business process and day-in-the-life aspects.

Prepare for the inevitable differences of opinion regarding defects vs. change requests. Have a clearly thought-out and communicated policy for how the team will triage tester feedback to separate true defects from well-dressed change requests. It helps to assign delivery dates to change requests instead of just pushing business users to concede that something is not a true defect. Business users may sometimes develop a now-or-never mindset based on past experiences with large-scale transformations. IBP is released quarterly, and it is quite natural to grow the solution through business releases aligned with SAP’s quarterly innovation cycle.

Lesson # 5: Carefully Calibrate Your UAT Operating Model in These Times. Remote, In-Room, or Hybrid?

Securing the right number of testers, the right testers, and the right bandwidth from the right testers is where seasoned PMO practitioners shine.

Carefully plan your approach to virtual vs. in-office UAT execution. Despite the intuitive advantages of getting people together for UAT, it is quite challenging to practice social distancing when you need to look at a screen together. Hybrid setups (testers in the room, consultants remote) are also difficult for the remote participants, as onsite users’ speech is muffled by masks.

UAT cycles can take 4-5 weeks for most projects, and even longer for complex ones where S/4, IBP, and SAC are being co-deployed. You also need to carefully map test cases to test sessions. Do not schedule more than 3-4 test cases per session, even if they are lower-complexity test cases. Cram too many test cases into a single session and testers will feel overwhelmed and rushed, and the quality of their testing and feedback will naturally go down.
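Mapping test cases to sessions under that cap can be automated in a simple way: sort by priority and fill sessions of at most three or four cases. The sketch below assumes test cases are (ID, priority) pairs with 1 as the highest priority; the IDs are hypothetical, and the sketch ignores complexity and tester availability, which a real plan would also weigh.

```python
from __future__ import annotations


def plan_sessions(test_cases: list[tuple[str, int]],
                  max_per_session: int = 4) -> list[list[str]]:
    """Split (ID, priority) pairs into sessions, highest priority first (1 = highest)."""
    if not 1 <= max_per_session <= 4:
        raise ValueError("keep sessions to at most 3-4 test cases")
    ordered = sorted(test_cases, key=lambda tc: (tc[1], tc[0]))
    ids = [case_id for case_id, _ in ordered]
    return [ids[i:i + max_per_session] for i in range(0, len(ids), max_per_session)]


cases = [("TC-01", 2), ("TC-02", 1), ("TC-03", 1), ("TC-04", 3), ("TC-05", 2)]
print(plan_sessions(cases, max_per_session=3))
# [['TC-02', 'TC-03', 'TC-01'], ['TC-05', 'TC-04']]
```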

Do not ignore the human factor in your UAT strategy. Rewards and recognition are a powerful driver in most professions, and testers are no exception. Gamify the UAT cycle with rewards for most test cases executed on schedule, most defects logged, etc. to encourage tester participation. It need not be anything elaborate: group recognition with Amazon gift cards is plenty to show your testers that you see them as humans who need to feel the win in crossing the bridge to S/4 and IBP.

Invest in powerful dashboarding to surface insights: which test areas seem to have the most usability challenges, whether there is a pattern to the data errors, whether defects assigned to particular consultants are aging, etc.
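Two of those dashboard questions reduce to simple aggregations. The sketch below assumes a hypothetical issue log with a test area, an issue kind, the assigned consultant, and opened/closed dates; it computes the average age of open issues per consultant and counts a given issue kind per test area.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Issue:
    area: str
    kind: str                     # e.g. "usability", "data", "defect"
    consultant: str
    opened: date
    closed: Optional[date] = None


def open_age_by_consultant(issues: list[Issue], today: date) -> dict[str, float]:
    # Average age in days of still-open issues, per assigned consultant
    ages: dict[str, list[int]] = defaultdict(list)
    for issue in issues:
        if issue.closed is None:
            ages[issue.consultant].append((today - issue.opened).days)
    return {name: sum(days) / len(days) for name, days in ages.items()}


def count_by_area(issues: list[Issue], kind: str) -> Counter:
    # How often a given issue kind shows up in each test area
    return Counter(issue.area for issue in issues if issue.kind == kind)


today = date(2024, 3, 20)
issues = [
    Issue("Supply Planning", "usability", "Lee", date(2024, 3, 10)),
    Issue("Supply Planning", "usability", "Lee", date(2024, 3, 16)),
    Issue("Demand Planning", "data", "Kim", date(2024, 3, 18), date(2024, 3, 19)),
    Issue("Demand Planning", "usability", "Kim", date(2024, 3, 19)),
]
print(open_age_by_consultant(issues, today))  # {'Lee': 7.0, 'Kim': 1.0}
print(count_by_area(issues, "usability"))     # Counter({'Supply Planning': 2, 'Demand Planning': 1})
```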

Stay humble, keep learning, and be open to new ideas in these uncertain times; there are no best practices that you should apply blindly. UAT is all about users, and recognizing the human factor is key to acceptance and adoption.

Source: sap.com
