Overview

It is common to load data into HANA Cloud, Data Lake. Loads can also take a long time, and because they run through a database connection, it can be tedious to keep an open DBX (or client application) session to facilitate a long-running load from object storage.

A built-in event scheduler in SAP HANA Cloud, data lake relational engine can be used to schedule SQL functionality. Through this blog you will learn how to schedule data movement from a SAP HANA Cloud, HANA database to a SAP HANA Cloud, data lake relational engine instance using this event scheduler.

Let’s walkthrough the entire process from the Data Prep to the EVENT creation to Load Tables Asynchronously in HANA Cloud, data lake Relational Engine.

First step over here will be to import data into the SAP HANA Cloud, HANA Database. The primary step over here is to directly Import the TPCH data.csv file from this GitHub repository.

Step 1: Download the TPCH CUSTOMER DATASET from GitHub

1. To IMPORT data into your HANA DB Instance/Tables start by downloading the TPCH data file from this GitHub repository. Once you click on the link, it will redirect you to the GitHub TPCH data file directory. We can directly download the file from there.

2. The TPCH CUSTOMER Dataset will be an example for this blog. Save the customer.tbl file to customer.csv on your local machine.

Step 2: Create Table in HANA and Import the Data

Now, once the data file is downloaded. We need to create a Table in our HANA Database Instance. That’s where we will be importing the data into.

Open a SQL console to the HANA DB instance and create the following table. The following code will create a TPCH schema in the HANA DB instance and then create a CUSTOMER table within that schema.

CREATE SCHEMA TPCH;

CREATE TABLE TPCH.CUSTOMER (

C_CUSTKEY integer not null,

C_NAME varchar(25) not null,

C_ADDRESS varchar(40) not null,

C_NATIONKEY integer not null,

C_PHONE varchar(15) not null,

C_ACCTBAL decimal(15,2) not null,

C_MKTSEGMENT varchar(10) not null,

C_COMMENT varchar(117) not null,

primary key (C_CUSTKEY)

);

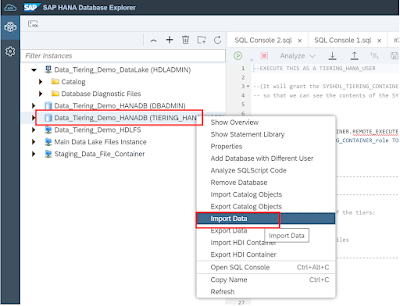

Once the table is created, right click on the HANA DB Instance and select on IMPORT Data.

On the “Import Type” page select “Import Data From”.

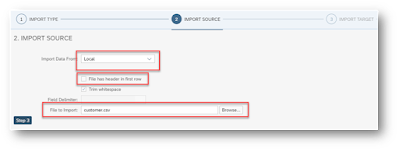

Proceed to the “Import Source” step. On the “Import Source” step select “Local” and then uncheck the “File has header in first row” box.

Then select the “customer.csv” file that was downloaded earlier.

On the “Import Target” step, select “Add to an existing table” and fill in the information for the TPCH.CUSTOMER table that was created.

We need to verify the table mapping and go to step 5 to finish the import. So, this is how we IMPORT data into the TPCH HANA DB TABLES.

Step 3: Set up a Remote Server

Next step is to Set up a Remote Server from SAP HANA Cloud, data lake to SAP HANA Cloud, HANA Database. Making a remote server connection between HDLRE and the HDB instance containing the data you want to retrieve is the first step in setting up an HDLRE instance.

First, choose properties from the context menu when you right-click the HANA database in Database Explorer and copy the host value.

Since we have already imported the data into our HANA database, we can proceed to preparing HDLRE connection for Scheduling data movement. The below syntax will create a remote HANA server and through that we can Load the data into our HDRLE tables.

Use a SQL console that is connected directly to the HDLRE instance. Run this SQL against the HDLRE instance using a user with the MANAGE ANY REMOTE SERVER privilege to Create Remote server.

Notice, you are naming the remote server HDBSERVER. Replace the <HANA Host Name> with the host copied from the properties section.

CREATE SERVER HDBSERVER CLASS 'HANAODBC'

USING

'Driver=libodbcHDB.so;

ConnectTimeout=0;

CommunicationTimeout=15000000;

RECONNECT=0;

ServerNode= <HANA Host Name>:443;

ENCRYPT=TRUE;

ssltruststore= <HANA Host Name>:443;

ssltrustcert=Yes;'

DEFAULT LOGIN 'DBADMIN' IDENTIFIED BY 'Password1';

Step 4: Create EXTRNLOGIN and LOCAL TEMP TABLE

Now, that the remote server is created, you must create the EXTERNLOGIN that will map your HDLRE user to the HANA user credentials and allow access to the HANA database.

Notice below in the CREATE EXTERNLOGIN statement you are granting your HDLRE user permission to use the HANA User for the HDBSERVER that was created above.

Ensure that the HANA user used in the SQL below has access to the objects that need to be referenced. It would be a good idea to use the DBADMIN user that we used initially to create the CUSTOMER table.

Replace <HDL USERNAME> with the current HDLRE user that is being used and replace <HANA USERNAME> and <HANA PASSWORD> with the HANA user credentials.

CREATE EXTERNLOGIN <HDL USER NAME> to HDBSERVER REMOTE LOGIN <HANA USER NAME> IDENTIFIED BY <HANA PASSWORD>;

To make sure we are getting the data back, we will create a virtual table that points to your customer table in HANA. For a quick test to ensure everything has been set up successfully. You will create a temporary table that points to your customer table in HANA.

CREATE EXISTING LOCAL TEMPORARY TABLE HDLRE_CUSTOMER

(

C_CUSTKEY integer not null,

C_NAME varchar(25) not null,

C_ADDRESS varchar(40) not null,

C_NATIONKEY integer not null,

C_PHONE varchar(15) not null,

C_ACCTBAL decimal(15,2) not null,

C_MKTSEGMENT varchar(10) not null,

C_COMMENT varchar(117) not null,

primary key (C_CUSTKEY)

) AT 'HDBSERVER..TPCH.CUSTOMER';

Then run a select against that table to ensure you are getting data back.

SELECT * FROM HDLRE_CUSTOMER;

You should be able to see the data return in the output.

Some Cleanup:

DROP TABLE HDLRE_CUSTOMER;

DROP SERVER HDBSERVER;

Step 5: Event Scheduler:

Now that we get back the data, we can finally use the Event Scheduler to load Data/tables asynchronously. HDLRE has a built-in event and event scheduler. One can take advantage of the remote service and the event scheduler to copy data from one’s HANA database to their HDLRE.

Start by creating a Destination Table for your data. In this case this is just a CUSTOMER table that is inside HDLRE.

CREATE TABLE HDLRE_CUSTOMER

(

C_CUSTKEY integer not null,

C_NAME varchar(25) not null,

C_ADDRESS varchar(40) not null,

C_NATIONKEY integer not null,

C_PHONE varchar(15) not null,

C_ACCTBAL decimal(15,2) not null,

C_MKTSEGMENT varchar(10) not null,

C_COMMENT varchar(117) not null,

primary key (C_CUSTKEY)

);

Here I will break down Creating an Event in HDLRE.

In the following SQL you create an Event called PullDataFromHANA. Immediately after you create a schedule SchedulePullDataFromHANA.

For the blog demo purpose, the Scheduler is scheduled to start at 1:00 AM and repeat the event every Monday.

Below the “HANDLER” you define the SQL script to be executed. The script creates a local temporary table (this table will be lost once the connection is dropped) and then inserts the data from that the temporary table into your HDLRE_CUSTOMER table which persists inside of your HDLRE instance.

So, every Monday the event is copying the data from your HANA table to your HDLRE table.

CREATE EVENT PullDataFromHANA

SCHEDULE SchedulePullDataFromHANA

START TIME '1:00am' ON ('Mon')

HANDLER

BEGIN

CREATE EXISTING LOCAL TEMPORARY TABLE HDLRE_CUSTOMER_TempTable

(

C_CUSTKEY integer not null,

C_NAME varchar(25) not null,

C_ADDRESS varchar(40) not null,

C_NATIONKEY integer not null,

C_PHONE varchar(15) not null,

C_ACCTBAL decimal(15,2) not null,

C_MKTSEGMENT varchar(10) not null,

C_COMMENT varchar(117) not null,

primary key (C_CUSTKEY)

) AT 'HDB_SERVER..TPCH.CUSTOMER';

INSERT INTO HDLRE_CUSTOMER SELECT * FROM HDLRE_CUSTOMER_TempTable;

END;

You can trigger the event manually to test if it is working. Trigger the event and then run a Select from the HDLRE table to ensure that the data has been copied.

TRIGGER EVENT PullDataFromHANA;

SELECT * FROM HDLRE_CUSTOMER;

Following is the output:

You have now successfully made an event that transfers data from a HANA database to a data lake database on a recurring basis. Get inventive and use SAP HANA Cloud’s data lake events to automate any repetitive SQL tasks! Events can contain any legitimate SQL operations!

No comments:

Post a Comment