Data is one of the most important assets of any enterprise, which makes exploring and analyzing it crucial.

SAP Data Intelligence is a powerful tool that lets you perform this kind of complex processing on your data.

What is SAP Data Intelligence and how does it relate to Data Hub?

In this blog, you will connect an SAP HANA database as a service to SAP Data Intelligence, explore the data with the Metadata Explorer, and apply a Random Forest classifier to it.

For this, you will need an SAP HANA database as a service running on SAP Cloud Platform (Cloud Foundry) and a running instance of SAP Data Intelligence.

So let's get started.

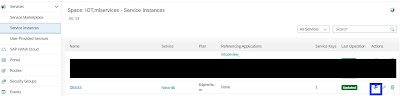

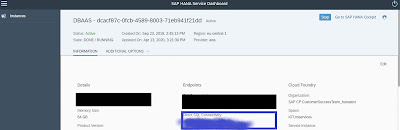

Open the SAP Cloud Platform Cockpit, navigate to the global account, then to the subaccount, and finally to the space where your HANA instance is running, and open the HANA Dashboard.

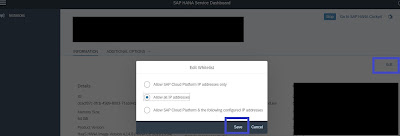

Click Edit and then select Allow All IP Addresses. This makes sure your SAP Data Intelligence instance can access the HANA instance.

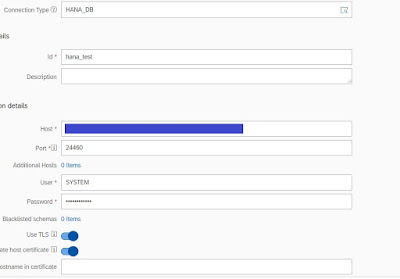

Now log in to SAP Data Intelligence, open Connection Management, and create a connection of type HANA_DB with these settings:

User, Password: the username and password for logging in to the HANA database

Host, Port: the direct SQL connectivity host and port, which you can find on the HANA dashboard from the step above
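The notebook code later in this post reads these same four fields back from the stored connection under a `contentData` key and passes them to `hdbcli`'s `dbapi.connect`. As a minimal sketch, assuming the connection record is a plain dictionary shaped the way the notebook indexes it, the mapping can be written as a small helper. The name `build_connect_kwargs` is hypothetical, and the host, port, and credentials below are placeholders.

```python
from typing import Any, Dict


def build_connect_kwargs(di_connection: Dict[str, Any]) -> Dict[str, Any]:
    """Map a connection record to keyword arguments for hdbcli's dbapi.connect.

    Hypothetical helper: assumes the fields sit under "contentData",
    as in the notebook code of this post.
    """
    content = di_connection["contentData"]
    missing = [k for k in ("host", "port", "user", "password") if k not in content]
    if missing:
        raise KeyError("connection is missing fields: " + ", ".join(missing))
    return {
        "address": content["host"],
        # The port may be stored as a string; pass it on as an integer.
        "port": int(content["port"]),
        "user": content["user"],
        "password": content["password"],
        # Same TLS settings as the notebook code in this post.
        "encrypt": "true",
        "sslValidateCertificate": "false",
    }


# Placeholder values, not a real endpoint
example = {"contentData": {"host": "my-hana-host.example.com", "port": "30015",
                           "user": "DBADMIN", "password": "secret"}}
kwargs = build_connect_kwargs(example)
# With hdbcli installed: conn = dbapi.connect(**kwargs)
```

In the notebook, `dbapi.connect(**build_connect_kwargs(di_connection))` could replace the explicit keyword arguments, and a missing field would fail with a clear message instead of a bare `KeyError`.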

Now we are going to create a Jupyter notebook.

For the analysis, my table (loaded from a file) looks like this:

| User ID | Gender | Age | Salary | Purchased |
|---|---|---|---|---|
| 1 | Male | 19 | 19000 | 0 |
| 2 | Male | 25 | 24000 | 1 |
| 3 | Male | 36 | 25000 | 0 |
| 4 | Female | 37 | 87000 | 1 |
| 5 | Female | 29 | 89000 | 0 |
| 6 | Female | 27 | 90000 | 1 |

I will use only the Age and Salary columns to predict the Purchased column.
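Because the notebook reads rows by position, the feature/label split depends on the column order of the table above: Age and Salary are the third and fourth columns (indices 2 and 3), and Purchased is the fifth (index 4). A minimal stdlib sketch of that split, using the sample rows from the table. The helper name `split_rows` is hypothetical.

```python
from typing import List, Sequence, Tuple

# Sample rows from the table above: (User ID, Gender, Age, Salary, Purchased)
ROWS = [
    (1, "Male", 19, 19000, 0),
    (2, "Male", 25, 24000, 1),
    (3, "Male", 36, 25000, 0),
    (4, "Female", 37, 87000, 1),
    (5, "Female", 29, 89000, 0),
    (6, "Female", 27, 90000, 1),
]

AGE, SALARY, PURCHASED = 2, 3, 4  # column positions within each row


def split_rows(rows: Sequence[Sequence]) -> Tuple[List[list], List[int]]:
    """Return (X, y): X holds [age, salary] pairs, y the purchased flags."""
    X = [[row[AGE], row[SALARY]] for row in rows]
    y = [row[PURCHASED] for row in rows]
    return X, y


X, y = split_rows(ROWS)
print(X[0], y[0])  # [19, 19000] 0
```

Naming the positions once makes it obvious what breaks if the table gains or reorders a column.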

Now open a Jupyter notebook from the ML Scenario Manager and install these libraries one by one (in a notebook cell, prefix each command with `!`):

```shell
pip install scikit-learn
pip install hdbcli
pip install matplotlib
```

Here is the code for the Jupyter notebook (if any library is missing, install it as shown above). Two things need to be configured:

1. The HANA connection ID: the `id_` argument of `get_datahub_connection`

2. The table name (Schema.TableName): the `path` variable
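The notebook builds its query by concatenating the table name into the SQL string (`'SELECT * FROM ' + path`). If that name could ever come from user input, it is worth checking that it really is a plain `Schema.TableName` before concatenating. A minimal sketch, assuming unquoted identifiers made only of letters, digits, and underscores (a conservative subset of what HANA accepts). The helper `select_all_sql` is hypothetical.

```python
import re

# Conservative pattern: SCHEMA.TABLE, each part starting with a letter or
# underscore and containing only letters, digits and underscores.
_TABLE_PATH = re.compile(r"[A-Za-z_][A-Za-z0-9_]*\.[A-Za-z_][A-Za-z0-9_]*")


def select_all_sql(path: str) -> str:
    """Build 'SELECT * FROM SCHEMA.TABLE' after validating the table path."""
    if not _TABLE_PATH.fullmatch(path):
        raise ValueError("expected Schema.TableName, got: " + repr(path))
    return "SELECT * FROM " + path


print(select_all_sql("ML_TEST.PURCHASE"))  # SELECT * FROM ML_TEST.PURCHASE
```

`fullmatch` is used instead of `match` with `^...$` so that a trailing newline or any appended SQL is rejected.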

import notebook_hana_connector.notebook_hana_connector

di_connection = notebook_hana_connector.notebook_hana_connector.get_datahub_connection(id_="hana") # enter id of the connection

from hdbcli import dbapi

conn = dbapi.connect(

address=di_connection["contentData"]['host'],

port=di_connection["contentData"]['port'],

user=di_connection["contentData"]['user'],

password=di_connection["contentData"]["password"],

encrypt='true',

sslValidateCertificate='false'

)

cursor = conn.cursor()

path="ML_TEST.PURCHASE" #enter table name

sql = 'SELECT * FROM '+path

cursor = conn.cursor()

cursor.execute(sql)

c=0

X=[]

y=[]

for row in cursor:

d_r=[]

#I AM USING 4 COLUMN DATASET

d_r.append(row[2])

d_r.append(row[3])

y.append(row[4])

X.append(d_r)

# Importing the libraries

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

# Splitting the dataset into the Training set and Test set

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.25, random_state = 0)

# Fitting Random Forest Classification to the Training set

from sklearn.ensemble import RandomForestClassifier

classifier = RandomForestClassifier(n_estimators = 10, criterion = 'entropy', random_state = 0)

classifier.fit(X_train, y_train)

# Predicting the Test set results

y_pred = classifier.predict(X_test)

# Making the Confusion Matrix

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_pred.tolist())

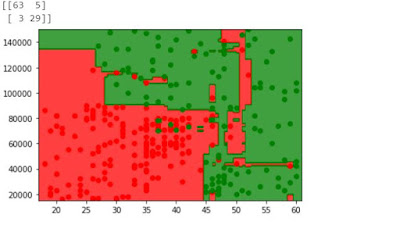

print(cm)

arrx=np.array(X_train)

y_set=np.array(y_train)

from matplotlib.colors import ListedColormap

X1, X2 = np.meshgrid(np.arange(start = arrx[:, 0].min() - 1, stop = arrx[:, 0].max() + 1, step = 0.1),

np.arange(start = arrx[:, 1].min() - 1, stop = arrx[:, 1].max() + 1, step = 1000))

plt.contourf(X1, X2, classifier.predict(np.array([X1.ravel(), X2.ravel()]).T).reshape(X1.shape),

alpha = 0.75, cmap = ListedColormap(('red', 'green')))

plt.xlim(X1.min(), X1.max())

plt.ylim(X2.min(), X2.max())

for i, j in enumerate(np.unique(y_set)):

plt.scatter(arrx[y_set == j, 0], arrx[y_set == j, 1],

c = ListedColormap(('red', 'green'))(i), label = j)

You should now see the results in a graph.

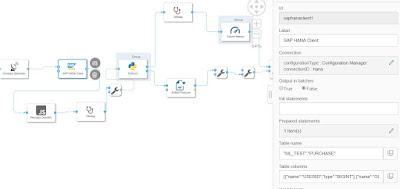
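To read the printed confusion matrix: `confusion_matrix(y_test, y_pred)` puts the true label on the rows and the predicted label on the columns, so for the 0/1 Purchased label the layout is `[[TN, FP], [FN, TP]]`. A minimal pure-Python sketch of the same counting, plus the accuracy derived from it. The labels below are made up for illustration and are not results from this dataset.

```python
from typing import List, Sequence


def confusion_2x2(y_true: Sequence[int], y_pred: Sequence[int]) -> List[List[int]]:
    """Count outcomes for 0/1 labels: rows = true label, columns = predicted label."""
    cm = [[0, 0], [0, 0]]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy(cm: List[List[int]]) -> float:
    """Share of correct predictions: the diagonal divided by the total."""
    total = sum(sum(row) for row in cm)
    return (cm[0][0] + cm[1][1]) / total


# Illustrative labels only
y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0]
cm = confusion_2x2(y_true, y_pred)
print(cm)            # [[2, 1], [1, 2]]
print(accuracy(cm))  # 0.6666666666666666
```

The off-diagonal cells are the mistakes: the top-right cell counts customers predicted to purchase who did not, and the bottom-left cell counts purchasers the model missed.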

Now let's create a pipeline from the ML Scenario Manager to train the model.

First, create a pipeline from the Python Producer template and change its components as follows to get the data from HANA:

1. Constant Generator: feeds in the SQL query. In this case the query is:

```sql
SELECT * FROM ML_TEST.PURCHASE
```

2. HANA Client: connects to HANA. Set Connection and Table Name, then scroll down and set Column Header to None.

3. JS Operator: extracts only the body of the message, i.e. the rows:

```javascript
$.setPortCallback("input", onInput);

// Returns true if the message body arrives as an array of bytes
function isByteArray(data) {
    switch (Object.prototype.toString.call(data)) {
        case "[object Int8Array]":
        case "[object Uint8Array]":
            return true;
        case "[object Array]":
        case "[object GoArray]":
            return data.length > 0 && typeof data[0] === 'number';
    }
    return false;
}

function onInput(ctx, s) {
    var inbody = s.Body;
    // convert the body into a string if it is bytes
    if (isByteArray(inbody)) {
        inbody = String.fromCharCode.apply(null, inbody);
    }
    // forward only the body (the rows) to the output port
    $.output(inbody);
}
```

4. ToString Converter: use its inInterface port to pass the data from the JS operator to the Python operator.
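Between the HANA Client and the training script, the JS operator turns a byte-array body into a string, and the training script then parses that string with `json.loads` and reads the `AGE`, `SALARY` and `PURCHASED` keys of each row. A minimal stdlib sketch of the same two steps in Python, assuming (as the training script does) that the rows arrive as a JSON array of objects. The helper names and the sample payload are hypothetical.

```python
import json
from typing import List, Tuple, Union

Body = Union[str, bytes, bytearray, List[int]]


def body_to_text(body: Body) -> str:
    """Turn a byte payload into a string, like the JS operator does."""
    if isinstance(body, str):
        return body
    # bytes, bytearray or a list of ints (the Uint8Array/GoArray case).
    # The JS version maps each byte to one character; for ASCII payloads
    # this UTF-8 decoding gives the same result.
    return bytes(body).decode("utf-8")


def rows_to_xy(text: str) -> Tuple[List[list], List[int]]:
    """Parse the JSON rows and split them into features and labels."""
    X, y = [], []
    for row in json.loads(text):
        X.append([row["AGE"], row["SALARY"]])
        y.append(row["PURCHASED"])
    return X, y


payload = '[{"AGE": 19, "SALARY": 19000, "PURCHASED": 0}]'
X, y = rows_to_xy(body_to_text(list(payload.encode("utf-8"))))
print(X, y)  # [[19, 19000]] [0]
```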

Here is the Python operator script that trains the model and saves it:

```python
# Example Python script to perform training on input data & generate Metrics & Model Blob
import json
import pickle

from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix


def on_input(data):
    # The input is a JSON array of rows with AGE, SALARY and PURCHASED keys
    dataset = json.loads(data)

    X = []
    y = []
    for j in dataset:
        X.append([j["AGE"], j["SALARY"]])
        y.append(j["PURCHASED"])

    # Splitting the dataset into the Training set and Test set
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

    # Fitting Random Forest Classification to the Training set
    classifier = RandomForestClassifier(n_estimators=10, criterion='entropy', random_state=0)
    classifier.fit(X_train, y_train)

    # Predicting the Test set results
    y_pred = classifier.predict(X_test)

    # Making the Confusion Matrix
    cm = confusion_matrix(y_test, y_pred)

    # to send metrics to the Submit Metrics operator, create a Python dictionary of key-value pairs
    metrics_dict = {"confusion matrix": str(cm)}

    # send the metrics to the output port - Submit Metrics operator will use this to persist the metrics
    api.send("metrics", api.Message(metrics_dict))

    # create & send the model blob to the output port - Artifact Producer operator will use this to persist the model and create an artifact ID
    model_blob = pickle.dumps(classifier)
    api.send("modelBlob", model_blob)


api.set_port_callback("input", on_input)
```

Wiretap operators have been added to check the output; you can skip those blocks.

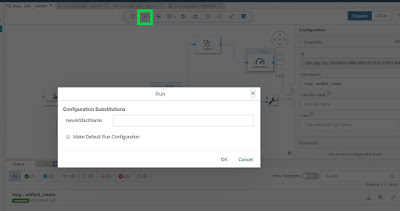
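The producer pipeline sends the trained classifier on the `modelBlob` port as the bytes produced by `pickle.dumps`, and the consumer pipeline later restores it with `pickle.loads`. A minimal stdlib sketch of that round trip, with a hypothetical `ThresholdModel` standing in for the `RandomForestClassifier` so it runs without scikit-learn. It is not the model trained above.

```python
import pickle
from typing import List, Sequence


class ThresholdModel:
    """Hypothetical stand-in for a trained classifier (not the real model)."""

    def __init__(self, salary_threshold: float) -> None:
        self.salary_threshold = salary_threshold

    def predict(self, rows: Sequence[Sequence[float]]) -> List[int]:
        # Predict 1 ("purchased") when the salary, the second feature, exceeds the threshold
        return [1 if salary > self.salary_threshold else 0 for _age, salary in rows]


# Producer side: serialize the model to bytes (what goes to the modelBlob port)
model_blob = pickle.dumps(ThresholdModel(50000))

# Consumer side: restore the model from the bytes and predict
restored = pickle.loads(model_blob)
print(restored.predict([[47, 25000], [30, 90000]]))  # [0, 1]
```

Unpickling needs the model's class, and for scikit-learn models a compatible library version, to be available where the blob is loaded. That is one reason to run both pipelines on the same Docker image tag. Only unpickle blobs from a trusted source, since `pickle.loads` can execute code.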

To run the pipeline, you need a Dockerfile that provides the Python libraries. Content of the Dockerfile:

```dockerfile
FROM python:3.6.4-slim-stretch
RUN pip install tornado==5.0.2
RUN python3.6 -m pip install numpy==1.16.4
RUN python3.6 -m pip install pandas==0.24.0
RUN python3.6 -m pip install scikit-learn
RUN groupadd -g 1972 vflow && useradd -g 1972 -u 1972 -m vflow
USER 1972:1972
WORKDIR /home/vflow
ENV HOME=/home/vflow
```

Next, add tags to the Dockerfile (here a custom tag, blogFile, is created), tag your Python operator with the same tag, and build the Dockerfile.

Now you can run the pipeline and store the artifact (provide a name for it).

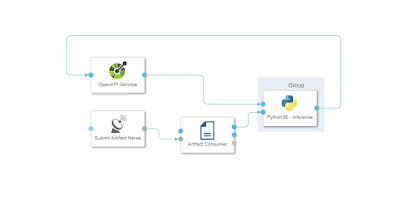

Next, create another pipeline that exposes the model as an API so it can be consumed. Use the Python Consumer template for this.

As in the previous step, tag the Python operator and replace its script with the following:

```python
import json
import pickle

import numpy as np

# Global vars to keep track of model status
model = None
model_ready = False


# Validate input data is JSON
def is_json(data):
    try:
        json.loads(data)
    except ValueError:
        return False
    return True


# When Model Blob reaches the input port
def on_model(model_blob):
    global model
    global model_ready
    model = pickle.loads(model_blob)
    model_ready = True


# Client POST request received
def on_input(msg):
    error_message = ""
    success = False
    try:
        api.logger.info("POST request received from Client - checking if model is ready")
        if model_ready:
            api.logger.info("Model Ready")
            api.logger.info("Received data from client - validating json input")
            user_data = msg.body.decode('utf-8')
            # Received message from client, verify json data is valid
            if is_json(user_data):
                api.logger.info("Received valid json data from client - ready to use")
                # obtain your results: each row in "data" is [Age, Salary]
                feed = json.loads(user_data)
                data_to_predict = np.array(feed['data'])
                api.logger.info(str(data_to_predict))
                prediction = model.predict(data_to_predict)
                prediction = (prediction > 0)
                success = True
            else:
                api.logger.info("Invalid JSON received from client - cannot apply model.")
                error_message = "Invalid JSON provided in request: " + user_data
                success = False
        else:
            api.logger.info("Model has not yet reached the input port - try again.")
            error_message = "Model has not yet reached the input port - try again."
            success = False
    except Exception as e:
        api.logger.error(e)
        error_message = "An error occurred: " + str(e)

    if success:
        # apply carried out successfully, send a response to the user
        result = json.dumps({'Results': str(prediction)})
    else:
        result = json.dumps({'Error': error_message})

    request_id = msg.attributes['message.request.id']
    response = api.Message(attributes={'message.request.id': request_id}, body=result)
    api.send('output', response)


api.set_port_callback("model", on_model)
api.set_port_callback("input", on_input)
```

Now deploy the pipeline. Once the deployment is done, you get a URL that you can use to test your model; make sure to append /v1/uploadjson/ to it.

Deployment of the pipeline can take a while.

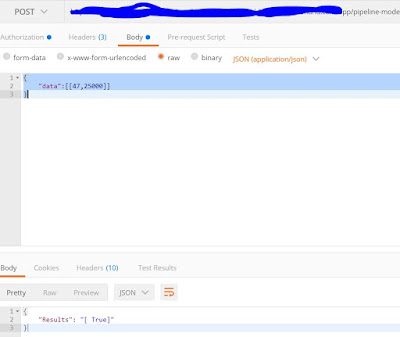

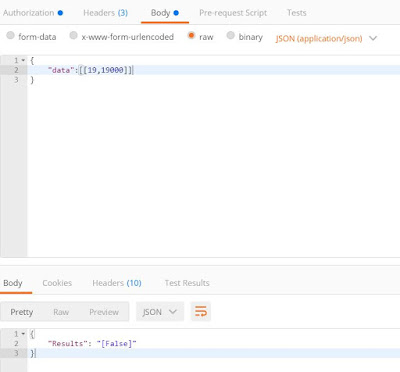
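Before sending a request, it helps to know what the consumer script does with it. It checks that the body is valid JSON, reads the `data` field as a list of `[Age, Salary]` rows, predicts, and answers with either a `Results` or an `Error` key. A minimal stdlib sketch of that request/response logic without the Data Intelligence `api` object. `handle_request` and the stand-in model are hypothetical, and because the real script formats the results with `str()` on a NumPy array, its exact response text differs from this sketch.

```python
import json
from typing import Any, List, Sequence


class AlwaysOne:
    """Hypothetical stand-in model that predicts 1 for every row."""

    def predict(self, rows: Sequence[Sequence[float]]) -> List[int]:
        return [1 for _ in rows]


def handle_request(body: bytes, model: Any) -> str:
    """Return the JSON response the consumer logic would send back."""
    if model is None:
        return json.dumps({"Error": "Model has not yet reached the input port - try again."})
    text = body.decode("utf-8")
    try:
        feed = json.loads(text)
    except ValueError:
        return json.dumps({"Error": "Invalid JSON provided in request: " + text})
    try:
        predictions = model.predict(feed["data"])
    except Exception as exc:  # e.g. a missing "data" key or wrongly shaped rows
        return json.dumps({"Error": "An error occurred: " + str(exc)})
    # Like the script, report whether each prediction is positive
    return json.dumps({"Results": [p > 0 for p in predictions]})


print(handle_request(b'{"data": [[47, 25000]]}', AlwaysOne()))  # {"Results": [true]}
print(handle_request(b"not json", AlwaysOne()))
```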

You can now test the model by posting data to that URL.

Headers of the call (Authorization uses Basic authentication with your username and password):

```json
[{"key":"X-Requested-With","value":"XMLHttpRequest","description":""},{"key":"Authorization","value":"Add your authentication here","description":""},{"key":"Content-Type","value":"application/json","description":""}]
```

Body of the request, containing Age and Salary:

```json
{
  "data": [[47, 25000]]
}
```
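The same call can be made from Python instead of a REST client. A minimal stdlib sketch, assuming placeholder values for the deployment URL and the credentials (use the URL from your deployment and your own user). `build_request` is a hypothetical helper that appends `/v1/uploadjson/` and sets the three headers listed above.

```python
import base64
import json
import urllib.request
from typing import Sequence


def build_request(base_url: str, user: str, password: str,
                  rows: Sequence[Sequence[float]]) -> urllib.request.Request:
    """Build the POST request for the deployed model endpoint."""
    url = base_url.rstrip("/") + "/v1/uploadjson/"
    # Basic authentication: base64 of "user:password"
    token = base64.b64encode((user + ":" + password).encode("utf-8")).decode("ascii")
    body = json.dumps({"data": [list(r) for r in rows]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "X-Requested-With": "XMLHttpRequest",
            "Authorization": "Basic " + token,
            "Content-Type": "application/json",
        },
    )


# Placeholder URL and credentials
req = build_request("https://example.com/app/pipeline/service", "user", "password", [[47, 25000]])
# To send it: urllib.request.urlopen(req).read().decode("utf-8")
```

Building the request separately from sending it keeps the URL, headers, and body easy to inspect before anything goes over the network.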
