Open Source Apache Spark is fast becoming the de facto standard for Big Data processing and analytics. It’s an ‘in-memory’ data processing engine, utilising the distributed computing power of 10’s or even 1000’s of logical linked host machines (cluster). It’s able to crunch through vast quantities of both structured and unstructured data. You can easily scale out your cluster as your data appetite grows.

In addition to this it can also be used as a data federation layer spanning both traditional databases as well as other popular big data platforms, such as Hadoop HDFS, Hadoop Hbase, Cassandra, Amazon Redshift and S3, to name a few.

SAP Hana Vora, extendeds upon Apache Spark further by providing additional business functions such as hierarchy support and currency translation.

In this blog though I’ll demonstrate how easy it is to consume Hana data directly via Apache Spark to get you up and running, until you are ready for the added benefits Vora provides.

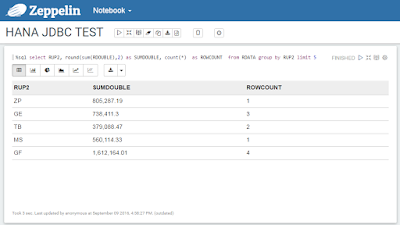

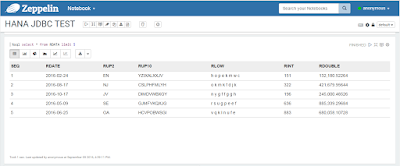

The following screen capture shows the end result of this blog, a HANA query being called directly by Spark SQL. This is similar to how Virtual tables and Smart Data Access (SDA) are used within Hana, to query other databases in real-time.

In addition to this it can also be used as a data federation layer spanning both traditional databases as well as other popular big data platforms, such as Hadoop HDFS, Hadoop Hbase, Cassandra, Amazon Redshift and S3, to name a few.

SAP Hana Vora, extendeds upon Apache Spark further by providing additional business functions such as hierarchy support and currency translation.

In this blog though I’ll demonstrate how easy it is to consume Hana data directly via Apache Spark to get you up and running, until you are ready for the added benefits Vora provides.

The following screen capture shows the end result of this blog, a HANA query being called directly by Spark SQL. This is similar to how Virtual tables and Smart Data Access (SDA) are used within Hana, to query other databases in real-time.

Note: I’ve use a Zeppelin Notebook for presenting the results, though Spark SQL can be called by many popular reporting presentation tools, including Lumira, Tableau, Spotfire, etc.

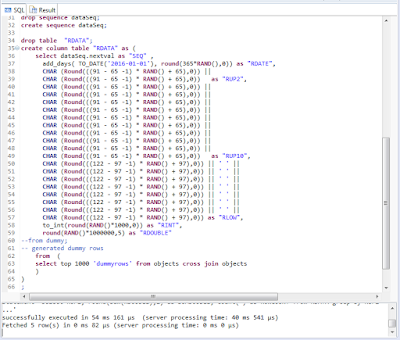

The first step is to create a table in Hana with some test data. The following sql statement generates a 1000 rows of test data, but can be tweaked if your wish to increase the number for heavier testing.

Generate Test Data

drop sequence dataSeq;

create sequence dataSeq;

drop table "RDATA";

create column table "RDATA" as (

select dataSeq.nextval as "SEQ" ,

add_days( TO_DATE('2016-01-01'), round(365*RAND(),0)) as "RDATE",

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) as "RUP2",

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) ||

CHAR (Round(((91 - 65 -1) * RAND() + 65),0)) as "RUP10",

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) || ' ' ||

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) || ' ' ||

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) || ' ' ||

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) || ' ' ||

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) || ' ' ||

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) || ' ' ||

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) || ' ' ||

CHAR (Round(((122 - 97 -1) * RAND() + 97),0)) as "RLOW",

to_int(round(RAND()*1000,0)) as "RINT",

round(RAND()*1000000,5) as "RDOUBLE"

--from dummy;

-- generated dummy rows

from (

// Change 'top 1000' to number of rows required

select top 1000 'dummyrows' from objects cross join objects

)

)

;

Execute the SQL.

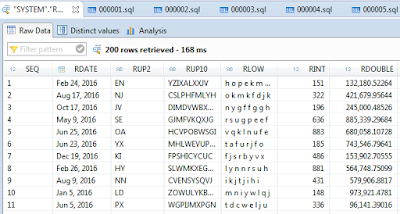

The results are as follows.

Next we need to prepare Apache Spark.

- Download and copy the latest Hana JDBC Driver (ngdbc.jar) to your Spark cluster.

- In your ‘spark-defaults.conf’ file you made need to add a reference to the jar file such as 'spark.jars <file location from step 1>/ngdbc.jar'

- (optional for those familiar with Zeppelin) Depending on your setup you may also need to add the following to the Zeppelin interpreter: ‘spark.files’ and ‘spark.jars’ properties - pointing the location of the Hana JDBC jar file.

Note: I’ve tested Spark using the recent SPS12 version of the Hana JDBC Driver (ngdbc.jar) against a SPS10 & SPS12 system and both seem ed to work fine. Older versions of the driver give the following error in Spark: ‘ org.apache.spark.SparkException: Job aborted due to stage failure: Task not serializable: java.io.NotSerializableException: com.sap.db.jdbc.topology.Host’

With the driver copied over and Spark config pointing to the jar file, you are now able to execute Spark commands against HANA. Ensure you have your Hana connection details. In a Hana MDC environment execute ‘select * from sys_databases.m_services’ if in doubt.

Note: At present only Basic Authorization can be used to access Hana (via Spark)

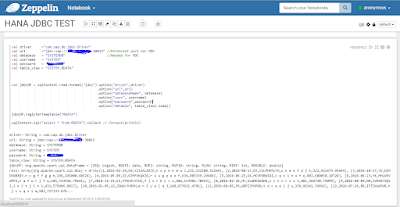

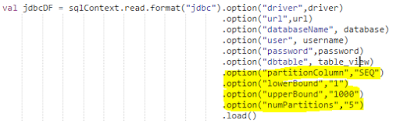

In this example I used the follow Scala syntax to create a Temporary Table (virtual table) in Spark linked to the Hana table created earlier.

Spark Scala code to Create Temporary Table (Virtual Table)

val driver ="com.sap.db.jdbc.Driver"

val url ="jdbc:sap://<your hana system>:<port>" //Different port for MDC

val database = "<your MDC Database Name" //Needed for MDC

val username = "<HANA USERID>"

val password = "<HANA PASSWORD>"

val table_view = "<TABLE, VIEW , CALC VIEW>"

val jdbcDF = sqlContext.read.format("jdbc").option("driver",driver)

.option("url",url)

.option("databaseName", database)

.option("user", username)

.option("password",password)

.option("dbtable", table_view).load()

jdbcDF.registerTempTable("<TEMP TABLE NAME IN SPARK>")

The results of running in Zeppelin are:

Now a basic Spark SQL can be run on the virtual table.

To better improve performance (for larger data-sets) it's also possible to call the query in parallel.

Using the following Scala Syntax I'v requested the data be split into 5 distinct sub queries on Hana, before being union-ed again in Spark.

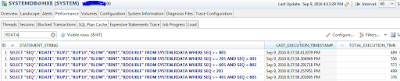

When larger data-sets are queried in Spark then you can see multiple sub queries are called in Hana.

Source: scn.sap.com

No comments:

Post a Comment