As you start using it with larger Tables or Views in HANA, you may notice that the query that is pushed down into HANA is not optimised efficiently. SUM & GROUP BY Clauses are NOT pushed down into HANA, this may cause a large granular result set to be move across the network, to only be Aggregated in Spark. That is certainly a waste of HANA's powerful query engine.

In this blog I will demonstrate the problem and show several ways to help get around it, using Apache Spark.

Using the same dataset from the earlier blog we can see how more complex Spark SQL ( executing against a test table in HANA - "RDATA") is actually pushed down into HANA.

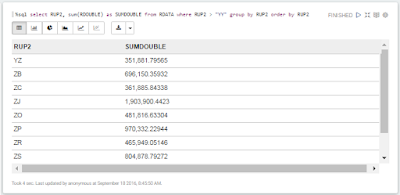

Executing the following Spark SQL in Zeppelin:

%sql select RUP2, sum(RDOUBLE) as SUMDOUBLE from RDATA where RUP2 > "YY" group by RUP2 order by RUP2

In this blog I will demonstrate the problem and show several ways to help get around it, using Apache Spark.

Using the same dataset from the earlier blog we can see how more complex Spark SQL ( executing against a test table in HANA - "RDATA") is actually pushed down into HANA.

Executing the following Spark SQL in Zeppelin:

%sql select RUP2, sum(RDOUBLE) as SUMDOUBLE from RDATA where RUP2 > "YY" group by RUP2 order by RUP2

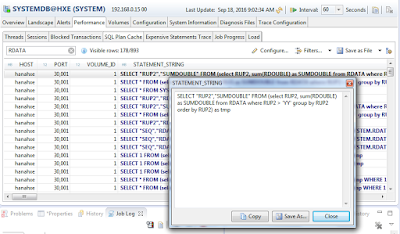

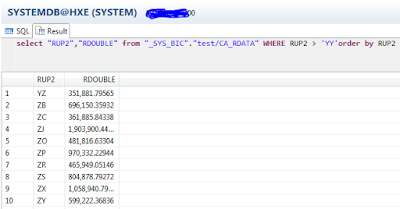

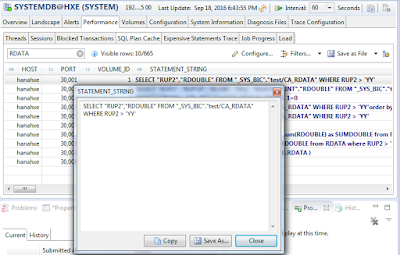

In Hana the query is called with Column Pruning and Filtering, but NO Aggregation:

The actual query that gets executed in HANA is:

SELECT "RUP2","RDOUBLE" FROM SYSTEM.RDATA WHERE RUP2 > 'YY'

You can see that the COLUMN pruning and WHERE clause filtering are pushed down, but sadly the important aggregation statements of SUM and GROUP BY are NOT. Rather than sending over just a small results set of records from Hana to Spark, a much larger data set is sent to be Aggregated in Spark.

Fortunately there is a Gold, Silver and Bronze solution to this.

- (GOLD) Install SAP HANA Vora on your cluster [check with SAP on licensing and system requirements]

- (SILVER) Avoid reading HANA Tables directly and utilise Hana Calculation Views, which have built in Aggregation

- (BRONZE) Manually push-down a more complex SQL statement directly from SPARK

Below I will demonstrate how these various options work, in reverse order.

1. BRONZE

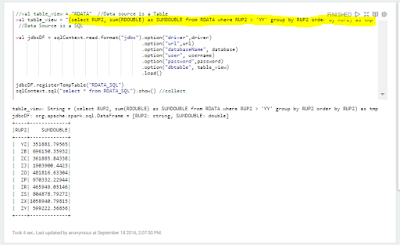

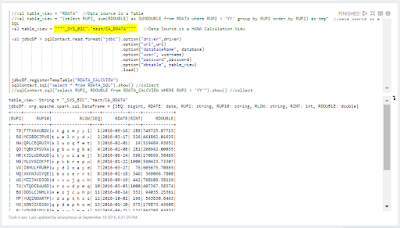

The simplest but least sophisticated of the options is use the more complex SQL as part of the data source definition.

In the SCALA script, used in the earlier blog instead of using a table as the data source, a full SQL statement is used instead.

val table_view = "(select RUP2, sum(RDOUBLE) as SUMDOUBLE from RDATA where RUP2 > 'YY' group by RUP2 order by RUP2) as tmp"

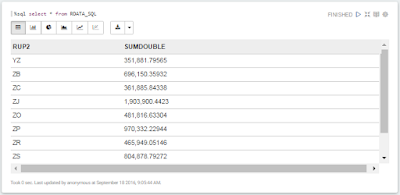

Executing the Spark SQL in Zeppelin:

In HANA the following SQL is called:

The following SQL is executed in HANA:

SELECT "RUP2","SUMDOUBLE" FROM (select RUP2, sum(RDOUBLE) as SUMDOUBLE from RDATA where RUP2 > 'YY' group by RUP2 order by RUP2) as tmp

As you can see the SUM and GROUP BY have been push-down to HANA.

2. SILVER

Where possible it is better to call well designed Hana Calculation Views, rather than the base Tables. Your production SAP S/4 or B/4 has 1000’s of tables, with complex business relationships between tables. If this operational data is needed in Spark or SAP Vora to join with contextual (candidate) data, from your Big data storage solution, then it's typically more efficient to read HANA Calculation views than the base tables. From a security perspective it’s also much better to use a HANA Calculation views to ensure data level security requirements are met.

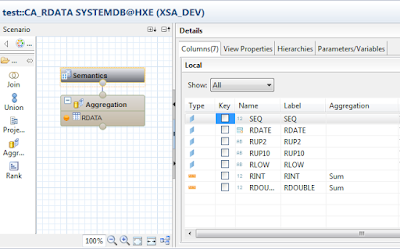

To illustrate this I’ve created a simple Calculation view, in HANA with Aggregation.

Due to the design of the query, the SUM() and GROUP BY clause aren’t strictly required anymore, see below.

E.g. select "RUP2","RDOUBLE" from "_SYS_BIC"."test/CA_RDATA" WHERE RUP2 > 'YY' order by RUP2

We can now use this Calc View directly in Spark, to avoid pushing down complex SQL in the data source definition.

The data source is defined now as a Calculation View instead of the Table.

E.g.

val table_view = """"_SYS_BIC"."test/CA_RDATA""""

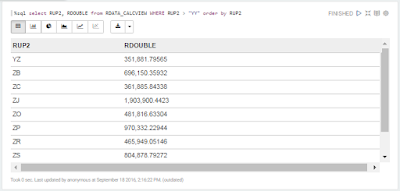

We can now execute the similar SQL in SPARK without the SUM() and GROUP BY, and the aggregated results are returned as expected.

%sql select RUP2, RDOUBLE from RDATA_CALCVIEW WHERE RUP2 > "YY" order by RUP2

In HANA the query is pushed down as expected.

The pushdown SQL in HANA is:

SELECT "RUP2","RDOUBLE" FROM "_SYS_BIC"."test/CA_RDATA" WHERE RUP2 > 'YY'

Due to the design of the Calculcation view the SUM and GROUP BY aren't necessay, and the aggregate results are returned.

Whew!!! Since Spark still doesn't do it for us !!!!!!!

For the keen eye’d observer you’ll notice the ORDER BY is still NOT Pushed down, and is instead performed in Spark.

Not the end of the world but still not optimal.

3. GOLD

SAP HANA Vora (an ADD-On to Spark) gets around this problem, straight out of the box, by having advanced push-down of logic to HANA.

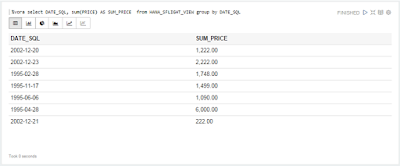

If you execute a similar SUM & GROUP BY query in Vora, against a SAP HANA data source then you can see a vastly improved SQL push-down:

%vora select DATE_SQL, sum(PRICE) from HANA_SFLIGHT_VIEW group by DATE_SQL

In HANA:

Source: scn.sap.com

No comments:

Post a Comment