How to free a HANA system on public cloud from I/O performance issues?

Apart from memory, storage performance plays a major role in safeguarding HANA performance. A storage system used for SAP HANA in TDI environments must fulfill a certain set of KPIs for minimum data throughput and maximum latency on the HANA data and log volumes. A cloud vendor needs to pass these KPIs, checked with HWCCT (Hardware Configuration Check Tool), for SAP to certify its cloud platform to run SAP HANA. The reason is to safeguard customers' HANA systems from poor I/O performance, which can degrade performance up to the point where the system stands still and becomes unresponsive. Typical symptoms include:

◈ Permanently slow system

◈ High commit times due to slow write rate to log volume

◈ Database requests may be blocked for so long that the performance impact becomes considerable, due to long waitforlock phases and a long critical phase of the savepoint.

◈ Increased startup times and database request times due to slow row/column loads.

◈ Longer takeover and active times for HSR

◈ Increased number of HANA locks such as “Barrier Wait”, “IO Wait”, “Semaphore Wait”, and so on.

Things get worse on a high-load system where a high volume of modified pages is constantly flushed to disk, e.g. during data loading, long-running transactions, massive updates/inserts, etc. We had a scenario where a customer’s BWoH production system constantly came to a standstill under high update/insert load. The issue was escalated to SAP and up to management level, and the culprit later turned out to be that the system, hosted by an on-premise hosting vendor, failed to meet the SAP TDI storage KPIs.

Now back to the scenario of running our HANA systems on public cloud. Cloud vendors have certified their platforms to run SAP HANA; however, it is our own responsibility to set up the HANA systems to meet the SAP storage KPIs and avoid all possible I/O issues. Each cloud vendor provides guidelines for optimal storage configuration, as below:

GCP

https://cloud.google.com/solutions/partners/sap/sap-hana-deployment-guide

“To achieve optimal performance, the storage solution used for SAP HANA data and log volumes should meet SAP’s storage KPIs. Google has worked with SAP to certify SSD persistent disks for use as the storage solution for SAP HANA workloads, as long as you use one of the supported VM types. VMs with 32 or more vCPUs and a 1.7 TiB volume for data and log files can achieve up to 400 MB/sec for writes, and 800 MB/sec for reads.”

AWS

https://aws.amazon.com/blogs/awsforsap/deploying-sap-hana-on-aws-what-are-your-options/

◈ With the General Purpose SSD (gp2) volume type, you are able to drive up to 160 MB/s of throughput per volume. To achieve the maximum required throughput of 400 MB/s for the TDI model, you have to stripe three volumes together for SAP HANA data and log files.

◈ Provisioned IOPS SSD (io1) volumes provide up to 320 MB/s of throughput per volume, so you need to stripe at least two volumes to achieve the required throughput.
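The stripe counts in the AWS guidance follow from simple arithmetic: divide the target throughput by the per-volume limit and round up. The sketch below reproduces that calculation using the figures quoted above. It assumes throughput adds up linearly across striped volumes and that the instance's own storage bandwidth is not the bottleneck; the function name is made up for illustration.

```python
import math


def volumes_needed(target_mb_s: float, per_volume_mb_s: float) -> int:
    """Smallest number of striped volumes whose combined throughput reaches the target.

    Assumes throughput scales linearly with the number of stripes.
    """
    if target_mb_s <= 0 or per_volume_mb_s <= 0:
        raise ValueError("throughput values must be positive")
    return math.ceil(target_mb_s / per_volume_mb_s)


TDI_TARGET_MB_S = 400  # required throughput quoted in the AWS text above

for volume_type, limit_mb_s in (("gp2", 160), ("io1", 320)):
    count = volumes_needed(TDI_TARGET_MB_S, limit_mb_s)
    print(f"{volume_type}: stripe at least {count} volumes")
```

With the quoted limits this gives 3 volumes for gp2 and 2 for io1, matching the guidance.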

Azure

https://docs.microsoft.com/en-gb/azure/virtual-machines/workloads/sap/hana-get-started

“However, for SAP HANA DBMS Azure VMs, the use of Azure Premium Storage disks for production and non-production implementations is mandatory.

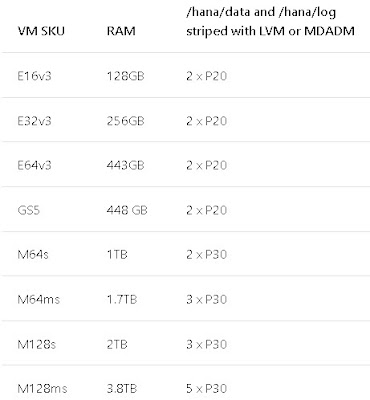

Based on the SAP HANA TDI Storage Requirements, the following Azure Premium Storage configuration is suggested:”

Now we know that it is important to have at least a 1.7 TiB SSD persistent disk on GCP, LVM striping across 3 gp2 or 2-3 io1 volumes on AWS, and LVM striping across 2-3 disks on Azure Premium Storage.
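For readers who have not built a striped volume before, here is a minimal sketch of what LVM striping involves. It only generates the commands as text so they can be reviewed first; nothing is executed. The device names, volume group and logical volume names, the 256 KiB stripe size and the choice of XFS are all assumptions for illustration, not values taken from the vendor guides, so check the respective guide for the recommended settings.

```python
import shlex


def lvm_stripe_commands(devices, vg_name="vg_hana_data",
                        lv_name="lv_hana_data", stripe_kib=256):
    """Return the shell commands that would build one striped LV over `devices`.

    All names and the stripe size are illustrative defaults, not recommendations.
    """
    if len(devices) < 2:
        raise ValueError("striping needs at least two devices")
    devs = " ".join(shlex.quote(d) for d in devices)
    return [
        f"pvcreate {devs}",              # initialise the disks as physical volumes
        f"vgcreate {vg_name} {devs}",    # group them into one volume group
        # -i = number of stripes, -I = stripe size in KiB, -l 100%FREE = use all space
        f"lvcreate -i {len(devices)} -I {stripe_kib} -l 100%FREE -n {lv_name} {vg_name}",
        f"mkfs.xfs /dev/{vg_name}/{lv_name}",  # file system choice is an assumption
    ]


# Hypothetical device names for three attached volumes
for command in lvm_stripe_commands(["/dev/xvdf", "/dev/xvdg", "/dev/xvdh"]):
    print(command)
```

The number of stripes is taken from the number of devices, so every volume in the group carries an equal share of the I/O.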

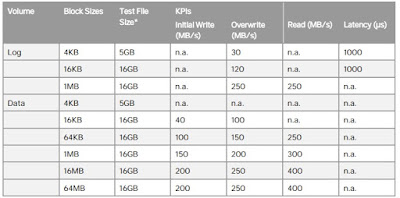

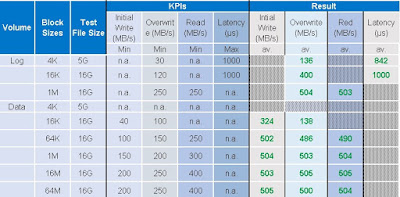

Next, let's look at the certified KPIs for data throughput and latency for HANA systems:

SAP Note 1943937 – Hardware Configuration Check Tool – Central Note

So, what KPIs did GCP and AWS achieve without and with the optimal storage configuration?

*These tests were conducted months ago and are for your reference only. For accurate results that reflect your own system, you are advised to run the HWCCT storage test.

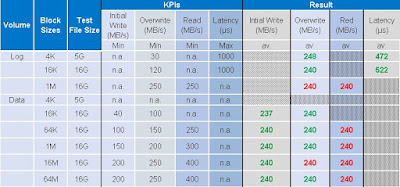

GCP < 1.7TB SSD PD

GCP >= 1.7TB SSD PD

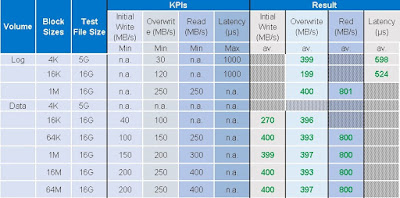

AWS gp2 without LVM striping

AWS 3 x gp2 with LVM striping

I have not had a chance to test on the Azure platform yet. It would be great if someone who has tested it could share the results.

From the results, we see slow I/O that fails certain KPIs when the storage is set up without following the guidelines, and thus there is a risk of the performance issues caused by slow I/O listed above.

If you run your HANA system on cloud and constantly encounter the I/O issues listed above, ensure the HANA data and log volumes are set up according to the respective guidelines. Doing so keeps you within SAP support, avoids storage-related I/O performance issues, and makes the most of the solid underlying platforms provided by GCP, AWS and Azure.
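Before scheduling a full HWCCT run, a quick look at sequential write throughput can tell you whether a volume is obviously misconfigured. The sketch below is a rough sanity check only and is not a substitute for HWCCT: it writes a temporary file in large blocks, forces it to disk, and reports MB/s. The function name, file size and block size are arbitrary choices for illustration, and the result says nothing about latency or the other KPIs.

```python
import os
import tempfile
import time


def sequential_write_mb_s(directory, total_mb=256, block_kb=1024):
    """Write about total_mb of data in block_kb chunks, fsync, and return MB/s.

    Rough indicator only: a single stream, no latency measurement.
    """
    block = os.urandom(block_kb * 1024)
    blocks = (total_mb * 1024) // block_kb
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as handle:
            for _ in range(blocks):
                handle.write(block)
            handle.flush()
            os.fsync(handle.fileno())  # make sure the data really reached the disk
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # always clean up the test file
    return (blocks * block_kb / 1024) / elapsed


# Replace the directory with the mount point of the volume you want to check.
rate = sequential_write_mb_s(tempfile.gettempdir(), total_mb=32)
print(f"sequential write: {rate:.0f} MB/s")
```

A figure far below the vendor's stated throughput suggests the volume layout is worth reviewing before running the full HWCCT storage test.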
