Introduction

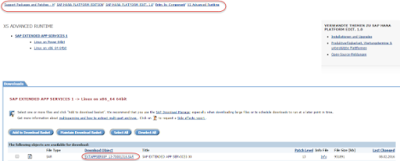

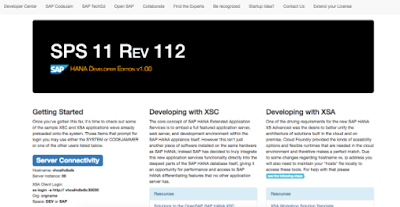

We will be posting new videos to the SAP HANA Academy to show new features and functionality introduced with SAP HANA 2.0 Support Package Stack (SPS) 00.

We will be posting new videos to the SAP HANA Academy to show new features and functionality introduced with SAP HANA 2.0 Support Package Stack (SPS) 00.

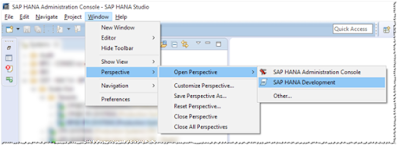

Tutorial Video