Friday 30 December 2022

Consuming SAP HANA Cloud from the Kyma environment

Thursday 29 December 2022

HANA Docker Install with NFS mounts – (Fully Automated)

Wednesday 28 December 2022

Accessing SAP HANA Cloud, data lake Files from Python

Overview:

Friday 23 December 2022

NSE Implementation Experience

Wednesday 21 December 2022

Getting Started on your SAP S/4HANA implementation

Monday 19 December 2022

MTA project Integration with Git in Business Application Studio: HANA XSA

Friday 16 December 2022

Wednesday 14 December 2022

Add New Fields To “S/4HANA Manage Purchase Requisition- Professional Fiori App” With CDS Extension

Problem:

Saturday 10 December 2022

Spend Reporting with S/4HANA Embedded Analytics

Friday 9 December 2022

Replicate artifacts data from an HDI Container in SAP Business Application Studio to SAP HANA On-Premise

Wednesday 7 December 2022

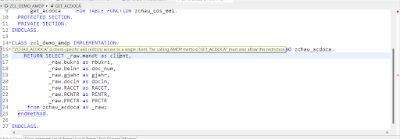

Consume CDS View inside CDS Table Function by using AMDP

Friday 2 December 2022

Use multi-tenant capabilities of SAP Job Scheduling Service to schedule tenant-specific jobs to pull data from the S/4HANA system

SAP Job Scheduling Service

Wednesday 30 November 2022

WhatsApp Integration in SAP S/4 HANA On-Premise

Introduction:

Monday 28 November 2022

Automating SAP HANA Installation in Minutes (AWS) – Part 2

Introduction:

Monday 21 November 2022

Automating SAP HANA Installation in Minutes (AWS) – Part 1

Friday 18 November 2022

Extensibility Guide for SAP S/4HANA Cloud: SAP Extensibility Explorer

Wednesday 16 November 2022

Building Multi-tenant SaaS Solution and Extension using Nodejs on BTP

Context

Monday 14 November 2022

Load Tables Asynchronously in SAP HANA Cloud, data lake Relational Engine

Overview

Friday 11 November 2022

Installation Guide: Eclipse on macOS

Wednesday 9 November 2022

How can a single Supplier have multiple Contact Persons in SAP S/4HANA Cloud?

Monday 7 November 2022

Linking Contract Accounting with an operative service system (Disconnection and Reconnection of Services)

Saturday 5 November 2022

Keep-Data-Clean: Practical approaches to keep your core (S/4 HANA) system clean

Saturday 22 October 2022

SAP Data Intelligence and SAP PaPM Cloud integration

Scenario:

Friday 21 October 2022

Analyze SAP S/4HANA On-Premises Data using SAP Analytics Cloud Powered by SAP Data Warehouse Cloud

Wednesday 19 October 2022

AWS EC2 OS patching automation for SAP Landscape

Monday 17 October 2022

SAP S/4HANA Cloud Content Federation with SAP BTP Launchpad Site

Saturday 15 October 2022

Receive Notifications from Amazon Simple Notification Service for SAP S/4HANA BTP Extension App

Friday 14 October 2022

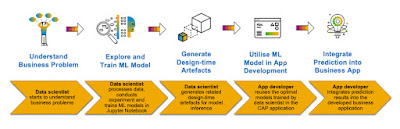

Develop a Machine Learning Application on SAP BTP – Data Science Part

Wednesday 12 October 2022

HA/DR Architecture on SAP Shared File system on Windows in Azure Cloud

Monday 10 October 2022

Data migration from SAP S/4HANA Cloud and SAP HANA Smart Data Integration

Background

Friday 7 October 2022

How to recreate a HANA Cloud service key aka password rotation

Problem:

Solution:

Wednesday 5 October 2022

GDAL with SAP HANA driver in OSGeo4W

Setup SAP HANA plug-in…

Monday 3 October 2022

Python hana_ml: Classification Training with APL(GradientBoostingBinaryClassifier)

Environment

Wednesday 28 September 2022

SAP HANA Cockpit Installation.

Monday 26 September 2022

HANA Table Migration using Export & Import

Requirement –

Solution –

Wednesday 21 September 2022

What’s New in SAP HANA Cloud in September 2022

Friday 16 September 2022

Jupyter Notebook and SAP HANA: Persisting DataFrames in SAP HANA

Introduction

SAP HANA

Wednesday 14 September 2022

Python with SAP Databases

Monday 12 September 2022

HANA SPS upgrade from HANA 2.0 Rev 37 to HANA 2.0 Rev 59 on the DR server when the primary and secondary servers are set up for replication

Saturday 10 September 2022

SAP Analytics Cloud – Troubleshooting – Timeline Traces

Friday 9 September 2022

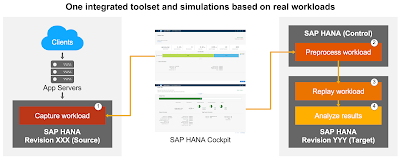

SAP HANA Capture and Replay Tool End-to-end Hands-On Tutorial

What can I do with SAP HANA capture and replay?

Wednesday 7 September 2022

Python hana_ml: PAL Classification Training(UnifiedClassification)

Sunday 28 August 2022

Load Data from a Local File into HANA Cloud, Data Lake

Overview

Ever wondered how you can load data or files from your local machine into HANA Cloud, Data Lake without hassle?

Then you are in the right place. This blog provides a step-by-step guide showing how anyone can easily load data from their local machine into HANA Cloud, Data Lake using the Data Lake Files store.

Step-by-step process:

Firstly, one must provision a Data Lake instance from the SAP HANA Cloud Central through SAP BTP Cockpit. One can learn to do so by going through the following tutorial – Provision a Standalone Data Lake in SAP HANA Cloud | Tutorials for SAP Developers
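Once the instance exists, the upload itself is an HTTPS call. The sketch below assumes the WebHDFS-style REST interface of data lake Files (`/webhdfs/v1/<path>?op=CREATE&data=true`, container ID in an `x-sap-filecontainer` header, client-certificate authentication). The endpoint, container ID, paths, and certificate files are placeholders you would take from your own instance; verify the exact request shape against the data lake Files REST documentation before relying on it.

```python
import ssl
import urllib.parse
import urllib.request


def build_upload_request(endpoint: str, container_id: str, remote_path: str,
                         payload: bytes) -> urllib.request.Request:
    """Build (but do not send) a PUT request that creates a file in data lake Files."""
    path = "/webhdfs/v1/" + urllib.parse.quote(remote_path.lstrip("/"))
    query = urllib.parse.urlencode({"op": "CREATE", "data": "true"})
    return urllib.request.Request(
        url=f"https://{endpoint}{path}?{query}",
        data=payload,
        method="PUT",
        headers={
            "x-sap-filecontainer": container_id,   # assumed: instance/container ID
            "Content-Type": "application/octet-stream",
        },
    )


def upload_file(local_file: str, endpoint: str, container_id: str, remote_path: str,
                cert_file: str, key_file: str) -> int:
    """Upload a local file using client-certificate (mutual TLS) authentication."""
    context = ssl.create_default_context()
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    with open(local_file, "rb") as fh:
        request = build_upload_request(endpoint, container_id, remote_path, fh.read())
    with urllib.request.urlopen(request, context=context) as response:
        return response.status
```

Keeping request construction separate from sending makes the URL and headers easy to inspect before any network call is made.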

Friday 26 August 2022

Integrating SAP PaPM Cloud & SAP DWC

Wednesday 24 August 2022

E-Mail Templates in S/4 HANA- Display table in Email Template

SAP S/4 HANA (both cloud and on-premise) has a very interesting feature: E-Mail Templates.

In this blog post, we will learn how to embed a table with multiple records in an e-mail template created in SE80.

Example:

◉ The requirement is to send an e-mail at the end of the month to all employees who have pending hours in their time sheets. Data is taken from standard HR tables, and pending hours are calculated with a formula for each employee and for each project the employee is assigned to: Pending hours = Planned hours – (Approved + Submitted) hours.
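The post builds this in ABAP; as a language-neutral illustration only, here is a minimal Python sketch of the pending-hours formula and of rendering one HTML table row per project with pending hours. The `TimesheetLine` structure and its field names are hypothetical stand-ins for the HR data.

```python
from dataclasses import dataclass
from html import escape
from typing import List


@dataclass
class TimesheetLine:
    # Hypothetical per-project time sheet figures for one employee.
    project: str
    planned: float
    approved: float
    submitted: float

    @property
    def pending(self) -> float:
        # Pending hours = Planned hours - (Approved + Submitted) hours
        return self.planned - (self.approved + self.submitted)


def pending_hours_table(lines: List[TimesheetLine]) -> str:
    """Render an HTML table containing only projects that still have pending hours."""
    rows = "".join(
        f"<tr><td>{escape(ln.project)}</td><td>{ln.pending:.2f}</td></tr>"
        for ln in lines
        if ln.pending > 0
    )
    return ("<table><tr><th>Project</th><th>Pending hours</th></tr>"
            f"{rows}</table>")
```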

Monday 22 August 2022

CDS Views – selection on date plus or minus a number of days or months

Problem

We need to select records in a CDS view that are less than 12 months old. This requires comparing a date field in the view with the system date minus 12 months.
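Outside CDS, the intended predicate is simply "row date is on or after the system date minus 12 months". A minimal Python sketch of that logic follows. It assumes month arithmetic clamps the day to the last day of the target month (for example, 29 February 2024 minus 12 months gives 28 February 2023); check how DATS_ADD_MONTHS handles such edge cases in your release before assuming identical behaviour.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift d by `months`, clamping the day to the last day of the target month."""
    total = d.year * 12 + (d.month - 1) + months
    year, month0 = divmod(total, 12)
    month = month0 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def is_recent(row_date: date, today: date, months: int = 12) -> bool:
    """True when row_date >= today minus `months` months."""
    return row_date >= add_months(today, -months)
```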

The WHERE clause should look something like the following.

```
Where row_date >= DATS_ADD_MONTHS ($session.system_date,-12,'UNCHANGED')
```

The problem is that this statement is not permitted in a CDS view; activation fails with the following error message.

Friday 19 August 2022

Monitoring Table Size in SAP HANA

Monday 8 August 2022

Flatten Parent-Child Hierarchy into Level Hierarchy using HANA (2.0 & above) Hierarchy Functions in SQL

Knock knock! Anyone else looking for handy illustrations of the hierarchy functions introduced with HANA 2.0? Well, count me in, then.

If you are trying hard to avoid writing SQL with recursive self-joins to flatten hierarchical data stored as parent-child relationships, the hierarchy functions in HANA can certainly be a saviour. Let's look at something easy to implement using the predefined hierarchy functions available with HANA 2.0 and above.
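To make the target shape concrete, here is a plain-Python sketch of the same transformation: a parent-child mapping flattened into one row per leaf with LEVEL1, LEVEL2, … columns, padded with `None` for shallower branches. This is not how HANA's hierarchy functions work internally; it only illustrates the result, and it assumes the input has no cycles. The sample node names are hypothetical.

```python
from typing import Dict, List, Optional


def flatten_to_levels(parent_of: Dict[str, Optional[str]]) -> List[List[Optional[str]]]:
    """Return one row per leaf: [LEVEL1, LEVEL2, ...], padded with None."""
    paths: Dict[str, List[str]] = {}

    def path(node: str) -> List[str]:
        # Memoised walk from a node up to its root (assumes no cycles).
        if node not in paths:
            parent = parent_of[node]
            paths[node] = ([] if parent is None else path(parent)) + [node]
        return paths[node]

    parents = {p for p in parent_of.values() if p is not None}
    leaves = [n for n in parent_of if n not in parents]
    rows = [path(leaf) for leaf in leaves]
    depth = max((len(r) for r in rows), default=0)
    return [r + [None] * (depth - len(r)) for r in rows]
```

For example, `{"ALL": None, "FOOD": "ALL", "BREAD": "FOOD"}` yields the single row `["ALL", "FOOD", "BREAD"]`.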

As a starter, let’s assume we have a miniature article hierarchy structured as below:

Saturday 6 August 2022

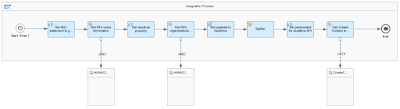

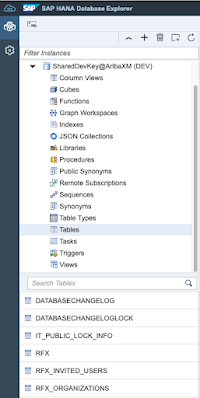

Integration of SAP Ariba Sourcing with Qualtrics XM for Suppliers, HANA Cloud Database

In this blog, I will give you an overview of a solution to extract supplier data from a Sourcing event in SAP Ariba Sourcing, and save it in a mailing list in SAP Qualtrics XM for Suppliers, using BTP services.

Process Part 1

First, to extract the information from SAP Ariba Sourcing, I use the Operational Reporting for Sourcing (Synchronous) API to get events by date range, and the Event Management API, which returns supplier bid and invitation information from the sourcing events.

Then, I store the information I need in an SAP HANA Cloud database. I created 3 tables to store the information that I will send to SAP Qualtrics XM for Suppliers: RFx header information, Invitations, and Organizations contact data.

Finally, I send all the required information to an SAP Qualtrics XM for Suppliers mailing list, which then automatically sends surveys to the suppliers that participated in the Ariba sourcing events.

To send the information to SAP Qualtrics XM for Suppliers, I use the Create Mailing List API.

Integration

All this is orchestrated by the SAP Integration Suite, where I created 2 iFlows:

◉ The first iFlow is to get the information from the SAP Ariba APIs, and store it in the SAP HANA Cloud database.

Process Part 2

Friday 5 August 2022

Processing of Prepayments with SAP S/4HANA Accruals Management

You have surely already heard about SAP S/4HANA Accruals Management?

Starting with SAP S/4HANA 2021 (on-premise), Accruals Management provides new functionality to process deferrals. Until now, processing prepayments has been a typical manual activity for Finance users. With this new functionality, Finance can achieve efficiency gains, and it is quite easy to implement as well. You can also use the deferrals part even if you have not yet implemented the accruals part.

In this blog I will show you how to set up the SAP S/4HANA Accruals Management for deferrals in order to optimize this process.

Thursday 4 August 2022

App Extensibility for Create Sales Orders – Automatic Extraction: Custom Proposal for Sales Order Request Fields (Using BAdI)

In the Create Sales Orders – Automatic Extraction app, the system starts data extraction and data proposal for sales order requests immediately after your purchase order files are uploaded. If the SAP pre-delivered proposal rules do not satisfy your business needs, key users can create custom logic to implement their own proposal rules.

Here is an example procedure. In your custom logic, you want the system to set the sales area to 0001/01/01 if the company code is 0001, and to set the requested delivery date to the current date plus seven days if this date is initial, that is, when machine learning cannot extract the date from the file or the date does not exist in the file.
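The actual implementation is ABAP custom logic in the BAdI; purely to make the two rules explicit, here is a Python sketch. The `SalesOrderRequest` class and its field names are hypothetical simplifications; the sales area is modelled as sales organization / distribution channel / division.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class SalesOrderRequest:
    # Hypothetical, simplified stand-in for the extracted sales order request fields.
    company_code: str
    sales_org: str = ""
    distribution_channel: str = ""
    division: str = ""
    requested_delivery_date: Optional[date] = None   # None = initial / not extracted


def apply_custom_proposal(req: SalesOrderRequest, today: date) -> SalesOrderRequest:
    # Rule 1: company code 0001 -> sales area 0001/01/01
    if req.company_code == "0001":
        req.sales_org, req.distribution_channel, req.division = "0001", "01", "01"
    # Rule 2: initial requested delivery date -> today + 7 days
    if req.requested_delivery_date is None:
        req.requested_delivery_date = today + timedelta(days=7)
    return req
```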

Wednesday 3 August 2022

How to Display Situations in Your Custom Apps with the Extended Framework of Situation Handling

Situation Handling in SAP S/4HANA and SAP S/4HANA Cloud detects exceptional circumstances and displays them to the right users.

I’m the lead UI developer within the Situation Handling framework for end-user-facing apps, and I’m excited to present a new feature in the Situation Handling extended framework to you. Together with the team from SAP Fiori elements, we introduce a new, simplified way of displaying situations right in your business apps. You can enable a great user experience for your end users with low effort. This tutorial-style article explains the details and points you to further resources that you can use as a blueprint for a successful implementation.

With the extended framework of Situation Handling, situations can be displayed in apps based on SAP Fiori elements for OData version 4. You can do this without any front-end development, just by extending the app’s OData service.

Tuesday 2 August 2022

Currency conversion in BW/4HANA, Enterprise HANA Modelling

In most business models, cost centers or profit centers are located in different countries, so costs and profits are generated in different currencies. At the end of the year, the finance team calculates total cost or profit in a target currency (USD, EUR, or another), usually the currency of the country where the company's headquarters is located, to produce the ledger and balance sheet. In that scenario, we need to perform currency conversion to generate analytics reports. In this blog, I discuss the currency conversion steps for different scenarios: BW/4HANA, Enterprise HANA Modelling, and SAP Analytics Cloud.

1. Currency conversion in BW/4HANA:

In our scenario, a finance analyst wants all profits generated in the Belgian and French plants (in EUR) to be converted into USD, the currency of the organization's head office, to generate the ledger posting.
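The arithmetic behind this scenario is a per-record multiplication by an exchange rate followed by a sum. A minimal Python sketch, assuming a single hypothetical EUR to USD rate and rounding each converted record to two decimals; real BW/4HANA conversions use conversion types and dated rates (typically maintained in table TCURR), which this sketch ignores. Plant IDs are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Dict, Iterable, Tuple

# (plant, amount, currency) records.
Record = Tuple[str, Decimal, str]


def total_in_target(records: Iterable[Record],
                    rates: Dict[Tuple[str, str], Decimal],
                    target: str = "USD") -> Decimal:
    """Convert each amount to `target` and sum, rounding each record to 2 decimals."""
    total = Decimal("0.00")
    for _plant, amount, currency in records:
        rate = Decimal("1") if currency == target else rates[(currency, target)]
        total += (amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return total
```

Using `Decimal` rather than `float` avoids binary rounding artefacts in monetary amounts.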

Monday 1 August 2022

Backup and Recovery for the SAP HANA (BTP)

SAP HANA (HANA Cloud, HaaS, etc.) offers comprehensive functionality to safeguard your database: automatic backups ensure that it can be recovered quickly and with maximum business continuity, even in emergencies. The recovery point objective (RPO) is no more than 15 minutes.

A full backup of every SAP HANA Cloud instance is taken automatically once per day.

These backups are encrypted using the capabilities of SAP Business Technology Platform. The retention time for backups is 14 days, which means that an instance can be recovered from backups taken within the last 14 days.
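The retention arithmetic can be sketched as follows: with one full backup per day and a 14-day retention period, at most 14 daily backups are available at any time. This is a simplified model that only considers daily full backups; it ignores the log backups that provide the 15-minute RPO, and the backup times are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List

RETENTION = timedelta(days=14)  # retention period stated in the post


def retained_backups(backup_times: List[datetime], now: datetime) -> List[datetime]:
    """Daily full backups still inside the retention window ending at `now`."""
    cutoff = now - RETENTION
    return [t for t in backup_times if cutoff <= t <= now]


def within_retention(target: datetime, now: datetime) -> bool:
    """True if `target` lies inside the retention window ending at `now`."""
    return now - RETENTION <= target <= now
```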

Wednesday 27 July 2022

Decoding S/4HANA Conversion

To start with, there is always a lot of confusion around S/4HANA conversion projects.

Too many tasks, too many teams, and too many responsibilities. Moving away from our beloved Business Suite also sparks fear in us.

Let's explain why we should move away from ECC 6.0:

SAP Maintenance Strategy:

SAP provides mainstream maintenance for core applications of SAP Business Suite 7 software (including SAP ERP 6.0, SAP Customer Relationship Management 7.0, SAP Supply Chain Management 7.0, and SAP Supplier Relationship Management 7.0 applications and SAP Business Suite powered by SAP HANA®) on the respective latest three Enhancement Packages (EhPs) until December 31, 2027.

Saturday 23 July 2022

XaaS Digital Assets with SAP S/4HANA Public Cloud

In this blog, let us go into more detail on the various possible business models and corresponding pricing models for XaaS Digital Assets. Our focus is the end-to-end (E2E) business process for subscription-based products in SAP S/4HANA Public Cloud, with out-of-the-box integration with the SAP Subscription Billing and Entitlement Management solutions.

Let us imagine a digital assets software company whose portfolio includes various software products with different pricing models, such as:

◉ Fixed Recurring Charge

◉ Tier Based Pricing

◉ Volume/Usage Based Pricing
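The three pricing models differ mainly in how a charge is computed from a quantity. A minimal Python sketch follows, under stated assumptions: "tier-based" is read as graduated pricing (each unit billed at the rate of the tier it falls in) and "volume-based" as all units billed at the rate of the tier the total quantity reaches. Vendors use these terms differently, and the tier boundaries and prices below are hypothetical.

```python
from decimal import Decimal
from typing import List, Optional, Tuple

# Each tier: (inclusive upper bound, or None for unbounded; unit price).
Tiers = List[Tuple[Optional[int], Decimal]]


def fixed_recurring(fee_per_period: Decimal, periods: int) -> Decimal:
    """Same fee every billing period, regardless of usage."""
    return fee_per_period * periods


def tiered_charge(quantity: int, tiers: Tiers) -> Decimal:
    """Graduated: each unit is billed at the price of the tier it falls in."""
    total, lower = Decimal("0"), 0
    for upper, price in tiers:
        if quantity <= lower:
            break
        top = quantity if upper is None else min(quantity, upper)
        total += (top - lower) * price
        if upper is None:
            break
        lower = upper
    return total


def volume_charge(quantity: int, tiers: Tiers) -> Decimal:
    """Volume: all units are billed at the price of the tier the quantity reaches."""
    for upper, price in tiers:
        if upper is None or quantity <= upper:
            return quantity * price
    raise ValueError("tiers must end with an unbounded tier")
```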

Friday 22 July 2022

XSD Validation for DMEEX

There is new functionality available in DMEEX and delivered across SAP S/4HANA on-premise that allows you to define XSD (XML Schema Definition) validation for your format trees.

If the requester (e.g., a bank or other financial institution) has provided an XSD that the output file must satisfy, you can now upload the XSD file and have the output file checked against the schema as soon as it is created.

Wednesday 20 July 2022

SAP Data Warehouse Cloud bulk provisioning

As our customers adopt SAP Data Warehouse Cloud, we often need to help them set up new users for both training and productive use. This can be a significant administrative task when there are many users, spaces, connections, and shares needed for each user. NOTE: SAP provides the SAP Data Warehouse Cloud command line interface (CLI) for automating some provisioning tasks.

For a specific customer, we needed to create 30 training users, along with a space per user, multiple connections per user, and numerous shares from a common space. All of this could have been done in the SAP Data Warehouse Cloud user interface, but we wanted to go faster and, more importantly, make it repeatable.
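Repeatability usually starts with generating the provisioning definitions from parameters rather than creating objects by hand. The Python sketch below only builds a deterministic plan (user, personal space, connections, shares from the common space). The naming convention and object names are hypothetical, and it deliberately does not call the SAP Data Warehouse Cloud CLI, whose commands are not shown in the post.

```python
import json
from typing import Dict, List


def build_training_plan(n_users: int, connection_types: List[str],
                        shared_objects: List[str], common_space: str = "COMMON") -> List[Dict]:
    """One entry per training user: the user, a personal space, its connections and shares."""
    plan = []
    for i in range(1, n_users + 1):
        user = f"TRAIN{i:02d}"          # hypothetical naming convention
        space = f"SPACE_{user}"
        plan.append({
            "user": user,
            "space": space,
            "connections": [f"{c}_{user}" for c in connection_types],
            "shares": [{"from": common_space, "object": o, "to": space}
                       for o in shared_objects],
        })
    return plan


if __name__ == "__main__":
    # Print the first entry; the full plan could be written to a file and reviewed.
    print(json.dumps(build_training_plan(30, ["HANA", "S4"], ["SALES_VIEW"])[0], indent=2))
```

Because the plan is a pure function of its inputs, running it twice yields the same definitions, which is what makes a rerun safe to compare against what already exists.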

Monday 18 July 2022

How can SAP applications support the New Product Development & Introduction (NPDI) Process?

In this blog, you will get an overview of how SAP applications can support the New Product Development and Introduction (NPDI) process in discrete and process industries.

Introduction of NPDI Process:

NPDI stands for “New Product Development and Introduction” and is the complete process of bringing a new product to the customer/market. New product development is described in the literature as the transformation of a market opportunity into a product available for sale, and the product can be tangible (that is, something physical you can touch) or intangible (like a service, experience, or belief).

Friday 15 July 2022

SAP AppGyver – Handling Image : Loading and displaying data

This article is a continuation of the previous one and assumes the environment created there, so please refer to that article first if you have not yet done so.

This time, I will explain how to display the image data stored in the BLOB type column of HANA Cloud using the SAP AppGyver application.

Additional development to the AppGyver application

Add a page

In this case, I would like to create a function that displays a list of image IDs and shows the corresponding image when one is tapped. I will add a separate page for this function, although it could also be created on the same page.
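A common way to show binary image data in an app UI is to turn the BLOB bytes into a base64 data URI that an image component can use as its source. The post does not say exactly how its service returns the data, so the Python sketch below is only an illustration of that general technique; the MIME type is guessed from the file's leading "magic bytes" for a few common formats.

```python
import base64

# Leading "magic bytes" of a few common image formats.
_SIGNATURES = (
    (b"\x89PNG\r\n\x1a\n", "image/png"),
    (b"\xff\xd8\xff", "image/jpeg"),
    (b"GIF87a", "image/gif"),
    (b"GIF89a", "image/gif"),
)


def guess_mime(blob: bytes) -> str:
    """Guess an image MIME type from its first bytes; fall back to a generic type."""
    for signature, mime in _SIGNATURES:
        if blob.startswith(signature):
            return mime
    return "application/octet-stream"


def blob_to_data_uri(blob: bytes) -> str:
    """Encode BLOB bytes as a data URI, e.g. 'data:image/png;base64,...'."""
    encoded = base64.b64encode(blob).decode("ascii")
    return f"data:{guess_mime(blob)};base64,{encoded}"
```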

Wednesday 13 July 2022

Monday 11 July 2022

Difference between Role, Authorization Object/s, and Profile

As a Functional Consultant, one may wonder what a Role is and how different it is from the Authorization Object and Profile. While it is mostly the job of the Security team to assign the required Role for a user, it is also the Functional Consultant’s responsibility to provide inputs about the required Transactions, restrictions within a Transaction, and how these restrictions should vary depending on the user.

Let’s begin this blog by defining what a user is. In simple terms, we can log in with a username and password only if our user has already been created in the system. In SAP, transaction code SU01 is used to create a user. With this transaction code, users can be created, modified, deleted, locked, unlocked, and copied to create new ones. Typically, in a project, user creation has certain prerequisites. First, the user or the responsible manager requests the user by filling in the access form with all the required details. This is followed by one or two stages of approval and, finally, the creation of the user by the Security team.
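The relationship between user, role, and authorization object can be modelled as nested data. The Python sketch below is a deliberately simplified conceptual model, not SAP's AUTHORITY-CHECK implementation: a user holds roles, a role holds authorizations (in SAP these are stored in the profile generated for the role, which the sketch folds into the role), and each authorization grants values for the fields of one authorization object, with `*` treated as a wildcard. Role and user names are hypothetical.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from typing import Dict, List


@dataclass
class Authorization:
    obj: str                          # authorization object, e.g. "S_TCODE"
    values: Dict[str, List[str]]      # field -> permitted values ("*" as wildcard)


@dataclass
class Role:
    name: str
    authorizations: List[Authorization]


@dataclass
class User:
    name: str                         # the user created in SU01
    roles: List[Role] = field(default_factory=list)


def authority_check(user: User, obj: str, checks: Dict[str, str]) -> bool:
    """True if one authorization for `obj` in the user's roles permits every checked field."""
    for role in user.roles:
        for auth in role.authorizations:
            if auth.obj == obj and all(
                any(fnmatchcase(value, pattern) for pattern in auth.values.get(fld, []))
                for fld, value in checks.items()
            ):
                return True
    return False
```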

Friday 8 July 2022

How to use Custom Analytical Queries in SAP S/4HANA Embedded Analytics?

In this blog post, you will learn step by step how to create a report in an SAP Fiori environment on operational SAP S/4HANA data. This is done using the ‘Custom Analytical Query’ SAP Fiori app. This SAP Fiori app is available as standard in SAP S/4HANA Embedded Analytics and allows users to create reports themselves, directly on the operational data. These reports can be consumed in SAP Fiori or in any other visualization application, such as SAP Analysis for Office or SAP Analytics Cloud.

How to create a Custom Analytical Query?

To create a Custom Analytical Query the following steps need to be executed:

Step 1: Start the Custom Analytical Query app

Step 2: Create a new report

Step 3: Select the fields

Step 4: Assign to rows and columns

Step 5: Add custom fields

Step 6: Add filters

Step 7: Publish

Wednesday 6 July 2022

Pass Input Parameters to CV from Parameters derived from Procedure/Scalar Function

I am writing this blog post on SAP HANA input parameters. There are some blogs on HANA input parameters (IPs), but they do not give a clear understanding. Here I give a basic example that should make the concept easy for HANA developers to understand.

Anyone who has worked on HANA for some time and developed SAP HANA calculation views (CVs) will have worked with input parameters and variables.

A Variable:

Variables are bound to columns and are used for filtering using WHERE clauses. As such, they can only contain the values available in the Columns they relate to.

Friday 1 July 2022

SAP AppGyver – Handling Image: Data Writing

Now, here is an article on SAP AppGyver.

Today I would like to explain how to handle image data. Images can be found at …. There are pros and cons to storing them in a BLOB-type column in HANA Cloud.

Assumption

In this article, I will explain how to create an application that takes a photo and stores it in a BLOB type column in HANA Cloud.
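Photos captured in an app are often handed over as base64 data URIs, whereas a BLOB column stores raw bytes. The post does not show its exact format, so the Python sketch below is an assumption-labelled illustration of the conversion step: it validates a base64 data URI and decodes it into bytes that could then be bound as a parameter in an INSERT.

```python
import base64
import binascii


def data_uri_to_blob(uri: str) -> bytes:
    """Decode 'data:<mime>;base64,<payload>' into raw bytes for a BLOB column."""
    header, sep, payload = uri.partition(",")
    if not sep or not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("expected a base64 data URI")
    try:
        return base64.b64decode(payload, validate=True)
    except binascii.Error as exc:
        raise ValueError("invalid base64 payload") from exc
```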

Wednesday 29 June 2022

Providing a solution to an agile business requirement with SAP BTP

In this blog, we will describe the process of identifying and adjusting the right pieces of SAP BTP to solve a specific customer request. The process starts with fully understanding the business needs and then translating them into different SAP BTP components, so as to address not only the current requirement but also future ones.

Business Case

A customer (mainly its business users) requested a self-service mechanism to quickly create or edit new derived, time-dependent measures. This mechanism will help them make faster and better business decisions. Two key points drove the solution: who will use it (business users) and how/where (from the reporting layer).

Monday 27 June 2022

Analyzing High User Load Scenarios in SAP HANA

If you are an ERP/NetWeaver system administrator, you will face many scenarios with high resource utilization in the HANA DB. To correct these situations, you need to analyze the root cause of the load. This blog post will help you in that analysis and in finding the exact application user who caused the load on your HANA DB.

When you get reports from your monitoring tools or users about performance issues in the system, do the following:

◉ Log in to SAP HANA Cockpit

◉ Open the Database (usually tenant) which is affected by the issue.

◉ Go to CPU Usage -> Analyze Workloads
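Once thread samples have been exported (for example from HANA's thread-sample monitoring view M_SERVICE_THREAD_SAMPLES; check the exact column names in your release), finding the application user behind the load is essentially a count per user. The Python sketch below assumes the rows are already fetched into dictionaries with hypothetical keys `application_user` and `thread_state`.

```python
from collections import Counter
from typing import Dict, Iterable, List, Optional, Tuple


def top_application_users(samples: Iterable[Dict[str, Optional[str]]],
                          n: int = 3) -> List[Tuple[str, int]]:
    """Rank application users by the number of 'Running' thread samples they account for."""
    counts = Counter(
        (s.get("application_user") or "<unknown>")
        for s in samples
        if s.get("thread_state") == "Running"
    )
    return counts.most_common(n)
```

Counting samples rather than summing durations works because each sample represents a fixed slice of time, so more samples means more time spent.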

Friday 24 June 2022

SAP PaPM Cloud: Downloading Output Data Efficiently

Let’s say that as a Modeler, you have successfully uploaded your data into SAP Profitability and Performance Management Cloud (SAP PaPM Cloud) and utilized SAP PaPM Cloud’s extensive modeling functions for enrichment and calculation. And as a result of your Modeling efforts, you now have the desired output that you would like to download from the solution. The question is: Depending on the number of records, what would be the most efficient way to do this?

To keep it simple, I’ll use ranges of record counts to distinguish small from large output data. Each of these two sections contains a step-by-step procedure for downloading results generated in SAP PaPM Cloud in what, based on my experience, is the most efficient way.

Friday 10 June 2022

SAP Tech Bytes: CF app to upload CSV files into HANA database in SAP HANA Cloud

Prerequisites

◉ SAP BTP Trial account with SAP HANA Database created and running in SAP HANA Cloud

◉ cf command-line tool (CLI)

If you are not familiar with deploying Python applications to the SAP BTP, Cloud Foundry environment, please work through the Create a Python Application via Cloud Foundry Command Line Interface tutorial first.

I won’t repeat the steps from there, such as how to log on to your SAP BTP account using the cf CLI, but I will cover the extras we are going to work and experiment with here.
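The core of such an app is turning an uploaded CSV into a parameterized INSERT. A minimal Python sketch, assuming the CSV header row matches the target table's column names (upper-cased here, a hypothetical convention) and using the `hdbcli` driver's `dbapi.connect` and `executemany` for the database step; the table name and connection details are placeholders.

```python
import csv
import io
import re
from typing import List, Tuple

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def prepare_insert(table: str, csv_text: str) -> Tuple[str, List[tuple]]:
    """Return a parameterized INSERT statement and the CSV data rows."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    for name in [table, *header]:
        if not _IDENT.match(name):      # identifiers cannot be bound as parameters
            raise ValueError(f"unsafe identifier: {name!r}")
    cols = ", ".join(f'"{c.upper()}"' for c in header)
    marks = ", ".join("?" for _ in header)
    sql = f'INSERT INTO "{table.upper()}" ({cols}) VALUES ({marks})'
    rows = [tuple(r) for r in reader if r]  # skip blank lines
    return sql, rows


def upload(sql: str, rows: List[tuple], address: str, port: int, user: str, password: str) -> None:
    from hdbcli import dbapi  # SAP HANA Python client (pip install hdbcli)
    conn = dbapi.connect(address=address, port=port, user=user, password=password, encrypt=True)
    try:
        cursor = conn.cursor()
        cursor.executemany(sql, rows)
        conn.commit()
    finally:
        conn.close()
```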

Thursday 9 June 2022

SAP HANA On-Premise SDA remote source in SAP HANA Cloud using Cloud Connector

SAP Cloud Connector serves as a link between SAP BTP applications and on-premise systems. It runs as an on-premise agent in a secured network and provides control over which on-premise systems and resources can be accessed by cloud applications.

In this blog, you will learn how to enable the Cloud Connector for an SAP HANA Cloud instance, how to install and configure the Cloud Connector, and how to connect an SAP HANA on-premise database to SAP HANA Cloud using an SDA remote source.

Wednesday 8 June 2022

How to deal with imported Input Data’s NULL values and consume it in SAP PaPM Cloud

Hello there! I will not bother you with some enticing introduction anymore and get straight to the point. If you are:

(a) Directed here because of my previous blog post SAP PaPM Cloud: Uploading Input Data Efficiently; or

(b) Redirected here because of a quick Google search result or what not…

Either way, you are curious about how a user can work with a HANA table that contains NULL values after data import and consume it in SAP Profitability and Performance Management Cloud (SAP PaPM Cloud). I've got you covered with this blog post.
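One generic way to make NULLs consumable downstream is to replace them with explicit defaults, the same idea as SQL `COALESCE(column, default)`. The post's own approach inside SAP PaPM Cloud is not shown here, so treat this Python sketch as an illustration of the technique only; column names and defaults are hypothetical.

```python
from typing import Any, Dict, List


def fill_nulls(rows: List[Dict[str, Any]], defaults: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Replace None in the listed columns with a default, like COALESCE(col, default)."""
    return [
        {col: defaults[col] if val is None and col in defaults else val
         for col, val in row.items()}
        for row in rows
    ]
```

Columns without a configured default keep their NULLs, so the choice of which columns to fill stays explicit.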

Monday 6 June 2022

Exception Aggregation in SAP SAC, BW/BI and HANA: A Practical approach

Monday 30 May 2022

Transforming Hierarchy using HANA Calculation view

Introduction:

This blog post covers the use of two powerful nodes in HANA calculation views: the Hierarchy Function node and the Minus node. Both nodes are available in SAP HANA 2.0 XSA and SAP HANA Cloud.

On premise, the Minus and Hierarchy Function nodes are available starting with SAP HANA 2.0 SPS01 and SPS03, respectively; both are also available in SAP HANA Cloud.

This use case is helpful in business scenarios where one wants to migrate from SAP BW 7.x to SAP HANA 2.0 XSA or SAP HANA Cloud. SAP BW is known for its data warehousing and strong reporting capabilities, but when migrating from SAP BW to SAP HANA 2.0 or SAP HANA Cloud, some of these features are not available out of the box. In this case, HANA Cloud serves as the backend for data processing and modelling, and Analysis for Office is used for reporting.
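To make the set semantics of a Minus node concrete, here is a plain-Python sketch of "rows of the first input that do not appear in the second". It assumes SQL EXCEPT semantics, where duplicates are removed from the result; confirm in your HANA version whether the node behaves the same way for duplicate rows. The sample rows are hypothetical.

```python
from typing import Hashable, Iterable, List


def minus(left: Iterable[Hashable], right: Iterable[Hashable]) -> List[Hashable]:
    """Distinct rows of `left` not present in `right`, in first-seen order (EXCEPT semantics)."""
    exclude = set(right)
    seen = set()
    result = []
    for row in left:
        if row not in exclude and row not in seen:
            seen.add(row)
            result.append(row)
    return result
```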

Saturday 28 May 2022

Extending business processes with product footprints using the Key User Extensibility in SAP S/4HANA

With SAP Product Footprint Management, SAP provides a solution, giving customers transparency on their product footprints, as well as visibility into the end-to-end business processes and supply chains.

SAP S/4HANA comes with the Key User Extensibility concept, which is available in both the cloud and on-premise versions.

Key User Extensibility, together with product footprints calculated in SAP Product Footprint Management, enables customers to enrich end-to-end business processes with sustainability information, helping to implement the “green line” in the sustainable enterprise. With Key User Extensibility, this can be achieved immediately, as the extension of the business processes can be introduced right away, by customers and partners, during an implementation project.

Friday 27 May 2022

Configuration of Fiori User/Web Assistant with/without Web Dispatcher for S/4 HANA On-Premise System

Overview –

The Web Assistant provides context-sensitive in-app help and is an essential part of the user experience in SAP cloud applications. It displays as an overlay on top of the current application.

You can use the Web Assistant to provide two forms of in-app help in SAP Fiori apps:

◉ Context help: Context-sensitive help for specific UI elements.

◉ Guided tours: Step-by-step assistance to lead users through a process.

Wednesday 25 May 2022

Migration Cockpit App Step by Step

The Migration Cockpit is an S/4HANA app that replaces LTMC as of version 2020 (on-premise).

This powerful data migration tool is included in the S/4HANA license and delivers preconfigured content with automated mapping between source and target. This means that if your needs match the available migration objects, you do not have to build a tool from scratch: everything is ready to use, which reduces the effort for your data load team.

Monday 23 May 2022

LO Data source enhancement using SAPI

In this blog, we will discuss LO DataSource enhancement using SAPI. The scenario is the same.

But before moving on to the implementation, I want to discuss the enhancement framework architecture, shown below:

Sunday 22 May 2022

SAP BW4HANA DS connection (MS SQL DB) Source system via SDA

Introduction:

As you are all aware by now, you cannot connect DS directly to an SAP BW4HANA system. Hence, you need to connect the DS database to the HANA database via SDA and create a source system.

Based on the customer requirements, we set up a HANA DB connection to the MS SQL DB and then set up the source system.

DISCLAIMER

The content of this blog post is provided “AS IS”. This information could contain technical inaccuracies, typographical errors, and out-of-date information. This document may be updated or changed without notice at any time. Use of the information is therefore at your own risk. In no event shall SAP be liable for special, indirect, incidental, or consequential damages resulting from or related to the use of this document.

Friday 20 May 2022

Data driven engineering change process drives Industry 4.0

We are “Bandleaders for the Process.” Our mission is to orchestrate plant operation, with leadership across the manufacturing value chain. This reminds me of my job in the plant 20 years ago.

One important mission was to manage engineering change; it required a lot of time and attention to plan, direct, control and track all the activities across the team with multiple files and paper documents:

◉ What is the impact of change?

◉ When will the new parts come from the suppliers? How many old parts do we have in stock?

◉ Which production order should be changed? What is the status of production orders?

◉ Are new tools ready? Have all of the build package documents been revised?

Wednesday 18 May 2022

Rise with SAP: Tenancy Models with SAP Cloud Services

Introduction

When transitioning to Rise with SAP cloud services, SAP customers can choose either a single-tenant or a multi-tenant landscape. The choice of tenancy model largely depends on the evaluation of risk, type of industry, classification of data, security, and sectoral and data privacy regulations. Other considerations include performance, reliability, shared security governance, migration, cost, and connectivity. While customer data is always isolated and segregated between tenants, the level of isolation is a paramount consideration in choosing a tenancy model.

In this blog, we will cover the tenancy models available under Rise with SAP cloud services and explore their nuanced differences and some of the considerations for choosing each of them.