Introduction

SAP HANA provides and supports various capabilities for data integration and data provisioning to realize lots of different integration scenarios. However, there are also certain scenarios in which you just want to load data from local flat files once, without putting effort in setting up a proper data integration landscape.

As part of my daily work with SAP HANA, this requirement usually pops up when working on demos, proof of concepts or performance/load tests. In such cases, I sometimes receive large flat files with the data I need to load into SAP HANA.

This task is rather easy for on-premise installations, when you have access to the operating system, but can be way more tricky to what is sounds when working with SAP HANA on SAP Cloud Platform (e.g. SAP HANA Cloud or SAP HANA service). In such cases, we cannot put the flat file on the file system of the SAP HANA instance. So we need alternative ways how to get the data into the database.

Digression: Three different ways how to insert data from flat files into SAP HANA

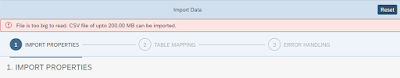

The SAP HANA database explorer provides a way to import flat files directly from your browser. However, this approach is limited with regards to the file size limit of 200 MB

I found myself a good rule of thumb to decide on the way how to upload data from flat files:

(1) Small flat files (up to max. <10-20 MB)

When the import of the data is connected to modelling tasks in SAP Web IDE and the file is rather small, a possible way to import the file is to place it directly into the design-time definition of the HDI container and define the import via an .hdbtabledata HDI artefact.

Table Data (.hdbtabledata)

This approach only supports .csv files.

Keep attention when working with Git. The most Git repositories have file size and/or repository limits. Besides that, it is neither a good practice to check in large files in a Git repository, nor to put it into a project in SAP Web IDE.

(2) Medium flat files (< 200 MB)

For small and medium sized files (smaller than 200 MB) the capabilities of SAP HANA database explorer can be leveraged to import the data via the browser after the data model has been set up.

Again, this approach only supports .csv files.

(3) Large flat files (> 200MB, up to multiple GB)

For such files I found myself a convenient way to import the data using Python. With the way described in the blog post there is no need to think about the necessary INSERT statements for the database. This happens automatically, because Pandas works nicely together with SQLAlchemy. Furthermore, this approach allows to process large files in chunks without loading the entire file into memory.

In addition to that, Python supports multiple (flat) file formats that can be used to read data into Pandas dataframes.

Follow along the blog post to learn more about option 3.

Using Python, Pandas and SQLAlchemy for flat file imports to SAP HANA

Example environment

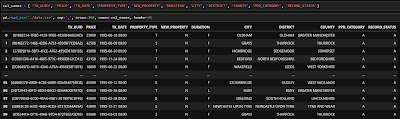

I am using the following dataset from Kaggle for the example in the blog post – it’s just a random pick: https://www.kaggle.com/hm-land-registry/uk-housing-prices-paid

The SAP HANA instance I am using is an SAP HANA service instance on SAP Cloud Platform, but it works the same way with SAP HANA Cloud or an on-premise installation. As a preparatory step, I created a service instance from type “schema” and manually created the following table.

Note that I did not use the same column names like in the CSV.

CREATE COLUMN TABLE UK_HOUSE (

TX_UUID NVARCHAR(38),

PRICE INTEGER,

TX_DATE DATE,

PROPERTY_TYPE NVARCHAR(1),

NEW_PROPERTY NVARCHAR(1),

DURATION NVARCHAR(1),

CITY NVARCHAR(30),

DISTRICT NVARCHAR(40),

COUNTY NVARCHAR(30),

PPD_CATEGORY NVARCHAR(1),

RECORD_STATUS NVARCHAR(1)

);

Python package requirements

The following Python packages are required to perform the task described in the blog post. The snippet is compliant with the syntax to declare requirement.txt, so the requirements can be either installed from a requirements.txt or manually via pip.

hdbcli>=2.5.101

pandas>=1.1.1

python-dotenv>=0.14.0

sqlalchemy-hana>=0.5.0

Providing the database credentials

I generally do not like to put credentials into the source code. That is why I create a .env file at the location of my IPython Notebook. By using the package python-dotenv, I can load this .env file later and access the credentials from the environment variables.

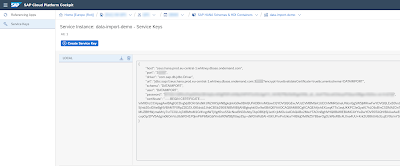

I obtain the credentials for the SAP HANA schema by manually creating service key for the service instance, which I created earlier in SAP Cloud Platform cockpit.

Please note that the certificate is required when working with SAP HANA service or SAP HANA Cloud.

The example assumes that the SAP HANA instance is whitelisted for all IPs. In case you do not allow traffic from all IP addresses, you might need to set up an ssh tunnel to an application on SAP Cloud Platform and proxy the requests via the tunnel.

The code snippets provided in the blog post require the .env to look like the following:

HANA_ADDRESS="<<The URL>>"

HANA_PORT="<<The port>>"

HANA_USER="<<The DB user>>"

HANA_PW="<<The DB password>>"

HANA_CERT="<<The certificate string>>"

Python imports

I declare the following imports within my Jupyter Notebook to load the required Python packages.

import pandas as pd

import os

from hdbcli import dbapi

from dotenv import load_dotenv

from sqlalchemy import create_engine

#Loading the .env file into the environment variables

load_dotenv()

Create an SQLAlchemy engine

Next, I create an SQLAlchemy engine for SAP HANA. Because will be towards SAP HANA in the cloud, I need to pass additional connect_args to take care of the encryption and certificates.

engine = create_engine(f'hana://{os.getenv("HANA_USER")}:{os.getenv("HANA_PW")}@{os.getenv("HANA_ADDRESS")}:{os.getenv("HANA_PORT")}', connect_args={

'sslTrustStore' : os.getenv("HANA_CERT"),

'encrypt' : 'true',

'sslHostNameInCertificate' : '*'

}}

Read and insert the data

Now it is time to touch the actual data. Depending on the file size and/or the number of rows there are two possible ways to proceed:

1. Load the data at once into Python and at once into SAP HANA

2. Load the data in chunks of individual size into Python and insert the data chunk by chunk (example in this post)

I personally prefer option 2 for several reasons:

◉ Loading a really large file (possibly larger than the main memory) at once will unnecessarily slow down the machine

◉ Processing the file in chunks allows easier error handling

◉ The data import can be theoretically paused/aborted and continued as each chunk is individually committed to the database

I previously created the database table with my individual column names, so I also need to overwrite the columns names from the file in my Pandas dataframe.

col_names = [

'TX_UUID',

'PRICE',

'TX_DATE',

'PROPERTY_TYPE',

'NEW_PROPERTY',

'DURATION',

'CITY',

'DISTRICT',

'COUNTY',

'PPD_CATEGORY',

'RECORD_STATUS'

]

For validation purposes (e.g. encoding, column names, … ) I perform a quick data preview and load the first 100 rows:

If everything looks good it is time to read the data in chunks and insert the entries into our database table. I instruct Pandas to read the CSV in chunks of 500.000 entries – this can be adjusted individually and may also depend on the number of columns.

chunker = pd.read_csv('./data.csv', sep=',', chunksize=500000, header=0, names=col_names)

Reading the data in chunks allows me to process them within a loop.

The actual insert to the database is fully automatically handled by the to_sql() function of the Pandas dataframe. It uses the SQLAlchemy dialect behind the scenes. That is the reason why the hdbcli package alone is not enough.

It is important to set the index parameter to False within the to_sql() function. Otherwise an additional column would be expected in the target table to store the index.

In case of an exception I will store the chunk as a new CSV file together with the error message into an error folder. Especially for large CSV files, this allows me to handle the chunks containing errors separately after all chunks have been processed.

for chunk in chunker:

try:

chunk.to_sql(schema='DATAIMPORT', name='UK_HOUSE', con=engine, index=False, if_exists='append')

except Exception as e:

ts = str(datetime.datetime.now().timestamp())

if not os.path.isdir("./error/"):

os.mkdir("./error/")

chunk.to_csv(f"./error/{ts}.csv")

with open(f'./error/{ts}.log', 'w', encoding='utf-8') as f:

f.write(str(e))

f.close()

You may have noticed that I created a DATE column in my database table but did not explicitly instruct Pandas to read the column as a datetime column. This work well as long as the string containing the date format can be casted by SAP HANA.

Error handling for failed chunks

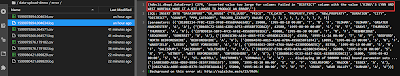

Especially for large and unseen flat files it can be the case that there are some records with unexpected values, which might not fit the table structure. Of course, I could perform data validation as part of the data processing, but I want to keep it simple and rather react to errors instead of acting beforehand.

Before the data was initially processed by the steps mentioned so far, I modified four different lines to generate some expected errors.

I analyze the generated errors in the .log files and see that there are some values that are too large for the specified columns in the target table. My chosen method how to deal with the errors is simply to adjust the target table (does not really makes sense in this example, but it’s just an example):

ALTER TABLE UK_HOUSE ALTER (DISTRICT NVARCHAR(200), COUNTY NVARCHAR(200));

Afterwards, I can run the following piece of code to only reprocess chunks that failed before. It will only consider the .csv files in the ./error/ folder, which do not start with an underscore. If a chunk is processed successfully, I will rename the file to start with an underscore (you can also remove it instead of renaming it)

In case the processing of a chunk fails again (there might be an additional error in the same chunk) I can rerun this code snippet until I only have files beginning with an underscore.

folder = "./error/"

files = [f for f in os.listdir(folder) if os.path.isfile(os.path.join(folder, f)) and f[0] != '_' and f.endswith(".csv")]

for f in files:

df = pd.read_csv(folder+f, sep=',', index_col=0)

df.to_sql(schema='DATAIMPORT', name='UK_HOUSE', con=engine, index=False, if_exists='append')

os.rename(folder+f, folder+"_"+f)

os.rename(folder+f.replace(".csv",".log"),folder+"_"+f.replace(".csv",".log"))

Additional information on database table generation

It is also possible to skip the step of creating the database table manually beforehand. The to_sql() function will also generate a table if it does not exist. However, the datatypes are not optimal set when using this approach and columns with characters are always generated as LOB. It is possible to define the datatypes in the to_sql() function. Please refer to the official documentation in this case.

No comments:

Post a Comment