This article walks through the complete steps for optimizing SAP HANA SQL statements.

What is an expensive SQL statement?

SQL statements consuming significant resources are called expensive SQL statements.

SQL statements that are not executed efficiently can cause local and system-wide problems. The most critical areas are the following:

◉ A long runtime can delay business activities

◉ High CPU consumption can lead to system-wide CPU bottlenecks

◉ High memory consumption can cause out-of-memory situations and performance penalties due to tables being unloaded from memory

Identification of Critical SQL Statements:

A key step in identifying the source of poor performance is to understand how much time is spent in the SAP HANA engine for query execution. By analyzing SQL statements and calculating their response times, you can better understand how the statements affect application and system performance. Two groups of statements are relevant:

◉ SQL statements that have caused problems in the past

◉ SQL statements that are currently causing problems

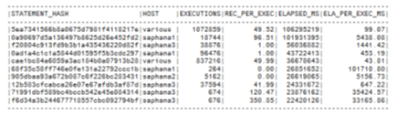

To determine SQL statements with a particularly high runtime, you can check for the top SQL statements in terms of TOTAL_EXECUTION_TIME in the SQL plan cache in SAP HANA Studio.

For example:

To determine the top SQL statements that were executed during a specific time frame in the past, you can check the SQL plan cache history (HOST_SQL_PLAN_CACHE). The SQL statement “HANA_SQL_SQLCache_History” (SAP Note 1969700) can be used for this: specify a proper BEGIN_TIME / END_TIME interval and typically use ORDER_BY = ‘ELAPSED’, so that the SQL statements with the highest elapsed time are returned.

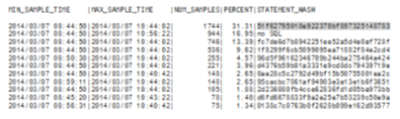

The thread sample history (tables M_SERVICE_THREAD_SAMPLES, HOST_SERVICE_THREAD_SAMPLES), if available, can also be used to determine the top SQL statements. You can use the SQL statement: “HANA_Threads_ThreadSamples_FilterAndAggregation” available from SAP Note 1969700. You have to specify a proper BEGIN_TIME / END_TIME interval and use AGGREGATE_BY = ‘STATEMENT_HASH’ to identify the top SQL statements during the time frame.

In this example, the SQL statement with hash 51f62795010e922370bf897325148783 is executed most often, so the analysis should start with it. Often you also need to look at further SQL statements, for example those related to the next statement hashes, fc7de6d7b8942251ee52a5d4e0af728f and 1f8299f6cb5099095ea71882f84e2cd4.
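The aggregation by statement hash can be illustrated outside the database. The following is a minimal sketch, assuming the thread samples have already been exported as (timestamp, statement hash) pairs; the function name, the sample timestamps and the tuple layout are hypothetical and only illustrate the idea of counting samples per hash within a time window.

```python
from collections import Counter
from datetime import datetime


def top_statement_hashes(samples, begin_time, end_time, limit=3):
    """Count thread samples per statement hash inside [begin_time, end_time]."""
    counts = Counter(
        stmt_hash
        for ts, stmt_hash in samples
        # Skip samples without a statement hash and samples outside the window.
        if stmt_hash and begin_time <= ts <= end_time
    )
    return counts.most_common(limit)


# Hypothetical exported samples: (sample timestamp, statement hash).
samples = [
    (datetime(2024, 1, 1, 10, 0, 1), "51f62795010e922370bf897325148783"),
    (datetime(2024, 1, 1, 10, 0, 2), "51f62795010e922370bf897325148783"),
    (datetime(2024, 1, 1, 10, 0, 3), "fc7de6d7b8942251ee52a5d4e0af728f"),
    (datetime(2024, 1, 1, 10, 0, 4), "51f62795010e922370bf897325148783"),
    (datetime(2024, 1, 1, 10, 0, 5), "fc7de6d7b8942251ee52a5d4e0af728f"),
    (datetime(2024, 1, 1, 10, 0, 6), "1f8299f6cb5099095ea71882f84e2cd4"),
    (datetime(2024, 1, 1, 10, 0, 7), ""),  # thread not executing SQL
    (datetime(2024, 1, 1, 12, 0, 0), "1f8299f6cb5099095ea71882f84e2cd4"),  # outside window
]

begin = datetime(2024, 1, 1, 10, 0, 0)
end = datetime(2024, 1, 1, 11, 0, 0)
ranking = top_statement_hashes(samples, begin, end)
for stmt_hash, sample_count in ranking:
    print(stmt_hash, sample_count)
```

The hash with the most samples in the window comes first in the ranking and is the natural starting point for the analysis.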

In cases where the M_SERVICE_THREAD_SAMPLES / HOST_SERVICE_THREAD_SAMPLES information is not usable, you can use the thrloop.sh script to regularly collect thread samples, as described in SAP Note 1989031.

In case of an out-of-memory (OOM) situation, you can determine potentially responsible SQL statements by analyzing the OOM dump file(s), as described in SAP Note 1984422.

SAP HANA Alert 39 (“Long running statements”) reports long-running SQL statements and records them in the table _SYS_STATISTICS.HOST_LONG_RUNNING_STATEMENTS. Check the contents of this table to determine details of the SQL statements that caused the alert.

SQL Plan Cache Analysis:

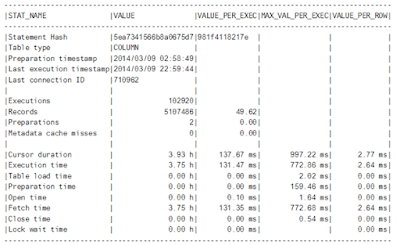

The SAP HANA SQL Plan Cache can be evaluated in detail for a particular statement hash.

The SQL statement “HANA_SQL_StatementHash_KeyFigures”, available in SAP Note 1969700, can be used to check the SQL plan cache details of a specific SQL statement (the related STATEMENT_HASH has to be specified as an input parameter).

The historic execution details for a particular SQL statement can be determined with the SQL statement “HANA_SQL_StatementHash_SQLCache_History”, also included in SAP Note 1969700. Here, too, the appropriate STATEMENT_HASH has to be specified as an input parameter.

Results:

Based on the results of this evaluation you can distinguish the following situations:

◉ If the value for Executions is unexpectedly high, further analysis should be done on the application side in order to check if it is possible to reduce the number of executions.

◉ If the value for Records is unexpectedly high, further analysis should be done on the application side in order to check if it is possible to reduce the number of selected records.

◉ If the value for Cursor duration is very high and at the same time significantly higher than the value for Execution time, you have to check which processing steps are executed on the application side between the individual fetches. A high value for Cursor duration can negatively impact the database in general because open changes may impact the MVCC mechanism.

◉ If the value for Preparation time is responsible for a significant part of the Execution time value, you have to focus on optimizing the parsing (for example, sufficient SQL plan cache size, reuse of already parsed SQL statements).

◉ If Execution time is much higher than expected (which can be judged from the statement complexity and the number of processed rows), the SQL statement has to be checked in more detail on the technical layer to understand the reasons for the high runtime.
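The decision rules above can be written down as a small checklist function. This is only a sketch: the dictionary keys, the `factor` threshold for "unexpectedly high" and the `prep_share` threshold for "a significant part" are assumptions made for illustration, not values defined by SAP.

```python
def diagnose(fig, expected, factor=2.0, prep_share=0.3):
    """Map SQL plan cache key figures to the follow-up actions listed above.

    fig and expected are plain dicts; factor and prep_share are
    assumed thresholds and should be tuned to the system at hand.
    """
    findings = []
    if fig["executions"] > factor * expected["executions"]:
        findings.append("reduce number of executions (application side)")
    if fig["records"] > factor * expected["records"]:
        findings.append("reduce number of selected records (application side)")
    if fig["cursor_duration_ms"] > factor * fig["execution_time_ms"]:
        findings.append("check application processing between fetches")
    if fig["preparation_time_ms"] >= prep_share * fig["execution_time_ms"]:
        findings.append("optimize parsing (plan cache size, statement reuse)")
    if fig["execution_time_ms"] > factor * expected["execution_time_ms"]:
        findings.append("analyze the statement on the technical layer")
    return findings


# Hypothetical key figures for one statement hash.
key_figures = {
    "executions": 120000,
    "records": 500,
    "cursor_duration_ms": 9000,
    "execution_time_ms": 1200,
    "preparation_time_ms": 100,
}
expected = {"executions": 1000, "records": 1000, "execution_time_ms": 1000}

findings = diagnose(key_figures, expected)
for finding in findings:
    print(finding)
```

With these made-up numbers, the statement is flagged for its execution count and for its cursor duration, while the other checks stay below the assumed thresholds.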

Detailed Statement Analysis:

When you have identified a critical SQL statement and determined its overall key figures from the SQL plan cache analysis, you can have a closer look at the actual runtime behavior of the SQL statement. The following tools can be used:

◉ Plan Explanation

- Creation of an execution plan

◉ Plan Visualizer

- Detailed graphical execution plan

- Temporal breakdown (timeline) available

◉ QO Trace

- Query optimizer trace

- Advanced tool that can be useful to understand the decisions of the query optimizer

- Particularly helpful to understand column searches

◉ JE Trace

- Join evaluation trace

- Advanced tool to analyze table join operations

◉ Performance trace

- Low level recording of key performance indicators for individual SQL statement processing steps

Optimization of Critical SQL Statements:

◉ Optimize outlier queries

Queries that sometimes take much longer than expected can be caused by query-external factors (for example, resource bottlenecks) that have to be determined and eliminated.

◉ Check data manipulation commands (DML)

DML operations like INSERT, UPDATE and DELETE can be impacted by lock waits.

◉ Improve SQL query design

The performance of your SQL queries can be improved significantly by knowing how the SAP HANA database and SAP HANA engines process queries and adapting the queries accordingly.

◉ Create indexes for any non-primary key columns that are often queried.

SAP HANA automatically creates indexes for primary key columns; however, if you need indexes for non-primary key columns, you must create them manually.

◉ Create virtual star schemas on SAP HANA data by joining attribute views to tables that contain measures within the definition of an analytic view

By using analytic views, SAP HANA can automatically recognize star queries and enable the performance benefits of using star schemas, such as reducing dependencies for query processing.

◉ Develop procedures to embed data-intensive application logic in the database

With procedures, no large data transfers to the application are required and you can use performance-enhancing features such as parallel execution. Procedures are used when other modeling objects, such as analytic or attribute views, are not sufficient.

1. If you use SQLScript to create procedures, follow the best practices for using SQLScript.

2. For statistical computing, create procedures using the open source language R.

◉ Download and install the available application function libraries, such as Predictive Analysis Library (PAL) and Business Function Library (BFL).

You can see that during the period in the red rectangle both CPU consumption and SQL throughput decreased. During that time frame you would look for something that consumed a lot of resources or blocked the statements (locking); just after 15:35 you see that the CPU consumption increases while the SQL throughput decreases. Here, a possible case would be a change in usage: instead of many small, fast SQL statements the workload changed to a few “heavy” (complicated calculation requiring many CPU cycles) SQL statements.

If there was a high statement load in the same period in which you experienced the slow execution, the root cause is likely a lack of resources. To resolve the situation, consider restricting the number of users on SAP HANA, upgrading the hardware, or contacting SAP Support to check whether scalability can be improved in this case.

If you did not experience a high statement load during the time frame of the problem, check for background activities:

◉ Delta Merges: Use the monitoring view M_DELTA_MERGE_STATISTICS to check whether delta merges happened. If so, try to improve the delta merge strategy to prevent merges from happening during phases in which users would be disturbed.

◉ Column Unloads: Check the monitoring view M_CS_UNLOADS for signs of column unloads. If a column used in the problematic statement had to be loaded before execution, the execution itself will take significantly longer.

◉ Savepoints: Savepoints consume resources and write-lock the database during their critical phase. Check M_SAVEPOINTS and look for savepoints during the time frame of the problem. If a savepoint slowed down your execution, the chance of having the same problem again is very low. If this is happening very often, the service team will engage accordingly.
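Each of these checks comes down to asking whether a background activity overlapped the time frame of the problem. The sketch below assumes the relevant rows have already been read from the monitoring views into (kind, start, end) tuples; the tuple layout, the function name and the timestamps are hypothetical.

```python
from datetime import datetime


def overlapping_activities(activities, problem_start, problem_end):
    """Return activities whose [start, end] interval overlaps the problem window."""
    return [
        (kind, start, end)
        for kind, start, end in activities
        # Two closed intervals overlap if each one starts before the other ends.
        if start <= problem_end and end >= problem_start
    ]


# Hypothetical rows, as they might be collected from M_DELTA_MERGE_STATISTICS,
# M_CS_UNLOADS and M_SAVEPOINTS. An unload is a point event (start == end).
activities = [
    ("delta merge", datetime(2024, 1, 1, 15, 20), datetime(2024, 1, 1, 15, 32)),
    ("column unload", datetime(2024, 1, 1, 14, 0), datetime(2024, 1, 1, 14, 0)),
    ("savepoint", datetime(2024, 1, 1, 15, 34), datetime(2024, 1, 1, 15, 35)),
]

problem_start = datetime(2024, 1, 1, 15, 30)
problem_end = datetime(2024, 1, 1, 15, 40)
suspects = overlapping_activities(activities, problem_start, problem_end)
for kind, start, end in suspects:
    print(kind, start, end)
```

In this made-up data set, the delta merge and the savepoint overlap the problem window, while the column unload happened well before it and is ruled out.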

The performance of SQL queries can be improved significantly by knowing how the SAP HANA database and SAP HANA engines process queries and adapting your queries accordingly.

As a general guideline for improving SQL query performance, SAP recommends avoiding operations that are not natively supported by the various SAP HANA engines. Such operations can significantly increase the time required to process queries. In addition, the following specific recommendations may help improve the performance of your SQL queries:

◉ Avoid calculations in queries.

◉ If two columns are frequently compared by queries, ensure the two columns have the same data type.

For columns of different types, SAP HANA uses implicit type casting to enable comparison. However, implicit type casting has a negative effect on performance. If you cannot ensure the two columns have the same type from the beginning, one of the following steps can improve performance:

- If possible, change the type of the value, as this costs less than changing the type of the column.

- Consider adding a generated column. A generated column improves query performance at the expense of increased insertion and update costs.

◉ Avoid join predicates that do not have the equal condition. Join predicates connected by OR, Cartesian products, and joins without join predicates are not natively supported.

◉ Avoid using filter predicates inside outer join predicates because they are not natively supported. You can rewrite such filter predicates into equijoin predicates using a generated column.

◉ Avoid cyclic joins because cyclic outer joins are not natively supported and the performance of cyclic inner joins is inferior to that of acyclic inner joins.

◉ Avoid using OR to connect EXISTS or NOT EXISTS predicates with other predicates.

◉ If possible, use the NOT EXISTS predicate instead of NOT IN. The NOT IN predicate is more expensive.

◉ Avoid using the UNION ALL, UNION, INTERSECT and EXCEPT set operators because they are not natively supported.

◉ For multiple columns involved in a join, create the required indexes in advance. SAP HANA automatically creates indexes to process joins; however, if the queries are issued by an update transaction or a lock conflict occurs, the indexes cannot be created, which may cause performance issues.

◉ Use the index adviser to create indexes on non-primary key columns and so enhance the performance of some queries. SAP HANA automatically creates indexes for all primary key columns.

Indexing the primary key columns is usually sufficient because queries typically put filter conditions on primary key columns. When filter conditions are on non-key fields and tables have many records, creating an index on the non-primary key columns may improve the performance.
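As an illustration of the NOT IN recommendation above, the following sketch shows how a NOT IN query can be rewritten with NOT EXISTS. It uses Python's built-in sqlite3 module purely as a stand-in so that the example runs anywhere; it is not SAP HANA, it says nothing about HANA performance, and the `customers` and `orders` tables are hypothetical. It only demonstrates that the two forms return the same rows when the subquery column contains no NULL values.

```python
import sqlite3

# In-memory SQLite database used only as a stand-in for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (10, 1), (11, 1), (12, 3);
""")

# Original form: customers without orders, expressed with NOT IN.
not_in = """
    SELECT c.name FROM customers c
    WHERE c.id NOT IN (SELECT o.customer_id FROM orders o)
    ORDER BY c.name
"""

# Rewritten form: the same question expressed with a correlated NOT EXISTS.
not_exists = """
    SELECT c.name FROM customers c
    WHERE NOT EXISTS (SELECT 1 FROM orders o WHERE o.customer_id = c.id)
    ORDER BY c.name
"""

rows_not_in = conn.execute(not_in).fetchall()
rows_not_exists = conn.execute(not_exists).fetchall()
print(rows_not_in, rows_not_exists)
```

Note that the two forms are only interchangeable when the subquery column cannot be NULL (here enforced by NOT NULL on `customer_id`); with NULL values, NOT IN returns no rows at all, so check the data model before rewriting.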
