Just putting up and writing down all the consolidated best practices and guidelines including tips for SAP HANA modeling (Majorly for version 2.0 SPS 02)

SAP HANA layered architecture (Great to have) and naming conventions

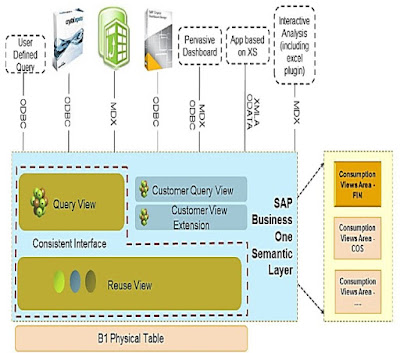

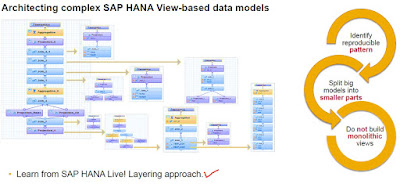

As per my view in Native HANA great to have Three (3) Layers like SAP HANA Live models

1. Base View (1 layer – Raw data 1:1 source to HANA Base layer, Global Filters)

2. Reuse View (Max 3- 4 layers view good to have – Logics, BTL, Mappings, complex)

3. Query View/Report View (1 layer – Final View – Thin layer)

P.S – Master data will be maintained as individual with Dimensions with Star model and consumed in relevant transactional HANA Models

Calculation View: CV_<Layer>_<BUSINESS_NAME> e.g. CV_BV_FSR_1

Naming Conventions – Keep the name as short as possible (preferably under 15 chars)

Name every element in CAPITAL LETTERS. Give meaningful business names

◈ CV_BV_XYZ

◈ CV_RV_XYZ1, 2, 3 (Based on the complexity layers will increase – max 5 nice to have. Possible chunk to smaller

◈ CV_QV_XYZ

Good for reconciling and support reason to analyse the issues (Easier troubleshooting)

Top 10 reasons to choose SAP HANA

SAP HANA layered architecture (Great to have) and naming conventions

As per my view in Native HANA great to have Three (3) Layers like SAP HANA Live models

2. Reuse View (Max 3- 4 layers view good to have – Logics, BTL, Mappings, complex)

3. Query View/Report View (1 layer – Final View – Thin layer)

P.S – Master data will be maintained as individual with Dimensions with Star model and consumed in relevant transactional HANA Models

Calculation View: CV_<Layer>_<BUSINESS_NAME> e.g. CV_BV_FSR_1

Naming Conventions – Keep the name as short as possible (preferably under 15 chars)

Name every element in CAPITAL LETTERS. Give meaningful business names

◈ CV_BV_XYZ

◈ CV_RV_XYZ1, 2, 3 (Based on the complexity layers will increase – max 5 nice to have. Possible chunk to smaller

◈ CV_QV_XYZ

Good for reconciling and support reason to analyse the issues (Easier troubleshooting)

SAP HANA Best practices and guidelines

Beneath mentioned few HANA best practices and guidelines are from my experience and referral from experience HANA consultants.

Always great to follow and adhere to the SAP HANA best practices and guidelines

- Use Inner join/referential/left outer joins as maximum any way it depends on the business needs, consider replacing them with UNION nodes (where possible). May vary based on a business case to case

- Specify the correct cardinality in joins (n:1 or 1:1) – only if sure

- Use table functions instead of scripted calculation views

- All views/tables are nice to be used with a projection node. Projection nodes improve performance by narrowing the data set (required columns)

- Use star join in calculated view and join calculation view (Dimension – Master data) for better performance (Star schema concept)

- Execute in SQL-engine (Semantics properties or at during custom calculations)

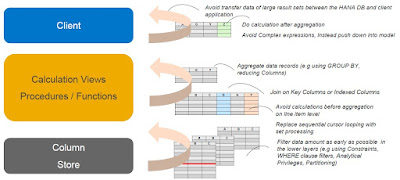

- Avoid transfer of large result sets between the SAP HANA DB and client applications

- Reduce the data set as early as possible. Use design time filters at the lowest level, this helps to reduce at least 20-30% execution time

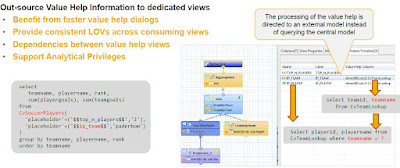

- Input Parameter (Mandatory/Optional): Placeholders part of the models can be used in the calculation. Can accept multiple values and can be derived from the table (Value help Models) or stored procedures

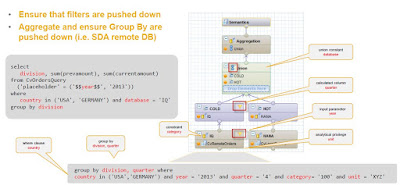

- Ensure Variables (where clause) is pushed to the lowest level. Confirm using Visualization Plan/plan cache

- Wherever possible use variables and input parameters to avoid fetching a big chunk of data

- Avoid calculated object using IF-THEN-ELSE expression, use restricted measure instead. HANA SPS 11 supports expression using SQL in Restricted column

- Avoid performing joins on calculated columns

- Proceeding, avoid script-based calculation view, WHERE clause will not be pushed down

- Using Filter is better than using Inner Join to limit the dataset

- Avoid joining columns which are having more NULL values

- Before You Begin please check for key columns for any null values at table level. The columns that are part of the joins in the HANA models should not contain any NULL values (resolve null values through ETL or SLT jobs before starting modeling)

- While using Unions make sure there will be no null values in Measure columns otherwise Union Operation will chock (do manage mappings and supply 0 values for null measures columns)

- Execute the SAP HANA models in SQL Engine (Semantics properties)

- Make use of aggregation nodes in your models for handling duplicates

- Avoid filters on the calculated column (consider materializing these columns)

- Do not create working tables in different schemas. This will create security problems on ownership. Instead of that create a separate schema and create all working tables and use it in your Modelling

- One of the best practices in HANA modeling is to define joins on columns with either INTEGER or BIGINT as data types

- Check the performance of the models during the initial development rather than doing at the final phase

- Partition the tables if they are having a huge number of records for better performance Max 2B records per table (or table partition) and max 1000 partitions per table nice to have

- Use analytical privilege latest SQL analytical privilege (SP10) to filter the data based on business requirement

- Join on Key columns and indexed columns

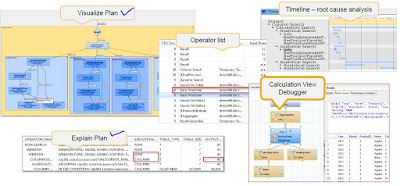

- Use execution plan & Visualization plan to analyse HANA Models performance and take necessary steps if any performance issues observed, Best way to deal with performance issues is after every step of the Modelling rather than final version of the modeling, by this good chance of overcoming memory allocation issues/CPU/memory consumption issues can be addressed during the development stage

- It is not recommended to have joined on calculated columns/fields with NVARCHAR or DECIMAL as data types, might create performance issues. Anyway, case to case it differs based on business needs, however, stick to best practices

These are some of HANA the best practices pursued by numerous SAP HANA consultants. However, still, some complex business requirements coerce us to use or alleviate from such HANA best practices, which can be ignored.

SAP HANA general performance principles

- Identify the long-running queries by reviewing the Performance tab to analyse system performance located under the Administration perspective

- Perform performance testing of HANA information models in a QA environment before hit production environment

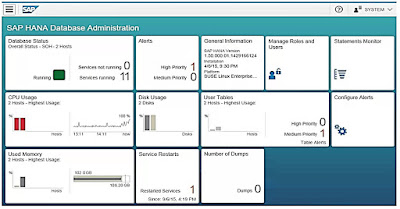

- SAP HANA cockpit is a good Admin monitor tool, make use of this tool soon to keep away from the performance bottlenecks and avoid major issues on production environment

- SAP HANA automatically handles Indexes on key columns usually enough, however, when filter conditions on Non- Key fields, please create a secondary index on non-key columns if it is necessary. Creating an index on the non-primary key columns (with high cardinality) will enhance the performance

- Non-SAP applications make use of SDA (Smart data access), no loading required, low cost, maintenance, on virtual tables business will get Realtime data insights if customer applications are greater side to non-SAP side

SAP HANA 2.0 new features for better performance (SAP HANA data modelling)

Simply setting up and writing down all the consolidated few new features for SAP HANA Modelling – Version 2 SPS03 perspective what we witnessed for better performance execution. Maybe a couple might be referenced for reference purpose, nice to adopt them while implementing SAP HANA models and later for support activities & monitoring

- Expose only required columns used in reports and hierarchies at lower data models

- Give meaningful business names for all exposed attributes in final reporting views

- In Latest version please use calculation views of type Dimension (Master Data – in place of Attribute View) and calculation Views of type with cube/star-join (Analytical Views and Calculations Views old concept – Star Schema – Fact Table surrounded by Dimensions (Attribute Views)

- Note: Table functions can be used as input sources for either dimensions or facts

- Nice to have Virtual tables (SDA), SDI: Scheduling and Executing Tasks (Flow Graphics), extended table (Dynamic Tiering) can be consumed in calculation views, so it is essential these features extended to all business cases

- SAP HANA Cockpit – offline and online (SAP HANA admin performance monitor tool)

- SAP Web IDE for SAP HANA – Full support for graphical data models (Web-based)

- Enabling SQL Script with Calculation views (outdated/retired)

- SAP HANA 2.0 use SQL Script table functions instead of script-based calculation views. Script based-calculation models can be refactored into table functions

Consuming non-In Memory data in Calculation views

Dynamic Tiering

• Provides a disk-based columnar SAP HANA database extension using SAP HANA external tables (extended tables)

Smart Data Access

• Provides a virtual access layer to outside SAP HANA data (e.g. other databases, Hadoop systems, etc.) using so-called virtual tables model and approach these scenarios carefully and monitoring query performance

• Ensure that filters and aggregation is pushed to remote sources

Calculation View Enhancements – Hierarchies (Hierarchy SQL Integration)

• Hierarchy-based SQL processing capabilities enabled via SAP HANA View-based hierarchies

Restricted Objects in advanced versions of HANA

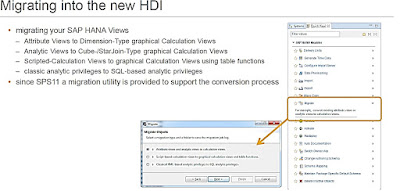

The flowing HANA artifacts should not be created by HANA modelers since they are deprecated in the higher versions and required the laborious amount of work for migrating these objects.

1. Attribute Views and Analytical Views

2. Script based calculation views

3. XML – based Classical analytical privilege

Prepare for the future migration from existing old SAP HANA information views

Security and Authorizations – Analytical privileges

Create SQL-based analytic privileges

◈ Start with general Attribute based Analytic Privilege, then switch to SQL-based

◈ Use SQL Hierarchies within SQL Analytical Privileges

SAP HANA Cockpit – offline and online – Sample dashboard (Fiori)

Few other HANA modelling area guidelines like Joins, Calculated columns, Filters, Aggregation and so on

The right place to create calculated columns in HANA models

◈ A view having calculated columns at the lower levels can be slower than a view having the same equivalent calculated columns at the highest level. However, some cases may differ based on business needs (Case by case). The greater part we have actualized in our projects at the top level (Aggregation)

◈ Calculated columns at the top level (Aggregation) for example, if a calculation such as “Sales amount – Tax amount” is done at the lowest level (for each table record entry) then it will take significantly longer to process. Another example like needs to view rating flag like sales >20,000 to be calculated on the sum of measures. All Date Calculations, Time Stamp Conversions, Time Zone Conversions must be pushed to the TOP level of the Model. Because they are the most expensive statements

◈ On the lowest level – If the calculated column is a measure like count/sales data for

◈ which discount/logic needs to be applied. (With the goal that it will not give you an unexpected value)

NOTE: Developer needs to analyze from his end, and create the calculated columns on the best place based on the requirement

Filters

◈ Apply all filters, Fixed values and the input parameter expressions at the lowest level of the views

◈ Using filters is better than Inner Join to limit the dataset in terms of performance

◈ Avoid applying filters on calculated columns

◈ Use SQL analytical Privilege to filter data, and avoid using classical analytical privilege since they are obsolete in HANA 2.0 SPS03

SAP HANA Restricted measures and logical partitioning (Example on year OR on the plant in the case to view region wise logical partition)

Aggregation

◈ When using the aggregation node, always try to use at least one of the aggregated functions like COUNT, MIN, MAX, AVG, so that the aggregation works much faster

◈ If there are some unavoidable duplicates rows getting generated from the models, then use the aggregation node immediate to the JOIN node and remove the duplicates at the very lower level itself

◈ Never use the TOP aggregation node for removing duplicates, always introduce a separate aggregation node for handling duplicates

◈ Be careful with the keep flag in aggregation node, where the results get different when this flag is been set in aggregation node (Lower level is best)

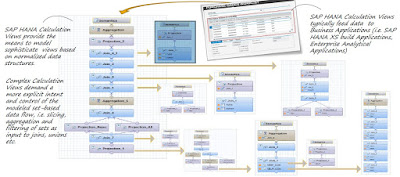

SAP HANA modeling – Normalized virtual data model scenarios

SAP HANA virtual data modeling – Key concept

SAP HANA best practice – Designing larger virtual data models using HANA views

SAP HANA General Tips and Tricks

Value help look up models

SAP HANA general tips and tricks – filter and aggregation to push down

No comments:

Post a Comment