You need to compare an APL classification or regression model to a non-APL model, and you want to make sure the evaluation process is not flawed. This blog should help you run this comparison in a fair manner; it includes code snippets you can copy and paste in your own Python notebook.

The importance of using a Hold-out dataset

When comparing the accuracy of an APL model with the accuracy of a non-APL model, it can be tempting to use the same input dataset for both model training and model testing, as shown in the following diagram, because it is quick and easy to do:

The problem with this approach is that it leads to an overestimated model accuracy, because the model is scored on rows it has already seen during training. There is a better way to evaluate whether the predictive model generalizes well to new data.

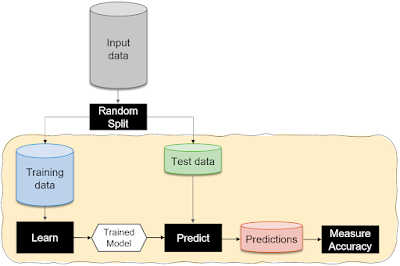

The approach taken by most ML practitioners to assess the accuracy of their classification and regression models consists of randomly splitting the data; a large chunk (e.g., 80%) is reserved for Training, and the remaining rows for Test, as represented below:

The data split operation is usually done once. When the input dataset doesn't have a lot of rows, though, you may want to repeat the split with different random seeds. Changing the random seed changes which rows go into the training bucket and which rows go into the test bucket, so you can check whether the accuracy of the predictive algorithm is stable across seeds.
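Here is a minimal sketch of that repeated-seed check. It runs locally with scikit-learn; the synthetic dataset, the list of seeds, and the choice of LogisticRegression are assumptions made for illustration, not part of the APL workflow.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic data standing in for a small input dataset (assumption)
X, y = make_classification(n_samples=500, n_features=8, random_state=0)

seeds = [11, 37, 317, 1234, 2024]  # arbitrary seeds chosen for the example
scores = []
for seed in seeds:
    # A new seed sends different rows to the training and test buckets
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed, stratify=y)
    model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
    scores.append(accuracy_score(y_test, model.predict(X_test)))

for seed, score in zip(seeds, scores):
    print(f"seed={seed:<5} accuracy={score:.3f}")
print(f"min={min(scores):.3f}  max={max(scores):.3f}")
```

A narrow gap between the minimum and the maximum suggests the measured accuracy does not depend much on which rows happened to land in the test bucket.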

Splitting the dataset does not, by itself, guarantee a sound evaluation. Below is a situation we have encountered in a project:

Although the intention was good, the implementation was incorrect due to a mistake in the Python code. The model was trained against the entire input dataset, allowing the ML algorithm to learn not only from the training data but also from the test data, which must never happen.
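The effect of that kind of mistake can be reproduced with a small local sketch. The synthetic data and the scikit-learn decision tree below are assumptions for illustration; they are not the code from the project mentioned above.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, noisy classification data (assumption)
X, y = make_classification(n_samples=1000, n_features=10, flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=37)

# Flawed: the data was split, but the model is fitted on ALL rows,
# so it has already seen every test row
leaky_model = DecisionTreeClassifier(random_state=0).fit(X, y)
leaky_acc = accuracy_score(y_test, leaky_model.predict(X_test))

# Correct: the model only learns from the training rows
honest_model = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
honest_acc = accuracy_score(y_test, honest_model.predict(X_test))

print(f"Trained on all rows (leak): {leaky_acc:.3f}")
print(f"Trained on training rows  : {honest_acc:.3f}")
```

The first figure looks better only because the test rows were part of the training data; it says nothing about how the model behaves on new data.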

APL Gradient Boosting, and each non-APL algorithm you want to compare to it, must go through the same evaluation workflow:

1. Learn from the Training dataset.

2. Make a prediction for each row in the Test dataset (hold-out).

3. Measure the accuracy of the predictions made in step 2.
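The three steps above can be wrapped in one function so that every candidate goes through exactly the same path. This is only a sketch: the helper name evaluate_on_holdout is hypothetical, and the two scikit-learn estimators are local stand-ins, not APL Gradient Boosting.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

def evaluate_on_holdout(model, X_train, y_train, X_test, y_test):
    """Hypothetical helper: same three steps for every candidate model."""
    model.fit(X_train, y_train)             # 1. learn from the Training dataset
    predictions = model.predict(X_test)     # 2. predict each hold-out row
    return accuracy_score(y_test, predictions)  # 3. measure the accuracy

# Synthetic data and a single split shared by all candidates (assumption)
X, y = make_classification(n_samples=800, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=317, stratify=y)

candidates = {
    'gradient_boosting': GradientBoostingClassifier(random_state=0),
    'logistic_regression': LogisticRegression(max_iter=1000),
}
results = {name: evaluate_on_holdout(m, X_train, y_train, X_test, y_test)
           for name, m in candidates.items()}

for name, acc in results.items():
    print(f"{name:<20} accuracy={acc:.3f}")
```

Because the split is created once and passed to every call, no candidate can be scored on different rows than the others.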

The purpose of this first part of the blog is to show how you can randomly split your ML data and end up with two HANA tables: a Train table and a Test table (hold-out). We will cover two scenarios: (i) working from a HANA dataframe with PAL partitioning, and (ii) working from a Pandas dataframe with scikit-learn. We will conclude with a third case where the test data was already prepared and given to you.

In-database Train-Test Split

Let’s use Census as our input dataset. We define a HANA dataframe pointing to the Census table as follows:

from hana_ml import dataframe as hd
conn = hd.ConnectionContext(userkey='MLMDA_KEY')
df_remote = conn.table('CENSUS', schema='APL_SAMPLES')
df_remote.head(3).collect()

The function train_test_val_split from HANA ML is what we need to prepare a hold-out dataset. We ask for a 75%-25% split and we provide a random seed:

key_col = 'id'
target_col = 'class'
# Random Split
from hana_ml.algorithms.pal.partition import train_test_val_split
train_remote, test_remote, valid_remote = train_test_val_split(
    data=df_remote,
    id_column=key_col,
    random_seed=317,
    partition_method='stratified',
    stratified_column=target_col,
    training_percentage=0.75,
    testing_percentage=0.25,
    validation_percentage=0)
# Number of rows and columns
print('Training', train_remote.shape)
print('Hold-out', test_remote.shape)
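To see what a stratified split aims for, here is a local sketch that uses the stratify argument of scikit-learn's train_test_split as a stand-in for PAL partitioning. The toy dataframe is an assumption; it is not the Census table.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Toy data with an imbalanced binary target (illustrative only)
df_toy = pd.DataFrame({'id': range(1000),
                       'class': [1] * 240 + [0] * 760})

# 75%-25% split that preserves the share of each class
train_toy, test_toy = train_test_split(
    df_toy, test_size=0.25, random_state=317, stratify=df_toy['class'])

rate_all = df_toy['class'].mean()
rate_train = train_toy['class'].mean()
rate_test = test_toy['class'].mean()

print(f"Positive rate, all rows : {rate_all:.3f}")
print(f"Positive rate, training : {rate_train:.3f}")
print(f"Positive rate, hold-out : {rate_test:.3f}")
```

With stratification, the share of positive cases stays close to the overall share in both buckets, which matters most when one class is rare.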

Finally, we persist the training table and the hold-out table in the HANA database for classification modeling later:

train_remote.save('CENSUS_TRAIN', force=True)
test_remote.save('CENSUS_TEST', force=True)

Local Train-Test Split

Another option to prepare your hold-out dataset is to use the function train_test_split from scikit-learn. Here is an example with the California housing dataset:

import pandas as pd
from sklearn.datasets import fetch_california_housing
from sklearn.model_selection import train_test_split
# Prepare Input dataframe
bunch_local = fetch_california_housing()
df = pd.DataFrame(bunch_local.data)
feat_names = ['Income','Age','Rooms','Bedrooms','Population','Occupation','Latitude','Longitude']
df.columns = feat_names
df['Price'] = bunch_local.target
df.insert(0, 'Id', df.index)
df.head(3)

We ask for an 80%-20% split and we provide a random seed:

train, test = train_test_split(df, test_size=0.2, random_state=37)
# Number of rows and columns
print('Training', train.shape)
print('Hold-out', test.shape)
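Before persisting the two tables, a quick check that no row ended up in both buckets costs very little. The sketch below uses a small made-up dataframe with an Id column; the names df_demo, train_demo, and test_demo are hypothetical.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Small made-up dataframe with a unique key (assumption)
df_demo = pd.DataFrame({'Id': range(100),
                        'Price': [i * 1.5 for i in range(100)]})
train_demo, test_demo = train_test_split(df_demo, test_size=0.2, random_state=37)

# Keys present in both buckets would mean the hold-out is not really held out
shared_ids = set(train_demo['Id']) & set(test_demo['Id'])

print('Rows in both tables:', len(shared_ids))
print('Rows accounted for :', len(train_demo) + len(test_demo), 'of', len(df_demo))
```

The same two checks (no shared keys, no lost rows) apply to any split, whichever tool produced it.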

We persist the two tables in HANA for regression modeling in part 2:

from hana_ml import dataframe as hd
conn = hd.ConnectionContext(userkey='MLMDA_KEY')
train_remote = hd.create_dataframe_from_pandas(
    connection_context=conn,
    pandas_df=train,
    table_name='HOUSING_TRAIN',
    force=True,
    drop_exist_tab=True,
    replace=False)
test_remote = hd.create_dataframe_from_pandas(
    connection_context=conn,
    pandas_df=test,
    table_name='HOUSING_TEST',
    force=True,
    drop_exist_tab=True,
    replace=False)

When the Hold-out dataset already exists

If someone provides you with the hold-out dataset, does that mean you can start the evaluation right away? No. Before jumping blindly into evaluation, it is preferable to run a few checks. You should verify that the hold-out data and the training data are similar.

For example, in the case of a binary classification, a Test dataset (hold-out) whose target has 0s but no 1s will only allow you to assess one aspect of the model: its ability to correctly predict the negative class. This could happen if the hold-out dataset was inappropriately built by selecting the top n rows in a table sorted by the class.
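One way to catch this situation is to compare the class shares of the target in both datasets. The sketch below uses two small made-up pandas Series; the helper name class_shares is hypothetical.

```python
import pandas as pd

# Made-up targets: the hold-out was built badly and has no positive case
train_target = pd.Series([0] * 70 + [1] * 30, name='class')
test_target = pd.Series([0] * 25, name='class')

def class_shares(target):
    """Share of each class value in a target column."""
    return target.value_counts(normalize=True)

# Side-by-side shares; a class absent from one dataset shows up as 0.0
comparison = pd.DataFrame({'train': class_shares(train_target),
                           'test': class_shares(test_target)}).fillna(0.0)
print(comparison)

missing_in_test = sorted(set(train_target.unique()) - set(test_target.unique()))
print('Classes missing from the hold-out:', missing_in_test)
```

Any class listed as missing means the hold-out cannot measure how well the model predicts that class.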

Features must be checked too. You don't want to evaluate a classification or regression model with an important feature, say house_owner, which takes the values Yes/No in the Training dataset but yes/no in the Test dataset: to the model, these are different categories that it has never seen during training.
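A simple check is to list, for each categorical feature, the values that appear in the hold-out but never in the training data. This is a sketch on made-up dataframes; the helper name unseen_categories and the column list are assumptions.

```python
import pandas as pd

# Made-up data reproducing the Yes/No versus yes/no mismatch
train_feat = pd.DataFrame({'house_owner': ['Yes', 'No', 'Yes', 'No']})
test_feat = pd.DataFrame({'house_owner': ['yes', 'no', 'yes']})

def unseen_categories(train_df, test_df, columns):
    """Hypothetical helper: hold-out values never seen in training, per column."""
    report = {}
    for col in columns:
        seen = set(train_df[col].dropna().unique())
        unseen = set(test_df[col].dropna().unique()) - seen
        if unseen:
            report[col] = sorted(unseen)
    return report

report = unseen_categories(train_feat, test_feat, ['house_owner'])
print(report)
```

An empty report does not prove the two datasets are similar, but a non-empty one is a clear signal to fix the data before evaluating.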
