There are a lot of sources where you can find some rudimentary information about how a HANA savepoint is working or which event is triggering one. But not really how the different phases are working or which dependencies are there and how you can determine the thread details. In context of a customer issue I have done some researching and reengineering.

This means if you do not know what a savepoint or a delta merge is, stop reading now

I will only explain them on a high-level basis.

1. General stuff about savepoints

2. Impacts of a long running savepoint

3. Customer challenge

4. How to analyze it

5. Improvements

„ SAP HANA persists in-memory data by using savepoints. Each SAP HANA service has its own separate savepoints. A savepoint is a periodic point in time, when all the changed data is written to storage, in the form of pages.

During a savepoint operation, the SAP HANA database flushes all changed data from memory to the data volumes. The data belonging to a savepoint represents a consistent state of the data on disk and remains so until the next savepoint operation has completed. Redo log entries are written to the log volumes for all changes to persistent data

One goal of performing savepoints is to speed up restart: when starting up the system, logs need not be processed from the beginning, but only from the last savepoint position. Savepoints are coordinated across all processes (called SAP HANA services) and instances of the database to ensure transaction consistency. By default, savepoints are performed every five minutes, but this can be configured.

Savepoints normally overwrite older savepoints, but it is possible to freeze a savepoint for future use; this is called a snapshot. Snapshots can be replicated in the form of full data backups, which can be used to restore a database to a specific point in time. This can be useful in the event of data corruption, for instance. In addition to data backups, smaller periodic log backups ensure the ability to recover from fatal storage faults with minimal loss of data.“

ALTER SYSTEM SAVEPOINT

global.ini -> [persistence] -> savepoint_interval_s

=> Default: 300 (s) = 5min

Triggers:

◉ startup

◉ soft shutdown

◉ backup

◉ snapshot

◉ reclaim datavolume

◉ auto merge function (mergedog)

◉ manual

Hard manual merge:

MERGE DELTA OF "<table_name>"

Immediate merge for all partitions of the system with respect to the available system resources.

Forced manual merge:

MERGE DELTA OF "<table_name>" WITH PARAMETERS ('FORCED_MERGE' = 'ON')

Immediate merge for all partitions of the system with no respect to the available system resources.

Merge of a single partition:

MERGE DELTA OF "<table_name>" PART <part_id> ...

Note: If you trigger merges manually, you have to take attention to the little difference between auto merges and manual merges: The compression of the table will only be triggered in the auto merge scenario! You have to trigger them in the manual scenario by your own!

There are 2 critical blocking time frames during merge in cause of blocking phases. One is called “critical phase” which in my experience causes not so many issues as the other one called “wait for lock”.

This means if you do not know what a savepoint or a delta merge is, stop reading now

I will only explain them on a high-level basis.

1. General stuff about savepoints

2. Impacts of a long running savepoint

3. Customer challenge

4. How to analyze it

5. Improvements

1. General stuff about savepoints

„ SAP HANA persists in-memory data by using savepoints. Each SAP HANA service has its own separate savepoints. A savepoint is a periodic point in time, when all the changed data is written to storage, in the form of pages.

During a savepoint operation, the SAP HANA database flushes all changed data from memory to the data volumes. The data belonging to a savepoint represents a consistent state of the data on disk and remains so until the next savepoint operation has completed. Redo log entries are written to the log volumes for all changes to persistent data

One goal of performing savepoints is to speed up restart: when starting up the system, logs need not be processed from the beginning, but only from the last savepoint position. Savepoints are coordinated across all processes (called SAP HANA services) and instances of the database to ensure transaction consistency. By default, savepoints are performed every five minutes, but this can be configured.

Savepoints normally overwrite older savepoints, but it is possible to freeze a savepoint for future use; this is called a snapshot. Snapshots can be replicated in the form of full data backups, which can be used to restore a database to a specific point in time. This can be useful in the event of data corruption, for instance. In addition to data backups, smaller periodic log backups ensure the ability to recover from fatal storage faults with minimal loss of data.“

ALTER SYSTEM SAVEPOINT

global.ini -> [persistence] -> savepoint_interval_s

=> Default: 300 (s) = 5min

Triggers:

◉ startup

◉ soft shutdown

◉ backup

◉ snapshot

◉ reclaim datavolume

◉ auto merge function (mergedog)

◉ manual

Hard manual merge:

MERGE DELTA OF "<table_name>"

Immediate merge for all partitions of the system with respect to the available system resources.

Forced manual merge:

MERGE DELTA OF "<table_name>" WITH PARAMETERS ('FORCED_MERGE' = 'ON')

Immediate merge for all partitions of the system with no respect to the available system resources.

Merge of a single partition:

MERGE DELTA OF "<table_name>" PART <part_id> ...

Note: If you trigger merges manually, you have to take attention to the little difference between auto merges and manual merges: The compression of the table will only be triggered in the auto merge scenario! You have to trigger them in the manual scenario by your own!

There are 2 critical blocking time frames during merge in cause of blocking phases. One is called “critical phase” which in my experience causes not so many issues as the other one called “wait for lock”.

| Blocking: “wait for lock” | ConsistentChangeLock => If this lock is held by other threads / transactions, the duration of this phase is increasing => at the same time blocking all DMLs (INSERT, UPDATE or DELETE etc.) are blocked by the savepoint with ConsistentChangeLock |

| Blocking: “critical” | remaining I/O writing are performed. During this time other transactions aren’t allowed to perform changes on the underlying table and are blocked with ConsistentChangeLock. |

=> A blocking phase holds locks until the phase ends

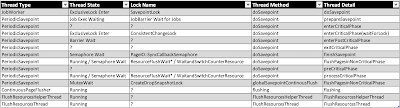

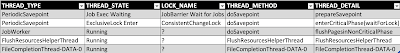

Here you can see the different thread details of a savepoint (as reminder to search for inside the threads):

Phases order from top to bottom (example!):

2. Impact of long running savepoints

If a Delta Merge takes longer than 300s it can happen that it blocks the next savepoint in cause of the ConsistentChangeLock in the waitForLock phase. This can lead to a complete hanging system because the savepoint will block all other modification operations in the system. The same if your disks are full or you are struggling with the performance of your I/O subsystem.

You should check your system and get a feeling how big your tables are and how long do they normally spend for delta merge. Additionally you should know how long a normal savepoint takes in your system. Just query the monitoring views for this runtimes.

For this you should frequently check the “mini checks” within the SQL collection (Note: 1969700). In this SQL are also checks especially for merges and savepoints.

3. Customer challenge

The customer has some quite big systems with > 6TB of data in HANA 2.0 SPS03. A lot of tables are blowing up the 2 billion row limit. So this means we have also a lot of partitions.

In the night there is a lot of parallel load on the system. There are 40 – 50.000 statements/sec. In this high load time frame the affected tables filled with huge amount of data which will trigger the mergedog in cause of the merge decision.

This means we have a high load in cause of the “normal” parallel workload and we have additionally the internal system jobs like delta merges and savepoints at this point in time. The customer had bad performance experience in this time frame. So how to analyze the root cause?

Affected time frame: 23:30 – 00:15

4. How to analyze it

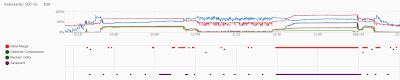

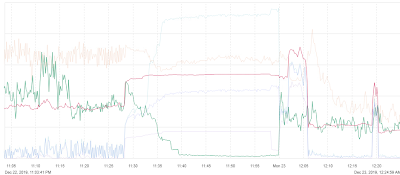

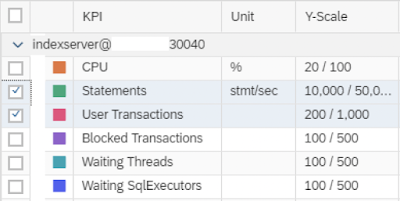

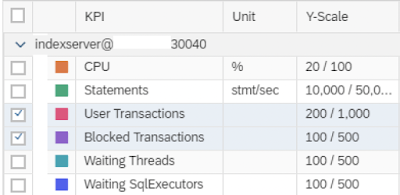

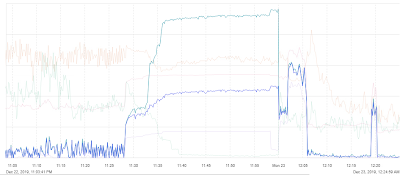

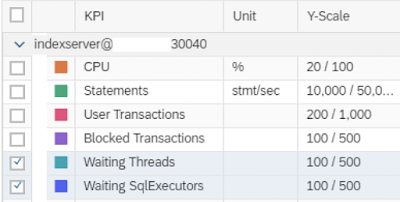

Via HANA Cockpit (use the Workload Analysis within the timeframe)

◉ use different KPI in cause of the different scaling of the axes

◉ mark the affected time frame to get details

=> 30k stmt/sec

◉ >400 waiting threads

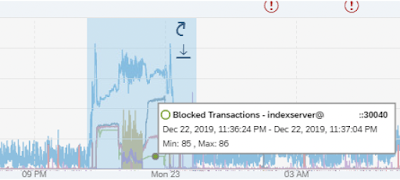

◉ A lot of blocked transaction at this time

◉ Mark the time frame and choose “Background Jobs”:

◉ Runtime of the merge (red) and savepoint (purple)

◉ 31min runtime of the merge (11:29 – 12:00)

◉ 30min runtime of the savepoint (11:29 – 11:59)

◉ with the release of the consistentchangelock by the delta merge the savepoint could be finished earlier than the merge finished its postprocessing

You can see that in this 30min of the delta merge and the parallel savepoint no other operation could be executed, in cause of the consistent change lock of both of them. This leads to a hanging system. The blocked transactions and waiting threads are increasing till these locks are released.

To illustrate this in detail, you will see how this scenario affected the other transactions/threads:

◉ more user transactions but less finished statements in cause of the ConsistentChangeLock

◉ more blocked transactions in cause of the ConsistentChangeLock

◉ finally you see the effect on a thread bases: waiting threads and SQL Executors

You can also use the HANA Studio or the SAP HANA Database Explorer (tool inside HANA Cockpit for SQL executions) for more details.

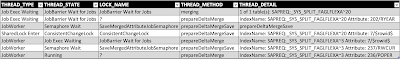

Therefor use some of these statements:

1969700:

HANA_Tables_ColumnStore_Merges_1.00.90+_ESS

HANA_Threads_ThreadSamples_FilterAndAggregation_1.00.122.08+

Modification Section: ‘?’ APP_USER

◉ The App User “?” stands of the internal threads

◉ here I have filtered for the merge process

◉ you can see the shared lock as consistentchangelock on table partition 20 of FAGLFLEXA

◉ afterwards other partitions of this table are also processed

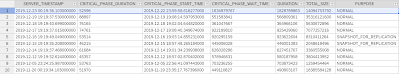

select * from _SYS_STATISTICS.HOST_SAVEPOINTS_BASE where critical_phase_wait_time > 300000000 order by critical_phase_start_time desc;

◉ All time-based values have the unit microseconds (see guide: SAP_HANA_SQL_and_System_Views_Reference)

◉ All size values based on Byte

◉ Critical phase is pretty short

◉ Critical phase wait time is a bit too long

If you analyzed it manually via the SQL Statement Collection or the HANA Cockpit and want some more details you can trigger a RTE dump with more details about the thread:

indexserver.ini -> [persistence] -> runtimedump_for_blocked_savepoint_timeout = <seconds>

5. Improvements

Improve savepoint in high load phases

Savepoints are required to write modified data down to disk (SAP Note 2100009). Normally it is the main intention to shorten the blocking savepoint phase as much as possible and accept longer savepoint durations at the same time. During mass imports the opposite can be better: Shorter savepoints with the risk of increased blocking phases. Shorter savepoints can reduce the amount of data written to disk and they can reduce the amount of logs that need to be kept, reducing the risk of file system overflows.

During mass changes the following parameter adjustments can be considered to reduce the overall savepoint duration:

lower values for global.ini -> [persistence] -> savepoint_max_pre_critical_flush_duration (e.g. 300 instead of 900)

higher values for global.ini -> [persistence] -> savepoint_pre_critical_flush_retry_threshold (e.g. 10000 instead of 3000)

Common reasons for ConsistentChangeLock contention

For SAP HANA <= 2.0 SPS 03 the time an internal activity like a table optimization holds a ConsistentChangeLock during attribute value container writes can be controlled with the following SAP HANA parameter (default: 268435456 byte -> 256 MB):

global.ini -> [persistence] -> non_trans_cch_block_size

A reduction, e.g. to smaller values like 134217728 (128 MB) or 16777216 (16 MB) is typically acceptable and can make sure that the ConsistentChangeLock is released more frequently.

With SAP HANA >= 2.0 SPS 04 the implementation has changed and setting this parameter is no longer required.

Improve merge process

indexserver.ini -> [indexing] -> parallel_merge_threads

=> default: 2

This parameter stands for the number of attributes to merge in parallel for each table and partitions! So if the merge dog identifies multiple tables and you chosen a too high number, you can overload your system.

The system uses cost functions to decide which table to merge, when, and in which order. There are also cost functions that control how many tables are merged at the same time and how many threads are used to merge a single table.

Note: Every partition of a partitioned table is treated internally as a standalone table with its own data and delta store!

Known time frames to fill tables

If you know the exact time frame which is trigger / event based or have a fix starting time, you can disable the merge before the workload is started and the tables are getting filled. Afterwards you can turn on the merge again and trigger a manual merge (check compression!).

No comments:

Post a Comment