An important partitioning strategy to improve performance is to match partitions wherever possible with the most frequently queried data so that data pruning is possible.

Partition pruning takes place automatically in the background and tries to eliminate any unnecessary partition from the selection which is not essential for the query result. If your partition schema are designed to support this, pruning can avoid accessing and loading into memory partitions which are not required which reduces the overall load on the system and improve memory usage. The classic use case for effective pruning is where date-based partitions are used. For example, if a table is partitioned by day, month or year, a query restricted to the data of a specific date period is executed only on the partition with data for the selected date period.

Partition pruning is based on the partition definition. The query optimizer analyzes the WHERE clause of queries to determine whether or not the filters match the given partitioning specification of a table. If a match is found, it may be possible to target only the specific partitions that hold the data and thus reduce the number of partitions being queried.

I am going to provide you the steps and guidance needed to manage a sliding window scenario, with an example.

Let’s start from a non-partitioned table: PFC_COMBINED_FACTS and it contains 370 m rows and we have Data Service job running everyday to insert or update transaction data. The table has a composite primary key (PFCHOLIK, TODATE_FILTER) and the data type for column TODATE_FILTER is DATE and this column represent when the financial transaction happen at source system.

Let’s run a query to find out how many transaction rows per year

SELECT YEAR("TODATE_FILTER"), COUNT(*) FROM "DLIU"."PFC_COMBINED_FACTS"

GROUP BY YEAR("TODATE_FILTER") ORDER BY YEAR("TODATE_FILTER");

We can see from query result there is not too much before 2015 and also we are told by business the most frequent access date range is last 12 months.

Partition pruning takes place automatically in the background and tries to eliminate any unnecessary partition from the selection which is not essential for the query result. If your partition schema are designed to support this, pruning can avoid accessing and loading into memory partitions which are not required which reduces the overall load on the system and improve memory usage. The classic use case for effective pruning is where date-based partitions are used. For example, if a table is partitioned by day, month or year, a query restricted to the data of a specific date period is executed only on the partition with data for the selected date period.

Partition pruning is based on the partition definition. The query optimizer analyzes the WHERE clause of queries to determine whether or not the filters match the given partitioning specification of a table. If a match is found, it may be possible to target only the specific partitions that hold the data and thus reduce the number of partitions being queried.

I am going to provide you the steps and guidance needed to manage a sliding window scenario, with an example.

Table Partition & Memory Usage

Let’s start from a non-partitioned table: PFC_COMBINED_FACTS and it contains 370 m rows and we have Data Service job running everyday to insert or update transaction data. The table has a composite primary key (PFCHOLIK, TODATE_FILTER) and the data type for column TODATE_FILTER is DATE and this column represent when the financial transaction happen at source system.

Let’s run a query to find out how many transaction rows per year

SELECT YEAR("TODATE_FILTER"), COUNT(*) FROM "DLIU"."PFC_COMBINED_FACTS"

GROUP BY YEAR("TODATE_FILTER") ORDER BY YEAR("TODATE_FILTER");

We can see from query result there is not too much before 2015 and also we are told by business the most frequent access date range is last 12 months.

Let’s run the UNLOAD statement

UNLOAD "DLIU"."PFC_COMBINED_FACTS"

After statement run, the data is unloaded from memory and then stored on the disk and the loaded status is ‘NO’.

What will happen if user wants to query only one day of transaction from this table? for an example TODATE_FILTER = ‘20200101’

SELECT * FROM "DLIU"."PFC_COMBINED_FACTS" WHERE "TODATE_FILTER" = '20200101'

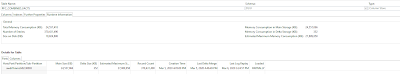

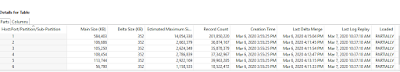

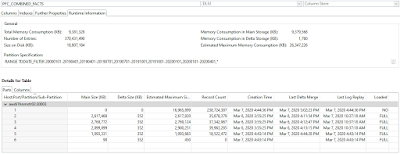

Let’s open the table details and you can see this simple query is expensive for non-partition table and a one day query has forced HANA to load quite amount of data into memory. Here total memory usage 24 GB. The loaded status has changed from NO to PARTIALLY as well

Do we need to load all data into memory in order to answer this one day query? Can we just load the data of most frequent access date range? We can design a partition schema which keep most frequent used data in memory but unload the less frequent used one to optimize the memory usage and improve the query performance.

Let’s alter the non-partitioned table and apply the range partitions. It will take around 10 minutes to create partitions and all data will be allocated into partitions based on TODATE_FILTER value

ALTER TABLE "DLIU"."PFC_COMBINED_FACTS" PARTITION BY RANGE ("TODATE_FILTER")

(

PARTITION '20000101' <= VALUES < '20190101',

PARTITION '20190101' <= VALUES < '20190401',

PARTITION '20190401' <= VALUES < '20190701',

PARTITION '20190701' <= VALUES < '20191001',

PARTITION '20191001' <= VALUES < '20200101',

PARTITION OTHERS

);

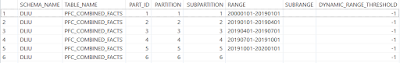

After partition schema created let’s check the partition metadata.

SELECT * FROM M_CS_PARTITIONS WHERE "SCHEMA_NAME" = 'DLIU' AND "TABLE_NAME" = 'PFC_COMBINED_FACTS'

SELECT * FROM M_CS_TABLES WHERE "SCHEMA_NAME" = 'DLIU' AND "TABLE_NAME" = 'PFC_COMBINED_FACTS'

Here are all partition ranges and the last partition is OTHERS

We can see that the partition 6 has a date range TODATE_FILTER > 20200101 and contains 18,522,472 rows and estimate memory usage is only 1.1 GB. Ideally the only partition required for previous

user query is the partition 6. Based on different use case the most frequent used data could be last 6 month or even one year. Our requirement is LAST 12 months with sliding window. When a new period starts, a new partition is created to accommodate the new data and at the same time the aged partition is merged into oldest partition. The oldest partition is always unloaded from memory at beginning of day, for other partitions we keep them in memory.

Sliding Window Scenario

The concept of a sliding window scenario is to manage and keep the same number of partitions on a partitioned table over time. When a new period starts, a new partition is created to accommodate the new data and at the same time the aged partition is merged into oldest partition The oldest partition can be moved into warm storage but for now we want to keep it simple. still kept in hot store but unload from memory and store on the disk. In case users wants to query this less frequent used data and it can be easily loaded into memory.

These are the steps you need to follow to develop a sliding window scenario

◉ Profile your data and analyze query pattern to identify partition specification and partition column

◉ Discuss with business to find out what are most frequent used period

◉ Design your initial partition schema with ALTER TABLE statement and apply it

◉ Design a manage partition sliding window procedure to handling adding and merging partitions

◉ Schedule your procedure to execute automatically

◉ Update partition logic to adapt the business requirement changes

In future with data life cycle in place we will move oldest partition away from hot store into warm store, it could be dynamic tiering or NSE.

Setting up the Sliding Window Procedure

Here is the code with which you can automate the processing of managing a sliding window. The procedure has been created to manage a sliding window scenario for a partitioned table, which we applied initial partition schema in earlier step. Please test it properly regarding to business requirement and query performance.

The partitioned table has uneven partitions, the first one is the largest and oldest partition which contains data from 2000 to 2018, and from 2019, each partition is designed with 3 month periods. You need to figure out the balance between number of partitions and performance improvement. This stored procedure can be scheduled to run everyday. The procedure checks if the new partition need to be created today and is already created or not; if not then it does following:

◉ Create a new partition to accommodate new data, for our case, if procedure run on April 1st, it will add a new partition with the range 20200101<= VALUE < 20200401

◉ Merge the aged partition into the oldest partition

Before merge:

partition 1: 20000101 <= value < 20190101

partition 2: 20190101 <= value < 20190401

After merge:

partition 1: 20000101 <= value < 20190401

◉ Merges the delta storage of the partitioned table into the main storage to optimize performance

◉ Unload the oldest partition from memory

CREATE PROCEDURE "UTL"."SP_MANAGE_PARTITION_SLIDINGWINDOW"

(

IN P_SCHEMA_NAME NVARCHAR(50),

IN P_TABLE_NAME NVARCHAR(50),

IN P_PARTITION_FIELD NVARCHAR(50),

IN P_INTERVAL INT

)

LANGUAGE SQLSCRIPT

SQL SECURITY INVOKER

AS

BEGIN

DECLARE v_MONTHS INT := 0;

DECLARE v_LOOP INT := 0;

DECLARE i INT;

DECLARE v_SQL_RDF_PARTITION NVARCHAR(500);

DECLARE v_FROMDATE NVARCHAR(8);

DECLARE v_TODATE NVARCHAR(8);

v_PARTITIONS = SELECT PART_ID,

LEFT(RANGE,8) AS FROMDATE,

RIGHT(RANGE,8) AS TODATE

FROM M_CS_PARTITIONS

WHERE "SCHEMA_NAME" = :P_SCHEMA_NAME

AND "TABLE_NAME" = :P_TABLE_NAME

AND PARTITION > 0

AND RANGE <> '';

-- check if it is time to create a new partition

SELECT MONTHS_BETWEEN(MAX(TODATE),TO_NVARCHAR(NOW(),'YYYYMMDD')) INTO v_MONTHS

FROM :v_PARTITIONS;

-- our partition granularity is 3 months

IF (:v_MONTHS >=:P_INTERVAL) THEN

-- recalculate the table partitions

v_NEW_PARITIONS = SELECT ROW_NUMBER() OVER (ORDER BY FROMDATE) AS ID,FROMDATE,TODATE FROM

(

-- first two partitions will be merged

SELECT MIN(FROMDATE) AS FROMDATE,MAX(TODATE) AS TODATE

FROM :v_PARTITIONS

WHERE PART_ID IN (1,2)

UNION ALL

-- no change to the rest of partitions

SELECT FROMDATE, TODATE

FROM :v_PARTITIONS

WHERE PART_ID NOT IN (1,2)

UNION ALL

-- new partition will be added

SELECT MAX(TODATE) AS FROMDATE, CONCAT(TO_NVARCHAR(NOW(),'YYYYMM'),'01') AS TODATE

FROM :v_PARTITIONS

);

SELECT MAX(ID) INTO v_LOOP

FROM :v_NEW_PARITIONS;

-- prepare for alter partition script

v_SQL_RDF_PARTITION := 'ALTER TABLE "' || :P_SCHEMA_NAME || '"."' || :P_TABLE_NAME || '" PARTITION BY RANGE ("' || :P_PARTITION_FIELD || '") ( ';

FOR i IN 1..:v_LOOP DO

SELECT FROMDATE,TODATE INTO v_FROMDATE,v_TODATE FROM :v_NEW_PARITIONS WHERE ID = :i;

v_SQL_RDF_PARTITION := v_SQL_RDF_PARTITION || ' PARTITION ' || :v_FROMDATE || '<= VALUES < ' || :v_TODATE || ',';

END FOR;

--lastly we always need 'OTHERS' partition

v_SQL_RDF_PARTITION := v_SQL_RDF_PARTITION || ' PARTITION OTHERS ) ';

EXECUTE IMMEDIATE :v_SQL_RDF_PARTITION;

END IF;

-- trigger delta merge for all partitions

EXECUTE IMMEDIATE 'MERGE DELTA OF "' || :P_SCHEMA_NAME || '"."' || :P_TABLE_NAME || '"';

--unload 1st partition from memory

EXECUTE IMMEDIATE 'UNLOAD "' || :P_SCHEMA_NAME || '"."' || :P_TABLE_NAME || '" PARTITION (1)';

END

The procedure is scheduled to run everyday 2:00 am and make sure to avoid the run at database peak time. It will create and merge partition if needed otherwise just doing delta merge and unload the oldest partition. Make sure to review this with business at every specific periods. You may need to add level 2 if needed here we only need level 1 partition.

CALL "UTL"."SP_MANAGE_PARTITION_SLIDINGWINDOW" ('DLIU','PFC_COMBINED_FACTS','TODATE_FILTER',3);

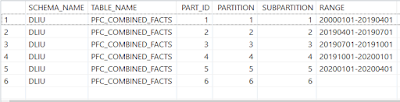

After April 1st run the partition schema will be changed to as below. The initial partition 2 (aged one) will be merged into the first partition (oldest) and the partition 5 (New) will be added with range

20200101 <= VALUE < 20200401, and the partition 6 (OTHERS) will be the range VALUE >= 20200401

The oldest partition is unloaded from memory and kept on the disk.

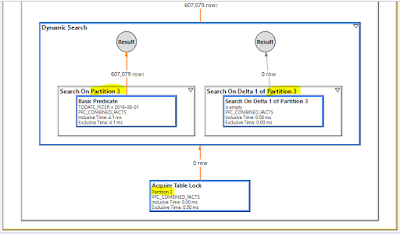

To prove this work we can execute the select statement with visualize plan. you can see it only search for partition 3, and the partition pruning play the magic.

SELECT “TODATE_FILTER” FROM “DLIU”.”PFC_COMBINED_FACTS” WHERE “TODATE_FILTER” = ‘20190801’

No comments:

Post a Comment